Note:

Original topic: BR 备份报错 txnLockFast

[TiDB Version]

br backup db --db mydb --pd "192.168.x.x:2379" -s "local:///xxx/xxx" --log-file /xxx/xxx/backup.log

Backup log error:

[2022/11/14 20:45:34.939 +08:00] [WARN] [backoff.go:158] ["txnLockFast backoffer.maxSleep 80000ms is exceeded, errors:\n[BR:Common:ErrUnknown]internal error at 2022-11-14T20:44:28.904862754+08:00\n[BR:Common:ErrUnknown]internal error at 2022-11-14T20:44:49.916982047+08:00\n[BR:Common:ErrUnknown]internal error at 2022-11-14T20:45:10.928640175+08:00\nlongest sleep type: txnLockFast, time: 80514ms"]

[2022/11/14 20:45:34.940 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=11335] [take=25m21.509103139s]

[2022/11/14 20:45:34.940 +08:00] [INFO] [client.go:594] ["backup started"] [range-sn=11498] [startKey=7480000000000078F55F720000000000000000] [endKey=7480000000000078F55F72FFFFFFFFFFFFFFFF00] [rateLimit=0] [concurrency=4]

[2022/11/14 20:45:34.940 +08:00] [ERROR] [client.go:1055] ["fail to backup"] [range-sn=11497] [store-id=1] [StoreID=1] [retry=0] [stack="github.com/pingcap/tidb/br/pkg/backup.SendBackup\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:1055\ngithub.com/pingcap/tidb/br/pkg/backup.(*pushDown).pushBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/push.go:92"]

[2022/11/14 20:45:34.940 +08:00] [WARN] [push.go:212] ["skipping disconnected stores"] [range-sn=11497] [error="[BR:Common:ErrFailedToConnect]failed to create backup stream to store 1: rpc error: code = Canceled desc = context canceled"]

[2022/11/14 20:45:34.940 +08:00] [INFO] [client.go:610] ["finish backup push down"] [range-sn=11497] [small-range-count=0]

[2022/11/14 20:45:34.940 +08:00] [INFO] [client.go:718] ["start fine grained backup"] [range-sn=11497] [incomplete=1]

[2022/11/14 20:45:34.940 +08:00] [ERROR] [client.go:1055] ["fail to backup"] [range-sn=11497] [store-id=6] [StoreID=6] [retry=0] [stack="github.com/pingcap/tidb/br/pkg/backup.SendBackup\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:1055\ngithub.com/pingcap/tidb/br/pkg/backup.(*pushDown).pushBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/push.go:92"]

[2022/11/14 20:45:34.940 +08:00] [WARN] [backoff.go:179] ["unexpected error, stop to retry"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:155\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:192\ngithub.com/pingcap/tidb/br/pkg/utils.WithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/retry.go:51\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:189\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRange\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:600\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRanges.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:562\ngithub.com/pingcap/tidb/br/pkg/utils.(*WorkerPool).ApplyOnErrorGroup.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/worker.go:73\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571"]

......

......

[2022/11/14 20:45:35.705 +08:00] [WARN] [backoff.go:179] ["unexpected error, stop to retry"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:155\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:192\ngithub.com/pingcap/tidb/br/pkg/utils.WithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/retry.go:51\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:189\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRange\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:600\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRanges.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:562\ngithub.com/pingcap/tidb/br/pkg/utils.(*WorkerPool).ApplyOnErrorGroup.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/worker.go:73\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571"]

[2022/11/14 20:45:35.705 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=19248] [take=45.618µs]

[2022/11/14 20:45:35.942 +08:00] [ERROR] [client.go:680] ["can not find leader"] [key=7480000000000078FFF45F698000000000FF0000030000000000FB] [stack="github.com/pingcap/tidb/br/pkg/backup.(*Client).findRegionLeader\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:680\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).handleFineGrained\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:880\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).fineGrainedBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:737"]

[2022/11/14 20:45:35.942 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=11497] [take=1.544899708s]

[2022/11/14 20:45:37.939 +08:00] [WARN] [push.go:103] ["reset the connection in push"] [range-sn=11495] [store-id=50288811]

[2022/11/14 20:45:37.939 +08:00] [WARN] [push.go:103] ["reset the connection in push"] [range-sn=11478] [store-id=50288811]

[2022/11/14 20:45:37.939 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=11495] [take=4.907649714s]

[2022/11/14 20:45:37.939 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=11478] [take=22.184525987s]

[2022/11/14 20:45:37.939 +08:00] [INFO] [client.go:574] ["Backup Ranges"] [take=149ns]

[2022/11/14 20:45:37.940 +08:00] [INFO] [client.go:768] ["[pd] stop fetching the pending tso requests due to context canceled"] [dc-location=global]

[2022/11/14 20:45:37.940 +08:00] [INFO] [client.go:706] ["[pd] exit tso dispatcher"] [dc-location=global]

[2022/11/14 20:45:37.940 +08:00] [INFO] [client.go:768] ["[pd] stop fetching the pending tso requests due to context canceled"] [dc-location=global]

[2022/11/14 20:45:37.940 +08:00] [INFO] [client.go:706] ["[pd] exit tso dispatcher"] [dc-location=global]

[2022/11/14 20:45:37.941 +08:00] [INFO] [metafile.go:592] ["exit write metas by context done"]

[2022/11/14 20:45:37.947 +08:00] [INFO] [collector.go:204] ["units canceled"] [cancel-unit=2]

[2022/11/14 20:45:38.143 +08:00] [INFO] [collector.go:70] ["Database Backup failed summary"] [total-ranges=7755] [ranges-succeed=0] [ranges-failed=7755] [backup-total-ranges=19249] [unit-name="range start:74800000000000794a5f69800000000000000500 end:74800000000000794a5f698000000000000005fb"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:155\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:192\ngithub.com/pingcap/tidb/br/pkg/utils.WithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/retry.go:51\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:189\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRange\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:600\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRanges.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:562\ngithub.com/pingcap/tidb/br/pkg/utils.(*WorkerPool).ApplyOnErrorGroup.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/worker.go:73\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571"] [unit-name="range start:7480000000000080a55f69800000000000000200 end:7480000000000080a55f698000000000000002fb"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:155\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:192\ngithub.com/pingcap/tidb/br/pkg/utils.WithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/retry.go:51\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:189\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRange\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:600\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRanges.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:562\ngithub.com/pingcap/tidb/br/pkg/utils.(*WorkerPool).ApplyOnErrorGroup.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/worker.go:73\ngolang.org.x/sync/errgroup.(*Group).Go.func1\n\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571"] [unit-name="range start:748000000000010eeb5f69800000000000000500 end:748000000000010eeb5f698000000000000005fb"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg

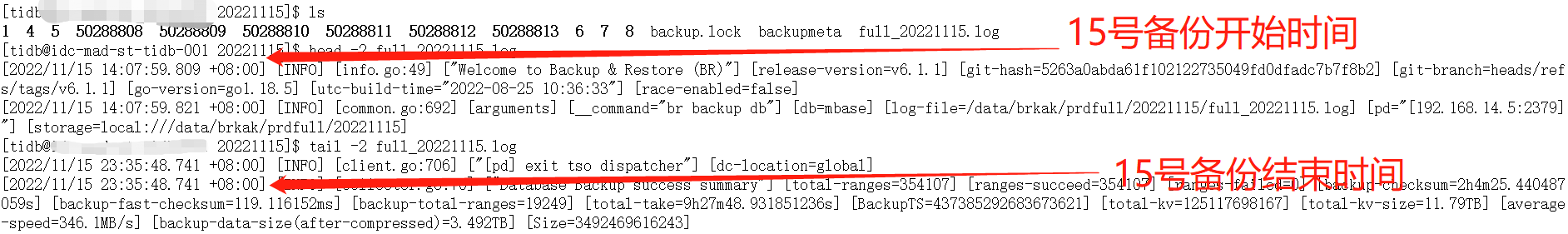

The version of BR is 6.1.1. I checked the start_key, and at that time, this table indeed had write operations, but there were no locks for this table during that period. Here is the log of today’s backup failure:

[2022/11/16 13:35:02.547 +08:00] [WARN] [backoff.go:158] ["txnLockFast backoffer.maxSleep 80000ms is exceeded, errors:\n[BR:Common:ErrUnknown]internal error at 2022-11-16T13:34:05.527343742+08:00\n[BR:Common:ErrUnknown]internal error at 2022-11-16T13:34:26.528966798+08:00\n[BR:Common:ErrUnknown]internal error at 2022-11-16T13:34:44.563046299+08:00\nlongest sleep type: txnLockFast, time: 80305ms"]

[2022/11/16 13:35:02.547 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=5759] [take=22m18.438076389s]

[2022/11/16 13:35:02.547 +08:00] [INFO] [client.go:594] ["backup started"] [range-sn=5769] [startKey=748000000000003BA15F69800000000000000500] [endKey=748000000000003BA15F698000000000000005FB] [rateLimit=0] [concurrency=4]

[2022/11/16 13:35:02.547 +08:00] [WARN] [backoff.go:179] ["unexpected error, stop to retry"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:155\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:192\ngithub.com/pingcap/tidb/br/pkg/utils.WithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/retry.go:51\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:189\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRange\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:600\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRanges.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:562\ngithub.com/pingcap/tidb/br/pkg/utils.(*WorkerPool).ApplyOnErrorGroup.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/worker.go:73\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571"]

[2022/11/16 13:35:02.547 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=5769] [take=372.686µs]

[2022/11/16 13:35:02.547 +08:00] [INFO] [client.go:594] ["backup started"] [range-sn=5770] [startKey=748000000000003BA25F720000000000000000] [endKey=748000000000003BA25F72FFFFFFFFFFFFFFFF00] [rateLimit=0] [concurrency=4]

[2022/11/16 13:35:02.547 +08:00] [WARN] [backoff.go:179] ["unexpected error, stop to retry"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:155\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:192\ngithub.com/pingcap/tidb/br/pkg/utils.WithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/retry.go:51\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:189\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRange\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:600\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRanges.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:562\ngithub.com/pingcap/tidb/br/pkg/utils.(*WorkerPool).ApplyOnErrorGroup.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/worker.go:73\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571"]

[2022/11/16 13:35:02.548 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=5770] [take=182.424µs]

[2022/11/16 13:35:02.548 +08:00] [INFO] [client.go:594] ["backup started"] [range-sn=5771] [startKey=748000000000003BA25F69800000000000000100] [endKey=748000000000003BA25F698000000000000001FB] [rateLimit=0] [concurrency=4]

......

......

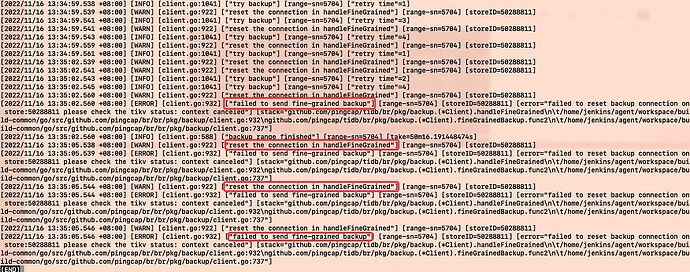

[2022/11/16 13:35:05.541 +08:00] [WARN] [client.go:922] ["reset the connection in handleFineGrained"] [range-sn=5768] [storeID=50288811]

[2022/11/16 13:35:05.541 +08:00] [ERROR] [client.go:932] ["failed to send fine-grained backup"] [range-sn=5768] [storeID=50288811] [error="failed to reset backup connection on store:50288811 please check the tikv status: context canceled"] [stack="github.com/pingcap/tidb/br/pkg/backup.(*Client).handleFineGrained\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:932\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).fineGrainedBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:737"]

[2022/11/16 13:35:05.543 +08:00] [WARN] [client.go:922] ["reset the connection in handleFineGrained"] [range-sn=5768] [storeID=50288811]

[2022/11/16 13:35:05.543 +08:00] [ERROR] [client.go:932] ["failed to send fine-grained backup"] [range-sn=5768] [storeID=50288811] [error="failed to reset backup connection on store:50288811 please check the tikv status: context canceled"] [stack="github.com/pingcap/tidb/br/pkg/backup.(*Client).handleFineGrained\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:932\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).fineGrainedBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:737"]

[2022/11/16 13:35:05.544 +08:00] [WARN] [client.go:922] ["reset the connection in handleFineGrained"] [range-sn=5704] [storeID=50288811]

[2022/11/16 13:35:05.544 +08:00] [ERROR] [client.go:932] ["failed to send fine-grained backup"] [range-sn=5704] [storeID=50288811] [error="failed to reset backup connection on store:50288811 please check the tikv status: context canceled"] [stack="github.com/pingcap/tidb/br/pkg/backup.(*Client).handleFineGrained\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:932\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).fineGrainedBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:737"]

[2022/11/16 13:35:05.545 +08:00] [WARN] [client.go:922] ["reset the connection in handleFineGrained"] [range-sn=5764] [storeID=50288811]

[2022/11/16 13:35:05.545 +08:00] [ERROR] [client.go:932] ["failed to send fine-grained backup"] [range-sn=5764] [storeID=50288811] [error="failed to reset backup connection on store:50288811 please check the tikv status: context canceled"] [stack="github.com/pingcap/tidb/br/pkg/backup.(*Client).handleFineGrained\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:932\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).fineGrainedBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:737"]

[2022/11/16 13:35:05.545 +08:00] [INFO] [client.go:588] ["backup range finished"] [range-sn=5764] [take=6m34.561498857s]

[2022/11/16 13:35:05.545 +08:00] [INFO] [client.go:574] ["Backup Ranges"] [take=164ns]

[2022/11/16 13:35:05.546 +08:00] [WARN] [client.go:922] ["reset the connection in handleFineGrained"] [range-sn=5704] [storeID=50288811]

[2022/11/16 13:35:05.546 +08:00] [ERROR] [client.go:932] ["failed to send fine-grained backup"] [range-sn=5704] [storeID=50288811] [error="failed to reset backup connection on store:50288811 please check the tikv status: context canceled"] [stack="github.com/pingcap/tidb/br/pkg/backup.(*Client).handleFineGrained\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:932\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).fineGrainedBackup.func2\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:737"]

[2022/11/16 13:35:05.546 +08:00] [INFO] [client.go:768] ["[pd] stop fetching the pending tso requests due to context canceled"] [dc-location=global]

[2022/11/16 13:35:05.546 +08:00] [INFO] [client.go:706] ["[pd] exit tso dispatcher"] [dc-location=global]

[2022/11/16 13:35:05.547 +08:00] [INFO] [client.go:768] ["[pd] stop fetching the pending tso requests due to context canceled"] [dc-location=global]

[2022/11/16 13:35:05.547 +08:00] [INFO] [client.go:706] ["[pd] exit tso dispatcher"] [dc-location=global]

[2022/11/16 13:35:05.547 +08:00] [INFO] [metafile.go:592] ["exit write metas by context done"]

[2022/11/16 13:35:05.567 +08:00] [INFO] [collector.go:204] ["units canceled"] [cancel-unit=3]

[2022/11/16 13:35:05.914 +08:00] [INFO] [collector.go:70] ["Database Backup failed summary"] [total-ranges=13484] [ranges-succeed=0] [ranges-failed=13484] [backup-total-ranges=19249] [unit-name="range start:748000000000010f135f69800000000000000a00 end:748000000000010f135f69800000000000000afb"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:155\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:192\ngithub.com/pingcap/tidb/br/pkg/utils.WithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/retry.go:51\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:189\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRange\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:600\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).BackupRanges.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:562\ngithub.com/pingcap/tidb/br/pkg/utils.(*WorkerPool).ApplyOnErrorGroup.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/utils/worker.go:73\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\t/go/pkg/mod/golang.org/x/sync@v0.0.0-20220513210516-0976fa681c29/errgroup/errgroup.go:74\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571"] [unit-name="range start:7480000000000044df5f69800000000000000700 end:7480000000000044df5f698000000000000007fb"] [error="rpc error: code = Canceled desc = context canceled"] [errorVerbose="rpc error: code = Canceled desc = context canceled\ngithub.com/tikv/pd/client.(*client).GetAllStores\n\t/go/pkg/mod/github.com/tikv/pd/client@v0.0.0-20220307081149-841fa61e9710/client.go:1596\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStores\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:155\ngithub.com/pingcap/tidb/br/pkg/conn.GetAllTiKVStoresWithRetry.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/conn/conn.go:192\ngithub.com/pingcap/tidb/br/pkg/utils.WithRetry\n\t/home/

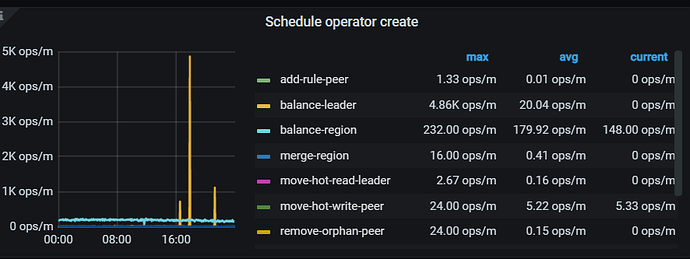

The screenshot time and clinic collection time do not match.

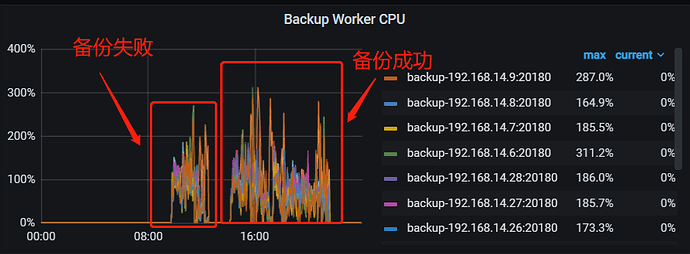

If we “assume” the issue is caused by the same reason, the backup worker of IP-24 is running the highest, just look at this:

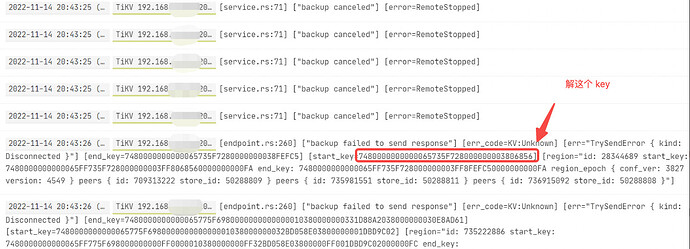

Regarding the backup failure, the first thing found in the collected time is that the TiKV service failed to send a response to BR. This indicates that at 2022 @ 13:13:22.781, the backup request could not receive a message.

As for what action caused the BR side to disconnect, there are no corresponding BR logs at that time point, so it cannot be determined.

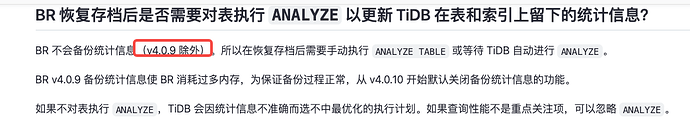

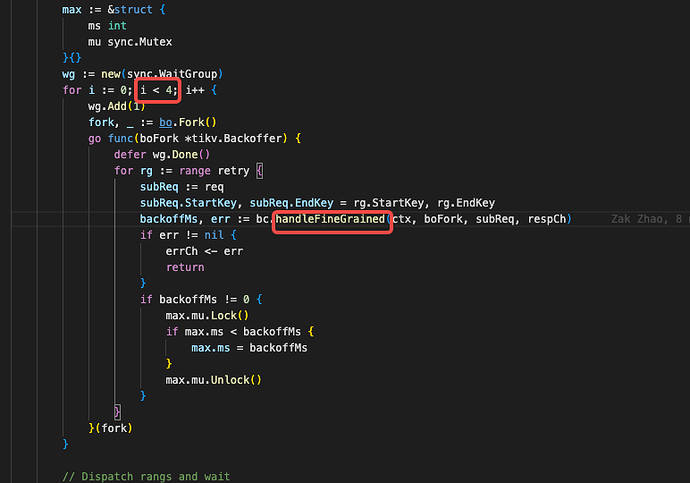

Regarding txnLockFast backoffer.maxSleep 80000ms is exceeded, the entry point is here → tidb/br/pkg/backup/client.go at 5263a0abda61f102122735049fd0dfadc7b7f8b2 · pingcap/tidb · GitHub fine-grained-range-start in the logs, which will then lock onto the range’s startkey and endkey.

There are a few points that cannot be explained now:

Why the TiKV backup response failed to send back to BR, there are no more logs to explain.

Which table’s range is being retried by this backoff, and it may not necessarily be due to unlock failure. The code defaults to backup request not returning as txnlockfast. We need to check the BR action logs before txnLockFast backoffer.maxSleep 80000ms is exceeded.

After taking a closer look, it seems there is still txnLockFast.

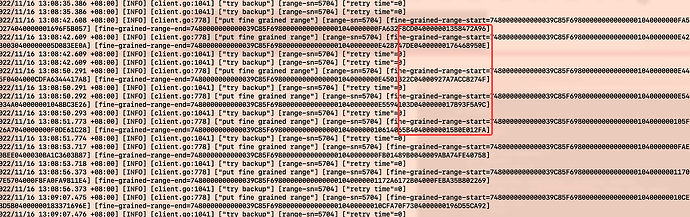

These 4 goroutines reported under failed to send fine-grained backup are part of an intermediate process of the backup crash.cat full_20221116.log|grep “range-sn=5704”|less yields the following. 5704 represents the 5704th table (including index …).

Why did it crash? Fine-grained is the feedback from tikv’s rangeTree retry request, which is the remaining range in the 5704th table. You can see the range keeps changing.

There were no network issues around this time, ping and tcp_retrans …, so it should still be a timeout issue. The retry time is hardcoded, so there’s no way to bypass it.

backupFineGrainedMaxBackoff = 80000

backupRetryTimes = 5

Why can’t it be found in the logs? I’m not sure if read-write conflict errors will be exposed on the tikv side. They will be exposed on the tidb side, but BR does not request TiDB.

However, one solution is to look at the slow_log from 80 seconds before 2022/11/16 13:35:05.546 until 2022/11/16 13:35:05.546 for any uncommitted transactions. Then, identify the table involved in that transaction which corresponds to the 5704 range-sn table-name. If lucky, you can find it, identify the business, and then stagger the backup.

Oh, actually there is a key in the log, but it wasn’t found.

The log only exposes the primary_lock keyword. Previously, I only searched for the tikv with ip-14.24.

Let’s decode the primary-key below (the conflict on ip-14.24 might be the secondary-key in the txn

Auto

Documents

Time cluster_id component message

Nov 16, 2022 @ 13:57:56.027 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F6980000000000000020380000000000044AD lock_version: 437407783577190475 key: 7480000000000000155F698000000000000001040611FC0FCA1C004B03800000000028BEA1 lock_ttl: 3003 txn_size: 2 lock_for_update_ts: 437407783577190475 min_commit_ts: 437407783577190476"]

Nov 16, 2022 @ 13:55:02.477 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000079675F698000000000000005038000000000000002037FFFFFFFFFFFFFFF03800000000000000003800000000000001E0419AE60C97400000003800000000328A9C3 lock_version: 437407737937920025 key: 7480000000000079675F698000000000000006038000000000000002037FFFFFFFFFFFFFFF0003800000000000001E0419AE60C97400000003800000000328A9C3 lock_ttl: 3001 txn_size: 1 min_commit_ts: 437407737937920026"]

Nov 16, 2022 @ 13:53:56.028 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F698000000000000002038000000000003843 lock_version: 437407720662630479 key: 7480000000000000155F698000000000000001040611FC01241C004F03800000000024A218 lock_ttl: 3004 txn_size: 4 lock_for_update_ts: 437407720662630479 min_commit_ts: 437407720662630480"]

Nov 16, 2022 @ 13:47:56.018 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000175F7280000000010C0BDE lock_version: 437407626303897605 key: 7480000000000000175F7280000000010C0BDE lock_ttl: 3003 txn_size: 4 min_commit_ts: 437407626303897606"]

Nov 16, 2022 @ 13:46:56.035 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000175F728000000001156EB6 lock_version: 437407610575257606 key: 7480000000000000175F728000000001156EB6 lock_ttl: 5661 txn_size: 27 min_commit_ts: 437407610575257607"]

Nov 16, 2022 @ 13:46:56.035 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000175F728000000001156EB6 lock_version: 437407610575257606 key: 7480000000000000175F728000000001156ED7 lock_ttl: 5661 txn_size: 12 min_commit_ts: 437407610575257607"]

Nov 16, 2022 @ 13:46:56.028 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F698000000000000002038000000000004376 lock_version: 437407610562150456 key: 7480000000000000155F698000000000000001040611FBE7819C0038038000000000258C4F lock_ttl: 3005 txn_size: 4 lock_for_update_ts: 437407610562150456 min_commit_ts: 437407610562150457"]

Nov 16, 2022 @ 13:45:56.029 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F698000000000000002038000000000003C2F lock_version: 437407594833510478 key: 7480000000000000155F72800000000028C06C lock_ttl: 20001 txn_size: 2 lock_for_update_ts: 437407594833510478 min_commit_ts: 437407594833510479"]

Nov 16, 2022 @ 13:40:03.213 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000079675F69800000000000000503800000000000000203800000000000000003800000000000000003800000000000001E0419AE60C98700000003800000000328B394 lock_version: 437407502204928044 key: 7480000000000079675F69800000000000000503800000000000000203800000000000000003800000000000000003800000000000001E0419AE60C98700000003800000000328B394 lock_ttl: 3001 txn_size: 1 lock_type: Del min_commit_ts: 437407502204928045"]

Nov 16, 2022 @ 13:39:56.020 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000175F72800000000115ED65 lock_version: 437407500474777607 key: 7480000000000000175F72800000000115ED6C lock_ttl: 3360 txn_size: 5 min_commit_ts: 437407500474777608"]

Nov 16, 2022 @ 13:24:56.034 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000175F72800000000112A837 lock_version: 437407264545177607 key: 7480000000000000175F72800000000112A83F lock_ttl: 3514 txn_size: 2 min_commit_ts: 437407264545177608"]

Nov 16, 2022 @ 13:11:21.789 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F698000000000000002038000000000004371 lock_version: 437407048158412856 key: 7480000000000000155F72800000000028731E lock_ttl: 25945 txn_size: 66 lock_type: Del lock_for_update_ts: 437407051055104097 min_commit_ts: 437407051055104098"]

Nov 16, 2022 @ 13:11:21.765 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F698000000000000002038000000000004371 lock_version: 437407048158412856 key: 7480000000000000155F698000000000000001040611FB63BE5C009B03800000000028731E lock_ttl: 25945 txn_size: 2 lock_type: Del lock_for_update_ts: 437407051055104097 min_commit_ts: 437407051055104098"]

Nov 16, 2022 @ 13:11:21.122 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F6980000000000000020380000000000033D5 lock_version: 437407050897817694 key: 7480000000000000155F698000000000000001040611FB65323C0015038000000000260170 lock_ttl: 3006 txn_size: 6 lock_type: Del lock_for_update_ts: 437407050897817694 min_commit_ts: 437407050897817695"]

Nov 16, 2022 @ 13:09:36.770 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F69800000000000000203800000000000354C lock_version: 437407023477555226 key: 7480000000000000175F7280000000010EF298 lock_ttl: 20039 txn_size: 1 lock_type: Del lock_for_update_ts: 437407023490662403 min_commit_ts: 437407023490662404"]

Nov 16, 2022 @ 13:09:36.121 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F69800000000000000203800000000000354C lock_version: 437407023307161751 key: 7480000000000000155F7280000000002A6AB6 lock_ttl: 20002 txn_size: 3 lock_type: Del lock_for_update_ts: 437407023320268952 min_commit_ts: 437407023320268953"]

Nov 16, 2022 @ 13:09:36.113 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F69800000000000000203800000000000354C lock_version: 437407023307161751 key: 7480000000000000155F698000000000000001040611FB5EC104002A0380000000002A6AB6 lock_ttl: 3164 txn_size: 3 lock_type: Del lock_for_update_ts: 437407023320268952 min_commit_ts: 437407023320268953"]

Nov 16, 2022 @ 13:02:56.027 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000000155F698000000000000002038000000000004371 lock_version: 437406918501990522 key: 7480000000000000155F698000000000000001040611FB461564005D038000000000258C4F lock_ttl: 3004 txn_size: 4 lock_type: Del lock_for_update_ts: 437406918501990522 min_commit_ts: 437406918501990523"]

I decoded these keys, and apart from the system tables mysql.stats_meta and mysql.stats_histograms, there is only one business table, but this table is not related to the range-sn table’s business

These are the business table entries:

Nov 16, 2022 @ 13:55:02.477 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000079675F698000000000000005038000000000000002037FFFFFFFFFFFFFFF03800000000000000003800000000000001E0419AE60C97400000003800000000328A9C3 lock_version: 437407737937920025 key: 7480000000000079675F698000000000000006038000000000000002037FFFFFFFFFFFFFFF0003800000000000001E0419AE60C97400000003800000000328A9C3 lock_ttl: 3001 txn_size: 1 min_commit_ts: 437407737937920026"]

Nov 16, 2022 @ 13:40:03.213 6801054821581547599 tikv [endpoint.rs:621] [error-response] [err="Key is locked (will clean up) primary_lock: 7480000000000079675F69800000000000000503800000000000000203800000000000000003800000000000000003800000000000001E0419AE60C98700000003800000000328B394 lock_version: 437407502204928044 key: 7480000000000079675F69800000000000000503800000000000000203800000000000000003800000000000000003800000000000001E0419AE60C98700000003800000000328B394 lock_ttl: 3001 txn_size: 1 lock_type: Del min_commit_ts: 437407502204928045"]

Keys corresponding to the table:

{"index_id":6,"index_vals":{"audio_status":null,"createtime":"2022-11-16 12:37:52","download_status":"-1","priority":"30","type":"2"},"table_id":31079}

{"index_id":5,"index_vals":{"createtime":"2022-11-16 12:38:07","download_status":"0","frame_status":"0","priority":"30","type":"2"},"table_id":31079}

Keys corresponding to the range-sn 5704 table:

{"index_id":1,"index_vals":{"fid":"59918113182","uid":"39236972323528"},"table_id":14792}

Searched the slow logs from 12:30:00 to 14:00:00, but did not find any SQL involving the range-sn 5704 table.

Recently, after expanding TiKV, we no longer encountered failures due to lock conflicts when using BR for backups.

This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.