Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: v6.4.0版本的TiKV日志备份功能,在集群模式下测试发现一些问题。

【TiDB Usage Environment】

Testing

【TiDB Version】

v6.4.0

【Reproduction Path】

【Encountered Issues: Problem Phenomenon and Impact】

Through testing, the following issues were found in TiDB version v6.4.0 that supports log backup:

- Cluster log backup to local failed, only the backup directory was generated but no backup files were produced. Single node backup to local works fine.

- Six-node cluster, 3 PD 6 TiKV 6 TiDB, stopping one TiKV node, the log backup gap will increase slightly (about five minutes), but it can be caught up within ten minutes, and after catching up, there will be no significant gap increase. Full + incremental recovery works fine.

- Six-node cluster, 3 PD 6 TiKV 6 TiDB, stopping two TiKV nodes, the log backup gap keeps accumulating and could not be caught up even after about two and a half hours (preliminary judgment is that it cannot be caught up).

- During normal log backup, shutting down external storage ceph node, log backup shows no errors, but the gap keeps accumulating, and backup files are normally output to local cache. After ceph node is turned back on, the gap can be caught up. (ceph shutdown for ten minutes)

【Resource Configuration】

6 cloud hosts, configuration 2C4G Disk 50G

【Attachments: Screenshots/Logs/Monitoring】

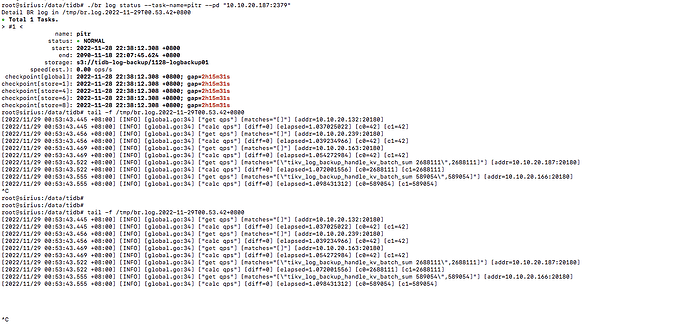

The environment has been removed, only the terminal of issue point 3 can capture the log backup task information:

I didn’t understand… What issue are you trying to address…

Haha, there are actually three questions I want to express:

- How to manage log backup tasks? For example, how to determine if there is an exception?

- If a cluster node is abnormal (tikv down) but the cluster business read and write are normal, will the log backup be abnormal (gap not caught up for two and a half hours)?

- Does the current version still not support backing up tikv logs of the cluster to the local?

Provide the deployment details and check what was running on the machine that was shut down.

I’ll just quietly watch the experts chat.

The key point is, I still don’t understand what issue you’re trying to express

The deployment was done directly by downloading the binary package from the official website, and the configurations were not modified. The machines that were shut down were running pd+tikv+tidb and tidb+tikv respectively.

I just want to express that the PITR feature in v6.4.0 is still unstable, right? Should we wait for a more stable version to see if these issues can be fixed?

You almost made me forget what my core question was

The problem has actually been listed above

6.4 is not LTS, it is DMR.

If you want to go to production, currently 6.1 LTS is recommended.

For POC, this version is also suggested. But if you want to experience the features, 6.4 is fine, just wait a bit longer for production.

I guess you want to experiment with the backup and restore process. TiKV itself supports scheduling with multiple replicas, which can achieve replica balancing as long as there are enough nodes.

The illustration describes the backup and restore process. You can refer to the documentation to simulate it.

I’m a newbie, seeking guidance!

Yes, to be precise, it’s about experimenting with full backups and log backups to achieve PITR.

I should have asked my core question right away

How long will it take for PITR to be ready for production? I see that v6.4.0 just came out on the 17th of this month…

Wait for the next LTS.

This time might be delayed. As for the release version, it still depends on whether some issues have been fixed.

Can you forward the question I mentioned above? Can you also fix it, haha~

The delay shouldn’t be until next year, right?

Oh, to clarify, during my testing, it was data read and write for a single database and single table.

Wait for PITR GA. It seems that it hasn’t reached GA yet, and there are still quite a few issues.

There are two modes: snapshot and log replay. I suggest you test it again.

Single database and single table are not the issue here.

The key is whether this process can meet your expectations.