Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: rewriteBatchedStatements参数导致无Queries Using Plan Cache OPS 命中

[TiDB Usage Environment] Test

[TiDB Version]

V6.1.2

[Encountered Problem]

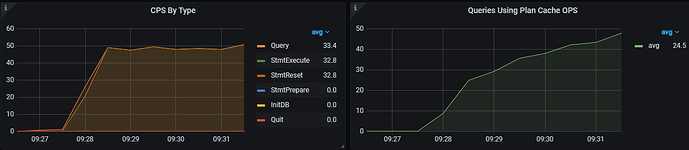

The application scenario involves batch deleting data and then batch writing data using Jdbc’s batch processing. During testing on TiDB, it was found that if rewriteBatchedStatements=true is not used, the write speed is very slow. However, in the monitoring, the [Queries Using Plan Cache OPS] item can hit the cache, and the [CPS By Type] item is StmtExecute, with almost no Query.

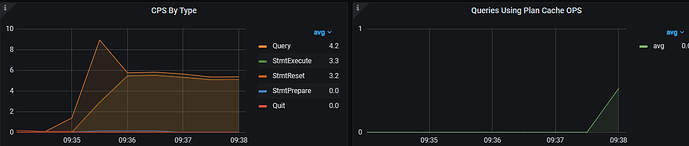

If rewriteBatchedStatements=true is used, the write speed can be significantly improved, but the [Queries Using Plan Cache OPS] item in the monitoring is always 0, unable to hit the cache, and the [CPS By Type] item is all Query.

Is it because the rewritten insert…values statement cannot be cached?

[Phenomenon Update]

I suspect that the batch size is too large, causing the rewritten SQL to exceed prepStmtCacheSqlLimit, resulting in no cache hits. When the batch size is reduced to 10 SQLs per batch and rerun, it can hit [Queries Using Plan Cache OPS]. However, when the batch size is increased to 100, no matter how large prepStmtCacheSqlLimit is set, it cannot hit [Queries Using Plan Cache OPS].

Is there a limit on the length of batch statements on the TiDB server side?

[Test 2]

The specific JDBC connection string is as follows:

jdbc:mysql://11.139.35.194:4000/busiData?useServerPrepStmts=true&cachePrepStmts=true&prepStmtCacheSize=1000&prepStmtCacheSqlLimit=2147483647&rewriteBatchedStatements=true&useConfigs=maxPerformance&allowMultiQueries=true

prepStmtCacheSqlLimit is already Integer.MAX_VALUE, and it has been set to a larger value 20480000000000000000000, but it still has no effect.

Database Related Parameters

tidb_prepared_plan_cache_size: 100

tidb_ignore_prepared_cache_close_stmt: ON

Ran two tests with batchSize=10 and 100 respectively.

This test scenario involves batch inserting data into two tables, one with 56 fields and the other with 146 fields. It is speculated that the batch insert statement will be very large, but it is unclear where to find the rewritten statement.

However, the client did not receive any max_allowed_packet related errors, and all data was successfully inserted.

When batchSize=10, monitoring chart:

When batchSize=100, monitoring chart:

Could not find the Plan Cache Memory Usage monitoring chart in TiDB → Executor monitoring.

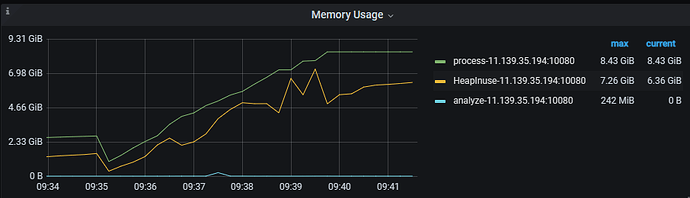

When batchSize=100, Memory Usage monitoring shows memory rising quickly.

What could be the issue? How should I troubleshoot it? Thanks for the help!