Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: TiDB混搭设置

[TiDB Usage Environment] Production Environment / Testing

[TiDB Version] v5.1.0

[Encountered Problem: Phenomenon and Impact] Currently, the production environment TiDB is preparing for expansion. Previously, the expansion was deployed using virtual machines with very low IOPS. Now, we are preparing to use a hybrid direct-attached disk method for expansion. This time, we are preparing to expand with 5 TiKV, 1 TiDB, and 1 TiFlash. The questions are as follows:

-

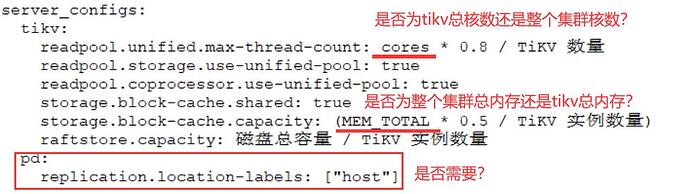

How should the following parameters be set?

混合部署拓扑 | PingCAP 文档中心

-

Does anyone have a complete reference document for installing and setting up numactl?

TiDB 环境与系统配置检查 | PingCAP 归档文档站

For PD and TiDB, use virtual machines with high disk I/O, or directly use physical machines.

The first and second are the number of cores and memory of the machine where your components are deployed. The third label is for tagging. If you have related architectural requirements that need tagging in the future, you can add it; otherwise, you can skip it. The main thing with mixed deployment is to plan the machine resource allocation well to avoid resource contention.

Are you planning to redeploy an environment or scale out TiKV, TiDB, and TiFlash nodes? Scaling out just requires the scale-out command and doesn’t need these parameters. These parameters are key for multi-instance on a single machine. Did you originally have just one physical machine and are now adding so many nodes on it?

Forget about these parameters, just scale up directly.

I originally deployed on virtual machines, with 3 TiDB, 3 TiPD, 6 TiKV, and 2 TiFlash. Currently, the servers prepared for expansion are physical servers, and I plan to use a hybrid deployment (multiple instances on a single machine). I am preparing to expand with 1 TiDB, 5 TiKV, and 1 TiFlash.

The key point is that the previous environment was deployed on virtual machines, and now the newly added servers are physical machines. We are planning to use a hybrid deployment method. If we continue to use the old method, we can expand without setting these parameters.

“The first and second refer to the number of cores and memory of the machines where your components are deployed.” Does this include the cores and memory of the entire cluster, including the existing and planned expansion servers? Or just the cores and memory of the new expansion servers? Also, could you please explain how to understand tagging?

Here’s how I understand it: You originally had 3 TiDB, 3 TiPD, 6 TiKV, and 2 TiFlash, totaling 14 virtual machines. Now you want to scale out with 1 physical machine, on which you plan to deploy 1 TiDB, 5 TiKV, and 1 TiFlash. Subsequently, you will have a mix of 14 virtual machines and 1 physical machine. Assuming the physical machine you are scaling out to has 48 cores and 192GB of RAM, I suggest setting the readpool.unified.max-thread-count to 7 for each TiKV node and setting storage.block-cache.capacity to 15GB (since you also need to deploy 1 TiDB and 1 TiFlash). You don’t need to configure PD parameters; you can directly use scale-out to expand with 1 TiDB, 5 TiKV, and 1 TiFlash.

I have another question. My original environment uses XFS disk partitions. Now, through FIO testing, I found that EXT4 performs better. So, I plan to use the EXT4 format for the new servers. This means the cluster will have two different partition formats. I wonder if there will be any conflicts.

I don’t think there’s a conflict. Although the newly expanded nodes using ext4 are indeed a bit faster, the data running on your old nodes will still be slow. It seems like the running speed is still being dragged down by the old nodes because it’s impossible for all your data to be on the new nodes…

This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.