Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tidb 请求已经缩掉的kv (TiDB requests a KV node that has already been scaled in)

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version]

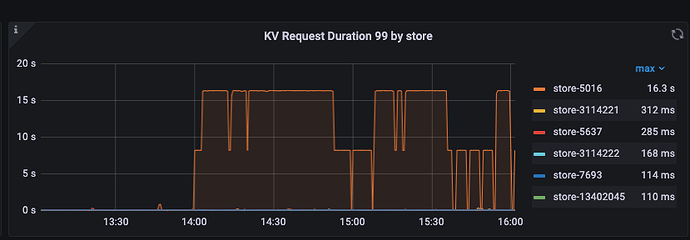

TiDB 4.0.14. I removed a KV node, and it no longer shows up in pd-ctl. Why is TiDB still sending requests to this offline KV?

[Reproduction Path] What operations were performed to cause the issue

[Encountered Issue: Symptoms and Impact]

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

When was the scale-down completed? Use tiup cluster display to check.

So how did you scale down?

First, I evicted the leaders from the store, then executed pd-ctl store delete 5016. After waiting for the KV to become a tombstone, I executed pd-ctl store remove-tombstone.
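Before removing the tombstone, it can help to confirm what PD actually reports for the store. The following is a minimal sketch, not an official tool: it assumes the JSON printed by pd-ctl store has a top-level "stores" list whose entries carry "store.id" and "store.state_name", and the helper name store_state and the sample data are hypothetical.

```python
import json


def store_state(pd_store_output: str, store_id: int):
    """Return the state_name PD reports for store_id, or None if it is not listed."""
    data = json.loads(pd_store_output)
    for entry in data.get("stores", []):
        store = entry.get("store", {})
        if store.get("id") == store_id:
            return store.get("state_name")
    return None


# Hypothetical sample shaped like the assumed pd-ctl store output; not real cluster data.
sample = """
{"count": 2, "stores": [
  {"store": {"id": 1, "address": "10.0.0.1:20160", "state_name": "Up"}},
  {"store": {"id": 5016, "address": "10.0.0.9:20160", "state_name": "Tombstone"}}
]}
"""

print(store_state(sample, 5016))  # state of the store being scaled in
print(store_state(sample, 42))    # a store ID that PD does not list
```

A result of None means PD no longer lists the store. Any remaining requests from TiDB would then point at something cached outside this store list.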

This approach may leave the cluster information out of date, so TiDB keeps sending requests to the removed store, and those requests can take a long time. It is recommended to use TiUP for scaling in and out: Scale a TiDB Cluster Using TiUP | PingCAP archived documentation site

What specific command was executed, and what was the error reported? It seems like the region information in the PD cache hasn’t been updated.

I have restarted both PD and TiDB. It didn't help.

Check out this article by Binbin.

I replaced all the TiDB nodes through scaling up and down, and then restored them. Also, when the TiDB layer encounters a region miss during a query, it will re-fetch the region information from PD, right? Could it be that the fetch was unsuccessful? If it was successful, why does it still request the KV that has already been removed next time?

But on my side, I waited until it became a tombstone, rather than using --force to forcibly take it offline.

Monitoring also shows that the store's region count had dropped to zero before it was removed.

Could it be that there is an unaddressed bug related to the region cache?

Have any corresponding changes been made in the configuration file? Check if you need to comment out a kv node in the configuration file.