Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: TiFlash fails to start on k8s

[TiDB Usage Environment] Test/PoC

[TiDB Version] 6.1.0

[Reproduction Path]

Add a TiFlash node to the existing TiDB cluster through the operator

[Encountered Issue: Symptoms and Impact]

TiFlash failed to start and could not join the cluster

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

Our TiDB cluster has a password set, but as I understand it, that should not affect this.

Operator config:

tiflash:
  baseImage: pingcap/tiflash
  maxFailoverCount: 0
  replicas: 1
  storageClaims:
    - resources:
        requests:
          storage: {{ .Values.tiflash.storageSize }}
      storageClassName: shared-ssd-storage
  requests:
    memory: "48Gi"
    cpu: "32"
  config:
    config: |
      [flash]
        [flash.flash_cluster]
          log = "/data0/logs/flash_cluster_manager.log"
      [logger]
        count = 10
        level = "information"
        errorlog = "/data0/logs/error.log"
        log = "/data0/logs/server.log"

Server log:

[2022/11/10 08:09:55.756 +00:00] [INFO] [<unknown>] ["Welcome to TiFlash"] [thread_id=1]
[2022/11/10 08:09:55.756 +00:00] [INFO] [<unknown>] ["Starting daemon with revision 54381"] [thread_id=1]
[2022/11/10 08:09:55.756 +00:00] [INFO] [<unknown>] ["TiFlash build info: TiFlash\nRelease Version: v6.1.0\nEdition: Community\nGit Commit Hash: ebf7ce6d9fb4090011876352fe26b89668cbedc4\nGit Branch: heads/refs/tags/v6.1.0\nUTC Build Time: 2022-06-07 11:55:49\nEnable Features: jemalloc avx avx512 unwind\nProfile: RELWITHDEBINFO\n"] [thread_id=1]
[2022/11/10 08:09:55.756 +00:00] [INFO] [<unknown>] ["Application:starting up"] [thread_id=1]
[2022/11/10 08:09:55.757 +00:00] [INFO] [Server.cpp:363] ["Application:Got jemalloc version: 5.2.1-0-gea6b3e973b477b8061e0076bb257dbd7f3faa756"] [thread_id=1]
[2022/11/10 08:09:55.757 +00:00] [INFO] [Server.cpp:372] ["Application:Not found environment variable MALLOC_CONF"] [thread_id=1]
[2022/11/10 08:09:55.757 +00:00] [INFO] [Server.cpp:378] ["Application:Got jemalloc config: opt.background_thread false, opt.max_background_threads 4"] [thread_id=1]
[2022/11/10 08:09:55.757 +00:00] [INFO] [Server.cpp:382] ["Application:Try to use background_thread of jemalloc to handle purging asynchronously"] [thread_id=1]
[2022/11/10 08:09:55.757 +00:00] [INFO] [Server.cpp:385] ["Application:Set jemalloc.max_background_threads 1"] [thread_id=1]
[2022/11/10 08:09:55.757 +00:00] [INFO] [Server.cpp:388] ["Application:Set jemalloc.background_thread true"] [thread_id=1]
[2022/11/10 08:09:55.757 +00:00] [INFO] [Server.cpp:452] ["Application:start raft store proxy"] [thread_id=1]
[2022/11/10 08:09:55.757 +00:00] [INFO] [Server.cpp:1025] ["Application:wait for tiflash proxy initializing"] [thread_id=1]
[2022/11/10 08:09:55.758 +00:00] [ERROR] [BaseDaemon.cpp:377] [BaseDaemon:########################################] [thread_id=3]
[2022/11/10 08:09:55.758 +00:00] [ERROR] [BaseDaemon.cpp:378] ["BaseDaemon:(from thread 2) Received signal Aborted(6)."] [thread_id=3]

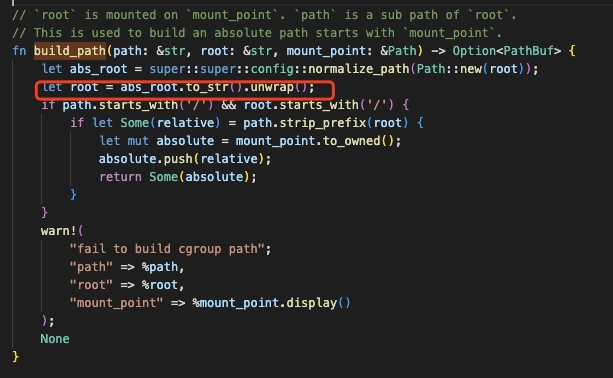

[2022/11/10 08:09:57.242 +00:00] [ERROR] [BaseDaemon.cpp:570] ["BaseDaemon:\n 0x1ed2661\tfaultSignalHandler(int, siginfo_t*, void*) [tiflash+32319073]\n \tlibs/libdaemon/src/BaseDaemon.cpp:221\n 0x7fe276364630\t<unknown symbol> [libpthread.so.0+63024]\n 0x7fe275da7387\tgsignal [libc.so.6+222087]\n 0x7fe275da8a78\t__GI_abort [libc.so.6+227960]\n 0x7fe278d134ea\tstd::sys::unix::abort_internal::haf36307751e7fe01 (.llvm.12916800362124319953) [libtiflash_proxy.so+34161898]\n 0x7fe278d0c6ba\trust_panic [libtiflash_proxy.so+34133690]\n 0x7fe278d0c329\tstd::panicking::rust_panic_with_hook::h9c6cab3d6f14fe6f [libtiflash_proxy.so+34132777]\n 0x7fe278d0bf60\tstd::panicking::begin_panic_handler::_$u7b$$u7b$closure$u7d$$u7d$::h227dbfeee564b658 [libtiflash_proxy.so+34131808]\n 0x7fe278d094e7\tstd::sys_common::backtrace::__rust_end_short_backtrace::h99435fb636049671 (.llvm.12916800362124319953) [libtiflash_proxy.so+34120935]\n 0x7fe278d0bce0\trust_begin_unwind [libtiflash_proxy.so+34131168]\n 0x7fe2779fb054\tcore::panicking::panic_fmt::h05626cefcc91481d [libtiflash_proxy.so+14139476]\n 0x7fe2779faec8\tcore::panicking::panic::h3bcdaac666fd7377 [libtiflash_proxy.so+14139080]\n 0x7fe2794ccc68\ttikv_util::sys::cgroup::build_path::h8c8024b5396924b2 [libtiflash_proxy.so+42261608]\n 0x7fe2794ca19e\ttikv_util::sys::cgroup::CGroupSys::cpuset_cores::h01751adb3bcda1d8 [libtiflash_proxy.so+42250654]\n 0x7fe2794cdec6\ttikv_util::sys::SysQuota::cpu_cores_quota::h8c8d162fbefeb10a [libtiflash_proxy.so+42266310]\n 0x7fe2792b8186\t_$LT$tikv..server..config..Config$u20$as$u20$core..default..Default$GT$::default::hdfa398ba89abecb8 [libtiflash_proxy.so+40079750]\n 0x7fe279444d41\t_$LT$tikv..server..config.._IMPL_DESERIALIZE_FOR_Config..$LT$impl$u20$serde..de..Deserialize$u20$for$u20$tikv..server..config..Config$GT$..deserialize..__Visitor$u20$as$u20$serde..de..Visitor$GT$::visit_map::h5f2ea3cbdf936511 [libtiflash_proxy.so+41704769]\n 0x7fe2793b7448\t_$LT$tikv..config.._IMPL_DESERIALIZE_FOR_TiKvConfig..$LT$impl$u20$serde..de..Deserialize$u20$for$u20$tikv..config..TiKvConfig$GT$..deserialize..__Visitor$u20$as$u20$serde..de..Visitor$GT$::visit_map::h33ab3cb55aa8dbcf [libtiflash_proxy.so+41124936]\n 0x7fe2791219e2\ttikv::config::TiKvConfig::from_file::h576988465b4da7d2 [libtiflash_proxy.so+38414818]\n 0x7fe2784350ba\tserver::proxy::run_proxy::he9e2085225ada8f8 [libtiflash_proxy.so+24862906]\n 0x1d694ff\tDB::RaftStoreProxyRunner::runRaftStoreProxyFFI(void*) [tiflash+30840063]\n \tdbms/src/Server/Server.cpp:462\n 0x7fe27635cea5\tstart_thread [libpthread.so.0+32421]"] [thread_id=3]
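Reading the backtrace bottom-up: thread 2 runs the raft store proxy (`RaftStoreProxyRunner::runRaftStoreProxyFFI`), which calls `TiKvConfig::from_file` (presumably on `/data0/proxy.toml` from `[flash.proxy]`). While deserializing, it builds the default server `Config`, which calls `SysQuota::cpu_cores_quota` → `CGroupSys::cpuset_cores` → `tikv_util::sys::cgroup::build_path`, and the Rust panic that aborts the process is raised there. So the crash happens while the proxy reads the container's cgroup information, not while connecting to TiDB or PD. The sketch below is a hypothetical diagnostic, not TiKV's code, and the helper names are made up: assuming cgroup v1, it reads `/proc/self/mountinfo` and `/proc/self/cgroup` and checks whether the cpuset cgroup path lies under the mount root, since a mismatch there is one plausible way a path-building step like this could fail.

```python
"""Hypothetical diagnostic: does the cpuset cgroup path sit under its mount root?"""
from pathlib import PurePosixPath
from typing import Optional, Tuple


def find_cgroup_v1_mount(mountinfo: str, controller: str) -> Optional[Tuple[str, str]]:
    """Return (root, mount_point) of the cgroup v1 hierarchy carrying `controller`.

    mountinfo lines look like:
    ID PARENT MAJ:MIN ROOT MOUNT_POINT OPTS [OPTIONAL...] - FSTYPE SOURCE SUPER_OPTS
    """
    for line in mountinfo.splitlines():
        if " - " not in line:
            continue
        before, after = line.split(" - ", 1)
        head, tail = before.split(), after.split()
        if len(head) < 5 or len(tail) < 3:
            continue
        fs_type, super_opts = tail[0], tail[2]
        if fs_type == "cgroup" and controller in super_opts.split(","):
            return head[3], head[4]
    return None


def find_cgroup_path(proc_cgroup: str, controller: str) -> Optional[str]:
    """Return the path for `controller` from /proc/self/cgroup lines (ID:CONTROLLERS:PATH)."""
    for line in proc_cgroup.splitlines():
        parts = line.split(":", 2)
        if len(parts) == 3 and controller in parts[1].split(","):
            return parts[2]
    return None


def resolve_cgroup_dir(mount_root: str, mount_point: str, cgroup_path: str) -> Optional[str]:
    """Map a cgroup path to a directory under the mount point.

    Returns None when the path is not below the mount root, i.e. the case in
    which a plain prefix strip of the root cannot produce a valid directory.
    """
    try:
        relative = PurePosixPath(cgroup_path).relative_to(PurePosixPath(mount_root))
    except ValueError:
        return None
    return str(PurePosixPath(mount_point) / relative)


def main() -> None:
    try:
        with open("/proc/self/mountinfo") as f:
            mountinfo = f.read()
        with open("/proc/self/cgroup") as f:
            proc_cgroup = f.read()
    except OSError:
        print("/proc is not available (not running on Linux?)")
        return
    mount = find_cgroup_v1_mount(mountinfo, "cpuset")
    path = find_cgroup_path(proc_cgroup, "cpuset")
    print("cpuset mount (root, mount point):", mount)
    print("cpuset cgroup path:", path)
    if mount is None or path is None:
        print("no cgroup v1 cpuset entry found (cgroup v2 host?)")
        return
    print("resolved directory:", resolve_cgroup_dir(mount[0], mount[1], path))


if __name__ == "__main__":
    main()
```

If the resolver prints `None` for a container scheduled like the TiFlash pod, that would be consistent with where the backtrace panics, but the log alone does not confirm this is the cause.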

Config in the pod:

default_profile = "default"
display_name = "TiFlash"
http_port = 8123
interserver_http_port = 9009
listen_host = "0.0.0.0"
mark_cache_size = 5368709120
minmax_index_cache_size = 5368709120
path = "/data0/db"
path_realtime_mode = false
tcp_port = 9000
tmp_path = "/data0/tmp"

[application]
runAsDaemon = true

[flash]
compact_log_min_period = 200
overlap_threshold = 0.6
service_addr = "0.0.0.0:3930"
tidb_status_addr = "test-tidb.tidbstore.svc:10080"

[flash.flash_cluster]
cluster_manager_path = "/tiflash/flash_cluster_manager"
log = "/data0/logs/flash_cluster_manager.log"
master_ttl = 60
refresh_interval = 20
update_rule_interval = 10

[flash.proxy]
addr = "0.0.0.0:20170"
advertise-addr = "test-tiflash-0.test-tiflash-peer.tidbstore.svc:20170"
config = "/data0/proxy.toml"
data-dir = "/data0/proxy"

[logger]
count = 10
errorlog = "/data0/logs/error.log"
level = "information"
log = "/data0/logs/server.log"
size = "100M"

[profiles]

[profiles.default]
load_balancing = "random"
max_memory_usage = 10000000000
use_uncompressed_cache = 0

[profiles.readonly]
readonly = 1

[quotas]

[quotas.default]

[quotas.default.interval]
duration = 3600
errors = 0
execution_time = 0
queries = 0
read_rows = 0
result_rows = 0

[raft]
kvstore_path = "/data0/kvstore"
pd_addr = "test-pd.tidbstore.svc:2379"
storage_engine = "dt"

[status]
metrics_port = 8234

[users]

[users.default]
password = ""
profile = "default"
quota = "default"

[users.default.networks]
ip = "::/0"

[users.readonly]
password = ""
profile = "readonly"
quota = "default"

[users.readonly.networks]
ip = "::/0"
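Comparing this config with the `config: |` block in the operator config above: every key from that block appears here with the same value, while keys such as `[logger] size`, `master_ttl` and `service_addr` are added, presumably by the operator. A minimal sketch of that relationship, assuming a plain recursive merge in which user-supplied keys override generated ones. This is a simplified model, not TiDB Operator's actual code, and the "generated" values are only a subset of keys that appear in the rendered config but not in the CR fragment.

```python
from copy import deepcopy
from typing import Any, Dict


def deep_merge(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Merge two TOML-like tables into a new dict.

    Nested tables are merged key by key; any non-table value in `overrides`
    replaces the default. Neither input is modified.
    """
    merged = deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Assumed "generated" keys: present in the pod config above, absent from the CR fragment.
generated_defaults: Dict[str, Any] = {
    "flash": {
        "service_addr": "0.0.0.0:3930",
        "flash_cluster": {"master_ttl": 60, "refresh_interval": 20},
    },
    "logger": {"size": "100M"},
}

# The TOML fragment from the operator's `config: |` block, written as a dict.
user_fragment: Dict[str, Any] = {
    "flash": {"flash_cluster": {"log": "/data0/logs/flash_cluster_manager.log"}},
    "logger": {
        "count": 10,
        "level": "information",
        "errorlog": "/data0/logs/error.log",
        "log": "/data0/logs/server.log",
    },
}

rendered = deep_merge(generated_defaults, user_fragment)
print(rendered["logger"])
```

Keeping the merge recursive is what lets a partial `[logger]` table override only the keys it names instead of replacing the whole table.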