Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Upgrading TiDB from 5.4 to 6.1.2 with tiup reports that node_exporter cannot be stopped

Bug Report


[TiDB Version]

TiDB 5.4, tiup 1.11.1

[Impact of the Bug]

The upgrade process cannot be completed successfully. Attempted to stop all node_exporter nodes but still failed.

[Possible Steps to Reproduce]

[Observed Unexpected Behavior]

Failed to stop node_exporter

[Expected Behavior]

[Related Components and Specific Versions]

[Additional Background Information or Screenshots]

Upgrading component pd

Upgrading component tikv

Upgrading component tidb

Upgrading component prometheus

Upgrading component grafana

Upgrading component alertmanager

Stopping component node_exporter

Stopping instance 10.131.172.97

Stopping instance 10.131.188.16

Stopping instance 10.131.184.12

Stopping instance 10.131.176.240

Stopping instance 10.131.184.2

Stopping instance 10.131.172.76

Stopping instance 10.131.177.171

Stopping instance 10.131.177.177

Stopping instance 10.131.184.11

Stopping instance 10.131.188.12

Stopping instance 10.131.177.92

Stop 10.131.172.97 success

Stop 10.131.184.12 success

Stop 10.131.184.11 success

Stop 10.131.188.12 success

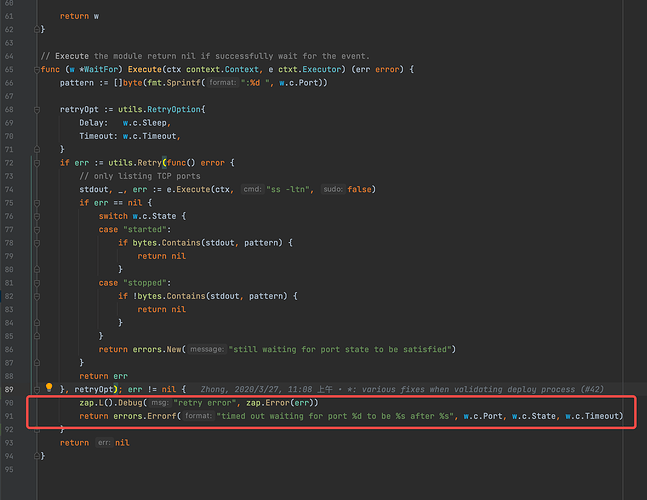

Error: failed to stop: 10.131.177.177 node_exporter-9100.service, please check the instance's log() for more detail.: timed out waiting for port 9100 to be stopped after 2m0s

Verbose debug logs have been written to /home/tidb/.tiup/logs/tiup-cluster-debug-2022-11-29-15-50-56.log.
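The error above says the port was still accepting connections when the two-minute wait ran out. The following is only a rough sketch of that kind of check, not tiup's actual implementation; the function name `wait_port_closed` and its arguments are made up for illustration. It relies on bash's `/dev/tcp` pseudo-device.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of tiup): return 0 once nothing accepts
# TCP connections on host:port, or 1 if the port is still open after
# `timeout` seconds (default 120, matching the 2m0s in the error).
wait_port_closed() {
  local host=$1 port=$2 timeout=${3:-120}
  local deadline=$(( $(date +%s) + timeout ))
  # The subshell succeeds only while a connection can still be opened.
  while (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; do
    if [ "$(date +%s)" -ge "$deadline" ]; then
      return 1
    fi
    sleep 1
  done
  return 0
}
```

Running `wait_port_closed 10.131.177.177 9100 10` from the control machine after stopping the service would show whether something is still listening on 9100, which would suggest a second node_exporter not managed by the `node_exporter-9100` unit.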

Using the logs, I located the corresponding position in the tiup source code; that is where the error keeps being reported.

Check how many node_exporter processes there are and the corresponding port usage.
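One possible way to do this check is sketched below. It assumes a Linux host with `ps` and `awk`; the function name `exporter_owners` is made up for illustration. It reads `ps -eo user,pid,args` output and prints the user and PID of every process whose executable is named `node_exporter`, which makes a second copy running under another user easy to spot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ps -eo user,pid,args` output on stdin and
# print "user pid" for each process whose executable is node_exporter.
exporter_owners() {
  awk '{
    # $3 is the executable path; compare only its last path component.
    n = split($3, parts, "/")
    if (parts[n] == "node_exporter") print $1, $2
  }'
}
```

Typical use would be `ps -eo user,pid,args | exporter_owners` on each node. For the port side, `sudo ss -lntp | grep ':9100 '` lists the process listening on 9100, assuming `ss` is installed.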

Troubleshooting step by step: initially there were two node_exporter processes. I stopped the one running as root, and then also stopped the one running as the tidb user.

tiup cluster exec cvs_db --sudo --command "systemctl stop node_exporter-9100"

tiup cluster exec cvs_db --sudo --command "systemctl stop node_exporter"

What does blackbox_exporter do? Should it be killed?

It mainly monitors network ping latency and does not conflict with node_exporter. Check whether the node_exporter startup script under root conflicts with the one TiDB uses.

The one running as root has already been stopped; I confirmed this several times.

It’s not about whether it is stopped. The point is that some path being called may end up running the root-owned one, or something like that.

If there are logs that can be seen, it would be easy to locate.

Do you have any troubleshooting ideas?

Check by running ls -l /etc/systemd/system/ | grep exporter.

The default value of the tidb_distsql_scan_concurrency parameter is 15. This parameter controls the number of concurrent threads used for scanning data in TiDB. Increasing this value can improve the performance of large queries, but it may also increase the load on the system.

Does shutting down blackbox_exporter affect the service? Maybe I should shut it down too.

No impact. At which step did the upgrade fail? Check whether the relevant paths in node_exporter-9100.service are correct, and use lsof -i:port to see whether any exporter is listed.
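A small sketch of the path check suggested here. The function name `unit_execstart` is made up for illustration, and the unit file location is assumed to be under /etc/systemd/system/ as in the earlier `ls` command. It prints the `ExecStart` command from a unit file so the script path can be compared with the actual deploy directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ExecStart command line(s) of a systemd
# unit file so the referenced script path can be inspected.
unit_execstart() {
  sed -n 's/^ExecStart=//p' "$1"
}
```

For example, `unit_execstart /etc/systemd/system/node_exporter-9100.service` followed by `ls -l` on the printed path would show whether the unit points at a script that still exists. `sudo lsof -i:9100` then shows whether anything is bound to the port.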

There is no exporter information, the path is correct. TiKV, TiDB, and PD have all succeeded. Finally, some components of the monitoring restarted, but it got stuck on the restart of node_exporter.


The node_exporter has been successfully restarted, and the information in the cluster within tiup will also be updated, so there should be no problem.

No, it’s still an issue with node_exporter. But TiDB is still usable at the moment.

Or can I just directly change the version in the meta file within tiup?