Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 3节点kv,3副本,想测试验证一下,在挂掉一个kv节点或者磁盘数据被清理时,整个tidb集群是否能够正常工作。

[Test Environment for TiDB] Testing

[TiDB Version] v6.5.0

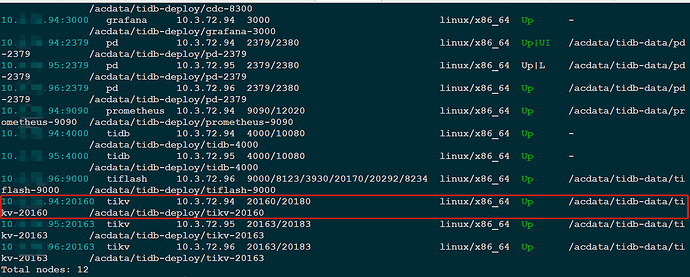

[Reproduction Path] Manually delete the storage path of one of the kv nodes: rm -rf /acdata/tidb-deploy/tikv-20163; rm -rf /acdata/tidb-data/tikv-20163

[Encountered Problem: Phenomenon and Impact] Stop the kv service of this node, then perform the scale-in operation using the following command:

# tiup cluster scale-in test-my-tidb-cluster --node 10.3.72.94:20163

Then, check the status of this kv node in the cluster, it remains in Pending Offline and cannot be recycled.

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

The official documentation provides a detailed introduction to the monitoring indicators of TiDB. You can refer to the following link: TiDB 监控框架概述 | PingCAP 文档中心.

Three replicas, high availability cluster, 3 nodes, can function normally even if one goes down.

Yes, it can indeed work normally. Now, I want to restore this KV node. First, scale down, then scale up. As a result, it got stuck and didn’t work. After using --force to forcibly clear it, scaling up failed.

One issue with TiDB is the relationship between the number of replicas and the number of nodes. You can check the official documentation.

When you have time, read the official documentation more. Your questions can all be resolved.

According to the key points I highlighted, the correct logic is: first scale out, then scale in the TiKV nodes.

Your issue is: scaling down TiKV nodes, the low regions cannot find other nodes to maintain three replicas.

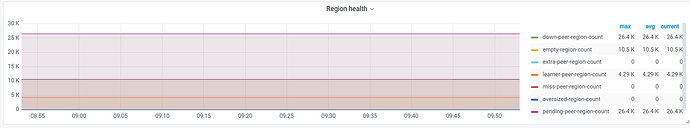

Currently, the health status of the region is as follows:

Can you now scale out a new KV node?

Since the node was manually deleted, you need to use -force to scale down.

What error is reported when scaling up fails?

First, add the node, then the Pending Offline node will release the region. It requires time for scheduling and eventually converting to the tombstone state before it can be properly taken offline.

At least 3 TiKV nodes are required for 3 replicas.

If you can scale out online, then scale out first. If you can’t scale out, then force it.

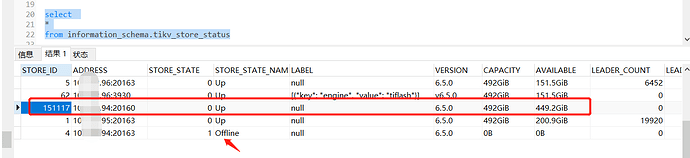

On the current problematic KV node, a new KV was successfully added. The status is as follows:

The one below that is already in the offline state cannot be cleaned up from this table. I directly changed the port to 20160.

Are you still there, pending offline?

Next, will the newly expanded KV node synchronize and replicate the replicas from the other two KV nodes to ultimately maintain a state of three replicas?

Currently, the disk space remains at 18G and hasn’t changed.

# df -h|grep acdata

/dev/mapper/acvg-lv_data 493G 18G 450G 4% /acdata

Pending offline is no longer there. I used the --force parameter to forcefully clear it.

Go to PD and check if the store is still there.