Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: 表大批量删除数据后 使用不带任何条件的limit1非常慢

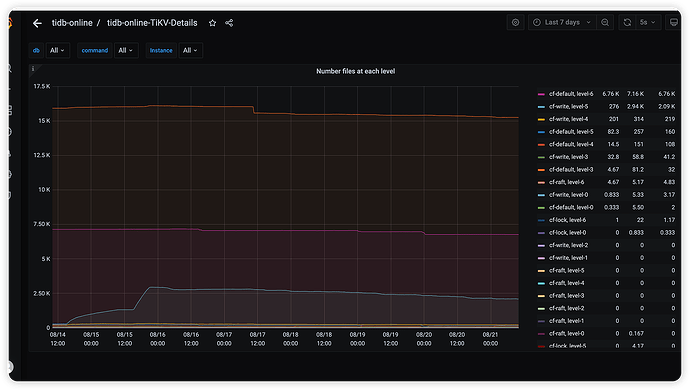

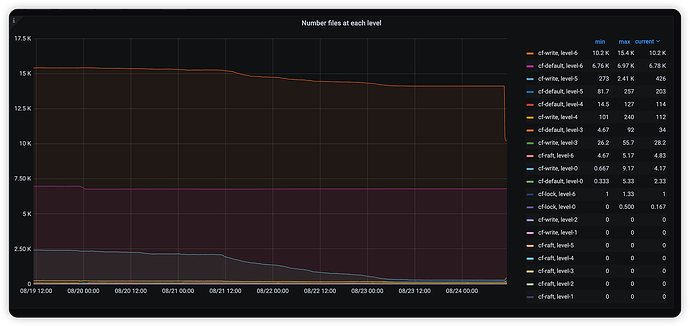

The background is a table with about 1.3 billion records. Approximately 1 billion records were deleted using non-transactional DML, leaving around 200 million records.

Now, executing select * from xxx limit 1 takes more than 3 minutes to return a result. However, using an index in the WHERE clause for queries works normally.

Execution plan:

Limit_7 1.00 1 root time:3m24.4s, loops:2 offset:0, count:1 N/A N/A

└─IndexReader_14 1.00 1 root time:3m24.4s, loops:1, cop_task: {num: 1829, max: 402.8ms, min: 134.9µs, avg: 111.7ms, p95: 246.4ms, max_proc_keys: 1, p95_proc_keys: 0, tot_proc: 3m22.7s, tot_wait: 42ms, rpc_num: 1829, rpc_time: 3m24.3s, copr_cache_hit_ratio: 0.46, distsql_concurrency: 1} index:Limit_13 326 Bytes N/A

└─Limit_13 1.00 1 cop[tikv] tikv_task:{proc max:472ms, min:33ms, avg: 186.8ms, p80:229ms, p95:280ms, iters:1829, tasks:1829}, scan_detail: {total_process_keys: 1, total_process_keys_size: 46, total_keys: 851728674, get_snapshot_time: 283.7ms, rocksdb: {delete_skipped_count: 19440216, key_skipped_count: 871167911, block: {cache_hit_count: 11891, read_count: 554620, read_byte: 5.93 GB, read_time: 2.09s}}} offset:0, count:1 N/A N/A

└─IndexFullScan_11 1.00 1 cop[tikv] table:h_equip_bin_box_status_history, index:idx_gmt_create(gmt_create) tikv_task:{proc max:472ms, min:33ms, avg: 186.8ms, p80:229ms, p95:280ms, iters:1829, tasks:1829} keep order:false N/A N/A