Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tikv节点从3个扩容到5个以后,Store Region score一直在波动 (After scaling TiKV out from 3 to 5 nodes, the Store Region score keeps fluctuating)

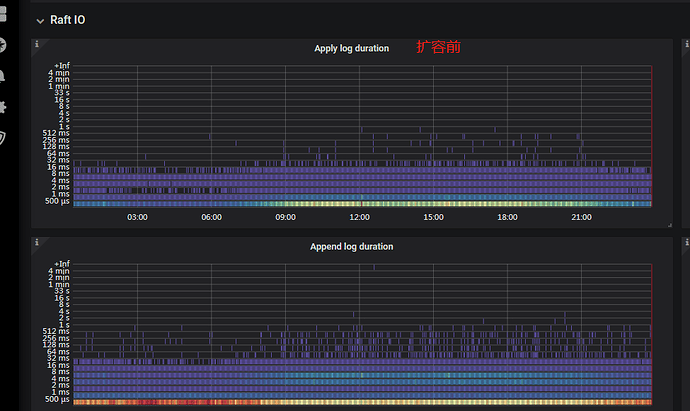

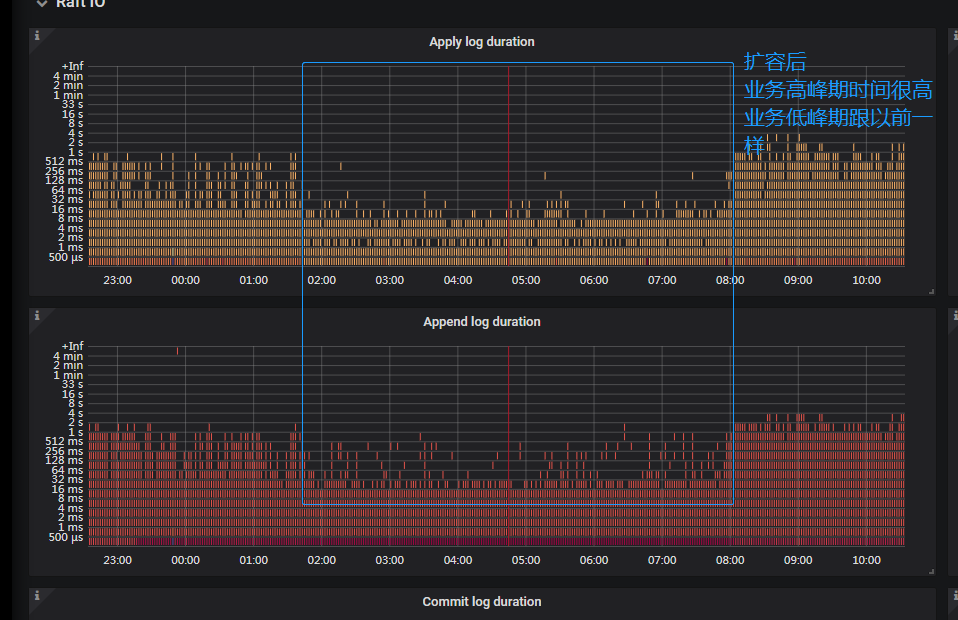

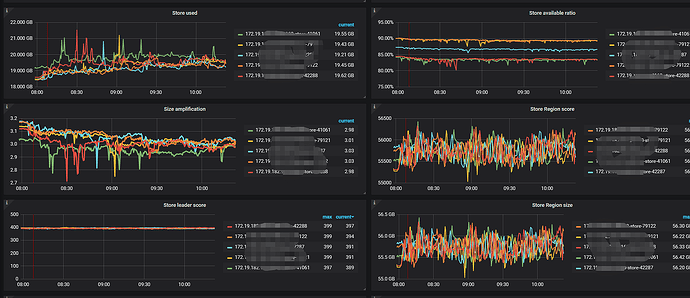

After scaling out TiKV from 3 nodes to 5, the Store Region score keeps fluctuating, and the apply log and append log durations have increased severalfold.

We have tried this several times: scaling back in to 3 TiKV nodes resolves the issue, and scaling out to 5 nodes brings it back.

How should this be resolved, or what troubleshooting steps should be taken?

【TiDB Environment】 Production

【TiDB Version】 4.0.10

Is it because the scheduling is not yet complete, thus affecting the IO?

Has concurrent writing increased during peak periods?

The scheduling was completed a few days ago, and the number of regions was not high to begin with. The expansion was completed in about an hour.

During peak business times, the query volume increases significantly, but the write volume is not large.

“Reducing TiKV to 3 nodes solves the problem, but expanding to 5 nodes causes it again.” Are the configurations of the TiKV nodes the same? Especially the disk IO capability.

Among the 5 TiKV nodes, there are two different capacity specifications, but the IO capability is the same.

Follow this link to export the PD monitoring pages before and after scaling out: https://metricstool.pingcap.com/#backup-with-dev-tools
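Besides the exported dashboards, the per-store scores can be read from PD's HTTP API. The following is a minimal sketch, not a definitive tool: the PD address is an assumption, the helper names are hypothetical, and the field names (`stores`, `store.id`, `store.address`, `status.capacity`, `status.region_count`, `status.region_score`) are what the `/pd/api/v1/stores` response is assumed to contain, so they should be checked against the actual output on 4.0.10.

```python
import json
import urllib.request

# Assumption: replace with the address of a real PD node.
PD_ADDR = "http://127.0.0.1:2379"


def fetch_stores(pd_addr=PD_ADDR):
    """Fetch the store list from PD (assumed endpoint: /pd/api/v1/stores)."""
    with urllib.request.urlopen(f"{pd_addr}/pd/api/v1/stores", timeout=5) as resp:
        return json.load(resp)


def summarize(stores_doc):
    """Return one row per store, sorted by region score, highest first."""
    rows = []
    for item in stores_doc.get("stores", []):
        store = item.get("store", {})
        status = item.get("status", {})
        rows.append({
            "id": store.get("id"),
            "address": store.get("address"),
            "capacity": status.get("capacity"),  # kept as the raw string PD reports
            "region_count": status.get("region_count", 0),
            "region_score": status.get("region_score", 0.0),
        })
    return sorted(rows, key=lambda r: r["region_score"], reverse=True)


def score_spread(rows):
    """Difference between the highest and lowest region score (0.0 if empty)."""
    scores = [r["region_score"] for r in rows]
    return max(scores) - min(scores) if scores else 0.0
```

Calling `summarize(fetch_stores())` at intervals and comparing `score_spread` between runs gives a rough view of whether the scores are converging or still moving, alongside each store's capacity.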

Through monitoring, we found empty regions. We modified split-region-on-table and enable-cross-table-merge, and unified the disk capacity of each TiKV, but the effect is still not very noticeable.

After removing the hot-region scheduler with `scheduler remove balance-hot-region-scheduler`, region scheduling on each TiKV node decreased significantly and stabilized. The apply log duration also dropped from 256-512ms to 64-128ms. However, that is still considerably higher than the 16-32ms seen before the scale-out.
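For anyone repeating this step, the scheduler subcommands are issued through pd-ctl. Below is a minimal sketch that only builds and prints the command lines unless `dry_run=False` is passed. The `pd-ctl` binary name on the PATH, the PD address, and the helper names are all assumptions; on a tiup-managed cluster the same subcommands would typically go through `tiup ctl` instead.

```python
import shlex
import subprocess

# Assumptions: PD endpoint and binary name; adjust for the real cluster.
PD_ADDR = "http://127.0.0.1:2379"
HOT_SCHEDULER = "balance-hot-region-scheduler"


def pd_ctl(*args, pd_addr=PD_ADDR, binary="pd-ctl"):
    """Build a pd-ctl command line as an argv list (nothing is executed here)."""
    return [binary, "-u", pd_addr, *args]


def hot_scheduler_commands(pd_addr=PD_ADDR):
    """Commands to list schedulers, remove the hot-region scheduler, and re-add it."""
    return {
        "show": pd_ctl("scheduler", "show", pd_addr=pd_addr),
        "disable": pd_ctl("scheduler", "remove", HOT_SCHEDULER, pd_addr=pd_addr),
        "enable": pd_ctl("scheduler", "add", HOT_SCHEDULER, pd_addr=pd_addr),
    }


def run(argv, dry_run=True):
    """Print the command; execute it only when dry_run is False."""
    print(shlex.join(argv))
    if dry_run:
        return None
    result = subprocess.run(argv, check=True, capture_output=True, text=True)
    return result.stdout
```

Running `scheduler show` before and after the change confirms whether `balance-hot-region-scheduler` is still listed, and the `enable` command is the way back if hotspot balancing needs to be restored later.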

After turning off hotspot scheduling, the region scheduling monitoring is no longer so chaotic!

Could you share the output of `tiup mirror show`?

I didn't install a mirror. I turned off hotspot scheduling around 3 PM yesterday, and by around 8 PM the apply log duration and query response time had returned to their levels before the scale-out. It is quite strange that expanding TiKV from 3 to 5 nodes resulted in so much additional hotspot scheduling.

It feels like adding these two nodes has affected the scheduling algorithm.

Yes, for now hotspot scheduling has simply been turned off.
