Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: kv节点剔除后,commit耗时增加 (Commit latency increased after a KV node was removed)

[TiDB Usage Environment]

Production Business

[Overview] Scenario + Problem Overview

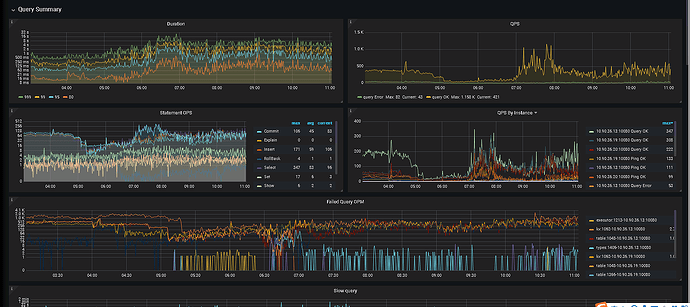

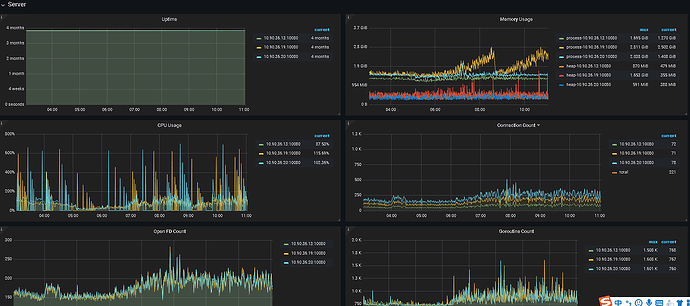

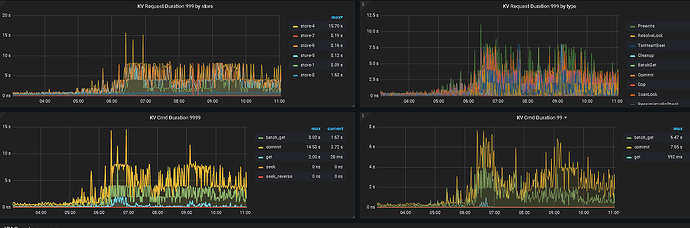

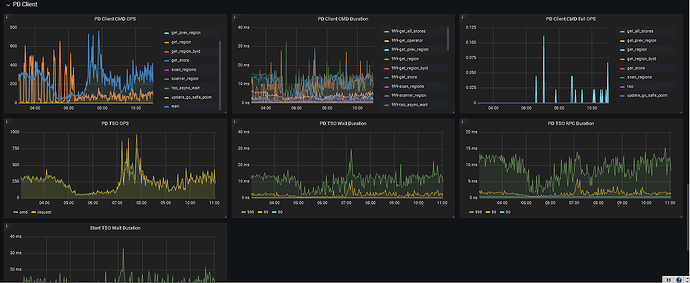

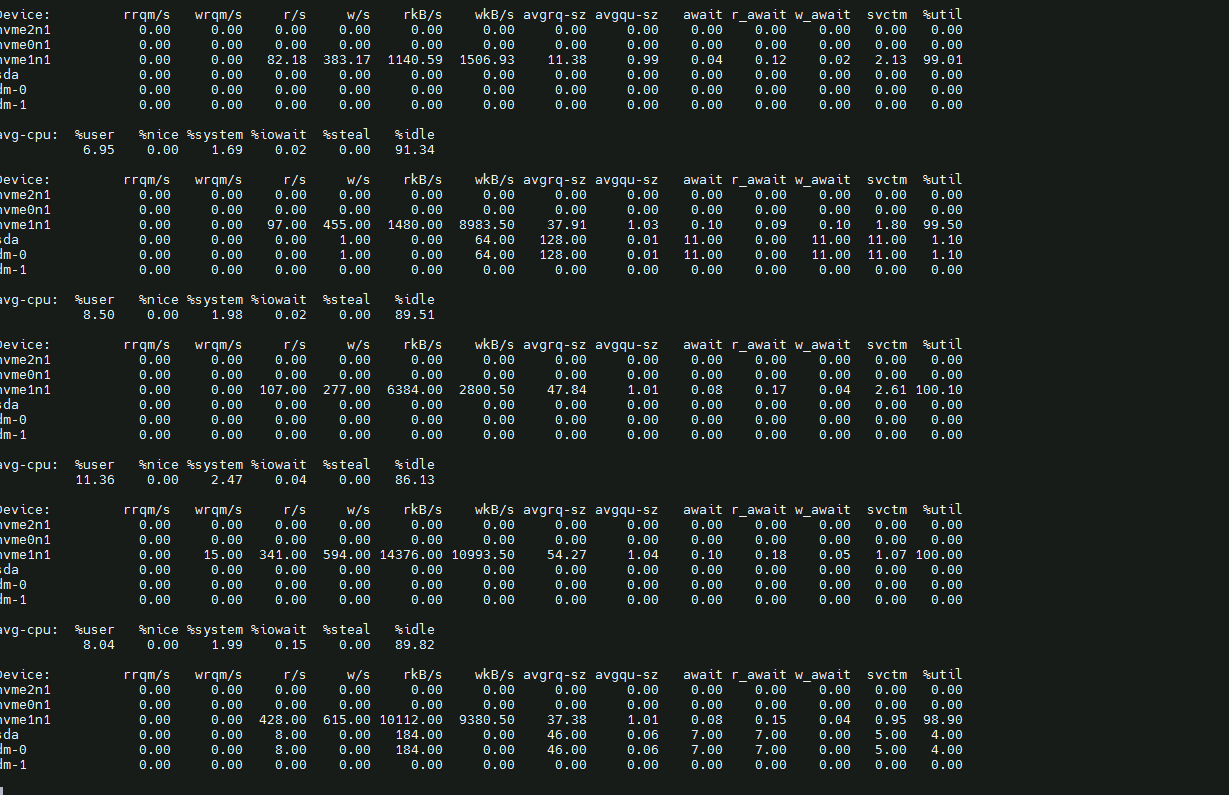

A TiKV node was shut down because of a hardware failure. After the shutdown, the commit duration shown in the TiKV duration monitoring increased nearly tenfold.

[Background] Operations performed

After the hardware failure of the TiKV node, the problematic TiKV was shut down and taken offline.

[Phenomenon] Business and database phenomena

SELECT and DML statements across the entire TiDB cluster have slowed down.

[Problem] Current issue encountered

SELECT and DML statements across the entire TiDB cluster have slowed down.

[Business Impact]

SELECT and DML statements across the entire TiDB cluster have slowed down.

[TiDB Version]

mysql> select tidb_version();

+--------------------------------------------------------------------+

| tidb_version() |

+--------------------------------------------------------------------+

| Release Version: v3.1.0

Git Commit Hash: 1347df814de0d603ef844d53b2c8d54fc760b75e

Git Branch: heads/refs/tags/v3.1.0

UTC Build Time: 2020-04-16 09:38:11

GoVersion: go version go1.13 linux/amd64

Race Enabled: false

TiKV Min Version: v3.0.0-60965b006877ca7234adaced7890d7b029ed1306

Check Table Before Drop: false |

[Application Software and Version]

[Attachments] Relevant logs and configuration information

- TiUP Cluster Display information

- TiUP Cluster Edit config information

Monitoring (https://metricstool.pingcap.com/)

- TiDB-Overview Grafana monitoring

- TiDB Grafana monitoring

- TiKV Grafana monitoring

- PD Grafana monitoring

- Corresponding module logs (including logs 1 hour before and after the issue)
