Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: After a successful PD scale-out and scale-in, executing a create index statement fails with ERROR 1105 (HY000): DDL job rollback, error

[TiDB Usage Environment] Production Environment / Testing / Poc

[TiDB Version] v7.1.1

[Reproduction Path]


Initially, the cluster had 3 PD nodes. We scaled out with 3 new PD nodes, switched the leader to one of the new nodes, and then scaled in the 3 old nodes. There were no anomalies during the scale-in, and the cluster status now shows as normal. In TiDB, table creation, insert, update, delete, and ordinary alter statements all work, but any statement that adds an index fails with:

ERROR 1105 (HY000): DDL job rollback, error msg: pd address (192.168.xxx:2379,192.168.xxx:2379,192.168.xxx:2379) not available, error is Get "http://192.168.xxx:2379/pd/api/v1/config/cluster-version": dial tcp 192.168.xxx:2379: connect: connection refused, please check network

The IPs in the error message are the 3 PDs that were scaled in.


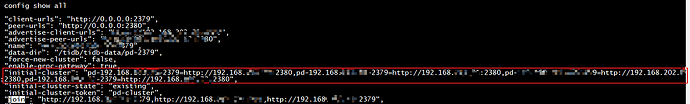

Checking the PD configuration:

The initial-cluster configuration still lists the three old PDs, plus only one of the new machines.

The join configuration contains only the original 3 PDs, with no new machines.
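To make the mismatch described above easier to see, here is a minimal Python sketch that compares an initial-cluster value against the peer URLs of the members that are actually alive. It assumes the usual `name=peer_url` comma-separated format for initial-cluster; the node names and addresses are hypothetical placeholders, not the ones from this cluster.

```python
def parse_initial_cluster(value):
    """Split 'name=peer_url,name=peer_url' into a {name: peer_url} dict."""
    members = {}
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        name, _, peer_url = item.partition("=")
        members[name.strip()] = peer_url.strip()
    return members


def stale_entries(initial_cluster, live_peer_urls):
    """Entries listed in initial-cluster whose peer URL is no longer a live member."""
    live = set(live_peer_urls)
    configured = parse_initial_cluster(initial_cluster)
    return {name: url for name, url in configured.items() if url not in live}


def missing_members(initial_cluster, live_peer_urls):
    """Live peer URLs that do not appear in initial-cluster at all."""
    configured = set(parse_initial_cluster(initial_cluster).values())
    return sorted(url for url in live_peer_urls if url not in configured)


if __name__ == "__main__":
    # Hypothetical values: pd-1..pd-3 are the old nodes, pd-4..pd-6 the new ones.
    initial_cluster = (
        "pd-1=http://10.0.1.1:2380,pd-2=http://10.0.1.2:2380,"
        "pd-3=http://10.0.1.3:2380,pd-4=http://10.0.1.4:2380"
    )
    live = [
        "http://10.0.1.4:2380",
        "http://10.0.1.5:2380",
        "http://10.0.1.6:2380",
    ]
    print("stale:", stale_entries(initial_cluster, live))
    print("missing:", missing_members(initial_cluster, live))
```

With values shaped like the ones in this thread, the three old nodes come back as stale and two of the new nodes come back as missing.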

[Encountered Problem: Phenomenon and Impact]

In the cluster after the scale-out and scale-in, any statement that creates an index fails with the error shown above.

Run member in pd-ctl and check how the leader was switched.

Try setting the variable tidb_ddl_enable_fast_reorg to off (SET GLOBAL tidb_ddl_enable_fast_reorg = OFF;).

Should the initial-cluster and join configurations include all PD nodes, including newly added and previously scaled-down ones?

The leader was already switched earlier, and the PD leader is on one of the new machines. The status looks normal in TiUP. The only anomaly is that, on the new PD machine, the initial-cluster and join parameters still show the old PDs.

Currently, initial-cluster lists only the 3 old nodes and one new node, and that new node is the current leader. The other two new nodes are missing from it. The join parameter lists 3 nodes, all of them old.

Will this parameter have an impact?

Post a screenshot of the member output. After that, you can try member leader resign.

Can you still see the original three nodes when you run member in pd-ctl?

I can't see them anymore. The member output is normal; only the initial-cluster and join parameters are abnormal.
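The check discussed in this exchange can be sketched in Python: pull the PD addresses out of the DDL error message and see which of them are absent from the current member list. The JSON shape below (a `members` array whose entries carry `client_urls`) is an assumption based on what PD's members output typically looks like, and all names and addresses are hypothetical.

```python
import json
import re


def addresses_in_error(message):
    """Extract the comma-separated addresses from 'pd address (...) not available'."""
    match = re.search(r"pd address \(([^)]*)\)", message)
    if match is None:
        return []
    return [addr.strip() for addr in match.group(1).split(",") if addr.strip()]


def member_client_addresses(members_json):
    """Collect host:port for every client URL in a members response (assumed shape)."""
    doc = json.loads(members_json)
    result = set()
    for member in doc.get("members", []):
        for url in member.get("client_urls", []):
            # Drop the scheme so the value is comparable with the error message.
            result.add(url.split("://", 1)[-1].rstrip("/"))
    return result


def removed_addresses(message, members_json):
    """Addresses the error complains about that are not current PD members."""
    live = member_client_addresses(members_json)
    return [addr for addr in addresses_in_error(message) if addr not in live]


if __name__ == "__main__":
    # Hypothetical error text and member list, shaped like the ones in this thread.
    error = (
        "ERROR 1105 (HY000): DDL job rollback, error msg: pd address "
        "(10.0.1.1:2379,10.0.1.2:2379,10.0.1.3:2379) not available"
    )
    members = json.dumps({
        "members": [
            {"name": "pd-4", "client_urls": ["http://10.0.1.4:2379"]},
            {"name": "pd-5", "client_urls": ["http://10.0.1.5:2379"]},
            {"name": "pd-6", "client_urls": ["http://10.0.1.6:2379"]},
        ]
    })
    print(removed_addresses(error, members))
```

If every address in the error turns out to be a removed member while the member list itself looks healthy, that points at a component still holding the old PD addresses rather than at PD membership.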

If that still doesn't work, the only remaining option is to try reloading the PD nodes.

The issue has been resolved. The main cause was that, after scaling PD out and in, all the other nodes needed to be reloaded to pick up the latest configuration. Otherwise, they kept operating against the old nodes' configuration. After reloading all nodes, the error no longer occurs.
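For reference, the reload described above can be wrapped in a small Python helper around `tiup cluster reload`. This is only a sketch: the cluster name is a hypothetical placeholder, the helper functions are made up for illustration, and it defaults to a dry run that just prints the command so nothing is restarted by accident.

```python
import subprocess


def reload_command(cluster_name, roles=None):
    """Build the argv for 'tiup cluster reload', optionally limited to some roles."""
    cmd = ["tiup", "cluster", "reload", cluster_name]
    if roles:
        # -R takes a comma-separated list of roles, e.g. tidb,tikv
        cmd += ["-R", ",".join(roles)]
    return cmd


def reload_cluster(cluster_name, roles=None, dry_run=True):
    """Print the command (dry run) or execute it and return its exit code."""
    cmd = reload_command(cluster_name, roles)
    if dry_run:
        print(" ".join(cmd))
        return 0
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    # "my-cluster" is a placeholder; pass dry_run=False to actually run tiup.
    reload_cluster("my-cluster")
```

The poster reloaded all nodes, which corresponds to calling the helper without `roles`. Restricting the reload to specific roles is shown only as an option and was not tested in this thread.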

Needing to reload shouldn’t be normal behavior; there must still be an issue somewhere.
