Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: TiKV缩容后ReplicaChecker未调度Operator处理offline-region (After TiKV scale-in, ReplicaChecker does not schedule an Operator to handle offline regions)

【TiDB Usage Environment】Production Environment

【TiDB Version】v4.0.0-rc.2

【Encountered Issue】

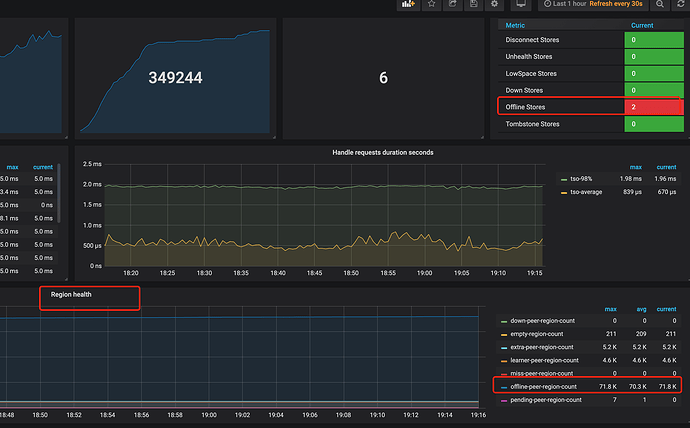

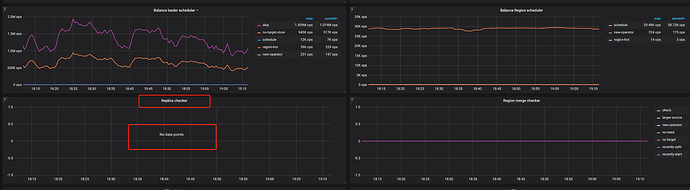

After a TiKV scale-in, ReplicaChecker did not schedule any Operator to handle the offline Stores.

To replace hardware, two new TiKV machines were added first, and then the two old machines were scaled in (8 TiKV instances in total). The old stores are now in the Offline state, but ReplicaChecker has not scheduled any Operator to move the Regions that still have peers on those offline stores.

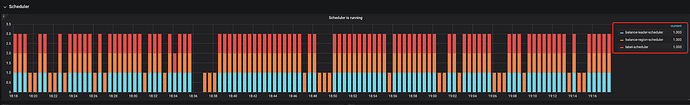

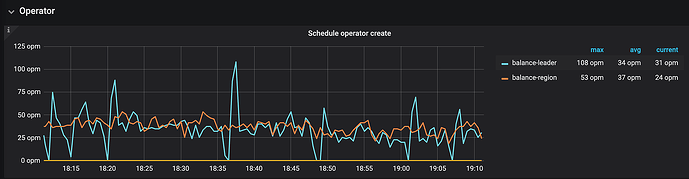

The dashboard shows only balance-region and balance-leader Operators; there are no replace-offline-replica or remove-extra-replica Operators.
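
The same check can be done outside the dashboard by tallying operator kinds. The snippet below is only a minimal sketch: it assumes the operator kind names have already been extracted into a plain list (the `kinds` argument and the sample list are hypothetical, and no particular `pd-ctl operator show` output format is assumed), and it reports which of the two ReplicaChecker operator kinds named in this post are absent.

```python
from collections import Counter

# Operator kinds this post expects ReplicaChecker to produce.
REPLICA_CHECKER_KINDS = ("replace-offline-replica", "remove-extra-replica")


def summarize_operators(kinds):
    """Count operators per kind and list the expected kinds that never appear."""
    counts = Counter(kinds)
    missing = [k for k in REPLICA_CHECKER_KINDS if counts[k] == 0]
    return counts, missing


# Hypothetical sample mirroring the situation described above.
sample_kinds = ["balance-region", "balance-leader", "balance-region"]

if __name__ == "__main__":
    counts, missing = summarize_operators(sample_kinds)
    print("operator counts:", dict(counts))
    print("missing ReplicaChecker kinds:", missing)
```

If `missing` lists both kinds while the stores are still Offline, that matches the symptom reported here.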

According to the documentation, ReplicaChecker cannot be turned off, only balance-leader-scheduler and balance-region-scheduler can be turned off.

Could someone advise how to get ReplicaChecker to start handling these offline-peer Regions?

【Reproduction Path】

Scale in TiKV, then observe that the 2 TiKV stores remain in the Offline state and data migration does not start automatically.
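
To confirm this state without the dashboard, the store list returned by PD (for example from `pd-ctl store`) can be filtered for Offline stores. This is a rough sketch that assumes the JSON has a top-level `stores` array whose items carry `store.state_name` and `status.region_count`; the sample payload, store IDs, addresses, and region counts are invented for illustration.

```python
import json


def offline_stores(stores_json):
    """Return (store_id, address, region_count) for each store in Offline state."""
    data = json.loads(stores_json)
    result = []
    for item in data.get("stores", []):
        store = item.get("store", {})
        status = item.get("status", {})
        if store.get("state_name") == "Offline":
            result.append(
                (store.get("id"), store.get("address"), status.get("region_count", 0))
            )
    return result


# Hypothetical payload; real values come from the PD of the affected cluster.
SAMPLE = json.dumps({
    "count": 3,
    "stores": [
        {"store": {"id": 1, "address": "10.0.0.1:20160", "state_name": "Up"},
         "status": {"region_count": 5000}},
        {"store": {"id": 4, "address": "10.0.0.4:20160", "state_name": "Offline"},
         "status": {"region_count": 1200}},
        {"store": {"id": 5, "address": "10.0.0.5:20160", "state_name": "Offline"},
         "status": {"region_count": 1180}},
    ],
})

if __name__ == "__main__":
    for store_id, address, regions in offline_stores(SAMPLE):
        print(f"store {store_id} ({address}) is Offline with {regions} regions left")
```

A region count on an Offline store that does not decrease over time indicates that no peers are being moved away, which is the behavior described in this post.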

PD Configuration

    {
      "replication": {
        "enable-placement-rules": "false",
        "location-labels": "",
        "max-replicas": 3,
        "strictly-match-label": "false"
      },
      "schedule": {
        "enable-cross-table-merge": "false",
        "enable-debug-metrics": "true",
        "enable-location-replacement": "true",
        "enable-make-up-replica": "true",
        "enable-one-way-merge": "false",
        "enable-remove-down-replica": "true",
        "enable-remove-extra-replica": "true",
        "enable-replace-offline-replica": "true",
        "high-space-ratio": 0.8,
        "hot-region-cache-hits-threshold": 3,
        "hot-region-schedule-limit": 4,
        "leader-schedule-limit": 4,
        "leader-schedule-policy": "count",
        "low-space-ratio": 0.9,
        "max-merge-region-keys": 200000,
        "max-merge-region-size": 20,
        "max-pending-peer-count": 16,
        "max-snapshot-count": 8,
        "max-store-down-time": "30m0s",
        "merge-schedule-limit": 8,
        "patrol-region-interval": "100ms",
        "region-schedule-limit": 32,
        "replica-schedule-limit": 64,
        "scheduler-max-waiting-operator": 5,
        "split-merge-interval": "1h0m0s",
        "store-balance-rate": 15,
        "store-limit-mode": "manual",
        "tolerant-size-ratio": 0
      }
    }
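
As a quick sanity check on the configuration above, the settings that most directly gate ReplicaChecker's work on offline stores can be verified programmatically. The sketch below assumes, without confirmation from PD's source, that the relevant gates are the two `enable-*` switches and a positive `replica-schedule-limit`; the function name is hypothetical, and the dictionary copies only the relevant values from the posted config (note that the switches are reported as strings).

```python
def replica_checker_blockers(schedule):
    """Return a list of config findings that would stop offline-replica handling."""
    problems = []
    for key in ("enable-replace-offline-replica", "enable-remove-extra-replica"):
        value = schedule.get(key)
        if value is None:
            problems.append(f"{key} is not present")
        elif str(value).lower() != "true":  # switches may be strings or booleans
            problems.append(f"{key} is disabled")
    if int(schedule.get("replica-schedule-limit", 0)) <= 0:
        problems.append("replica-schedule-limit is not positive")
    return problems


# Relevant subset of the "schedule" section posted above.
SCHEDULE = {
    "enable-replace-offline-replica": "true",
    "enable-remove-extra-replica": "true",
    "replica-schedule-limit": 64,
}

if __name__ == "__main__":
    findings = replica_checker_blockers(SCHEDULE)
    print(findings if findings else "no blocking setting found in the schedule section")
```

For the posted values the list is empty, so under these assumptions the switches themselves do not explain the missing Operators.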

【Issue Phenomenon and Impact】

No Operator is generated to migrate Regions off the offline TiKV Stores, so the stores stay in the Offline state.

【Attachments】