Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Analyze Table 很慢,且报错wait recvLoop: context deadline exceeded

【TiDB Usage Environment】Production environment or Test environment or POC

【TiDB Version】5.4.1

【Encountered Problem】

【Reproduction Path】What operations were performed that led to the problem

【Problem Phenomenon and Impact】

[2022/06/28 10:40:16.649 +08:00] [ERROR] [analyze.go:148] [“analyze failed”] [error=“wait recvLoop: context deadline exceeded”]

【Attachments】

Please provide the version information of each component, such as cdc/tikv, which can be obtained by executing cdc version/tikv-server --version.

Could you share the information before and after this error?

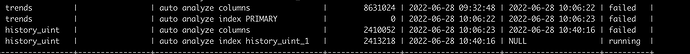

[2022/06/28 10:40:16.639 +08:00] [INFO] [coprocessor.go:844] [“[TIME_COP_PROCESS] resp_time:10.721943311s txnStartTS:434211115702943810 region_id:1435368 store_addr:10.74.139.12:20160 kv_process_ms:10718 kv_wait_ms:3 kv_read_ms:20 processed_versions:256 total_versions:257 rocksdb_delete_skipped_count:97 rocksdb_key_skipped_count:1 rocksdb_cache_hit_count:2585 rocksdb_read_count:0 rocksdb_read_byte:0”] [conn=555]

[2022/06/28 10:40:16.649 +08:00] [ERROR] [analyze.go:148] [“analyze failed”] [error=“wait recvLoop: context deadline exceeded”]

[2022/06/28 10:40:16.649 +08:00] [INFO] [analyze.go:130] [“analyze table zabbix.history_uint has failed”] [partition=] [“job info”=“auto analyze columns”] [“start time”=2022/06/28 10:06:23.541 +08:00] [“end time”=2022/06/28 10:40:16.649 +08:00] [cost=33m53.108587436s]

[2022/06/28 10:40:16.652 +08:00] [INFO] [coprocessor.go:844] [“[TIME_COP_PROCESS] resp_time:10.734656783s txnStartTS:434211115702943810 region_id:1435368 store_addr:10.74.139.12:20160 kv_process_ms:10730 kv_wait_ms:3 kv_read_ms:37 processed_versions:512 total_versions:512 rocksdb_delete_skipped_count:339 rocksdb_key_skipped_count:0 rocksdb_cache_hit_count:5041 rocksdb_read_count:0 rocksdb_read_byte:0”] [conn=555]

[2022/06/28 10:40:16.654 +08:00] [INFO] [coprocessor.go:844] [“[TIME_COP_PROCESS] resp_time:670.453138ms txnStartTS:434211110919077968 region_id:809288 store_addr:10.74.137.17:20160 kv_process_ms:661 kv_wait_ms:0 kv_read_ms:569 processed_versions:38754 total_versions:223936 rocksdb_delete_skipped_count:650 rocksdb_key_skipped_count:199139 rocksdb_cache_hit_count:949 rocksdb_read_count:5 rocksdb_read_byte:327197”] [conn=1540849]

history_uint | | 2022-06-28 11:02:14 | 13543122172 | 15993758471 |

The table is very large, and this analyze is slow and often fails, causing many frequently updated tables to not get automatically analyzed, which in turn makes queries very slow.

mysql> select tidb_version();

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| tidb_version() |

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Release Version: v5.4.1

Edition: Community

Git Commit Hash: be484475e76b3aaa2cad520111353c3327db35fa

Git Branch: heads/refs/tags/v5.4.1

UTC Build Time: 2022-05-07 04:31:32

GoVersion: go1.16.4

Race Enabled: false

TiKV Min Version: v3.0.0-60965b006877ca7234adaced7890d7b029ed1306

Check Table Before Drop: false |

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

If the table is large, slow table analysis is normal. However, the size of the table and the speed of the query are not directly related. Did the query use an index?

Is it normal to fail after 20 minutes? Is there a limit on the size of TIDB tables?

It failed in just 30 minutes. Is this a serial task? Can’t it be parallel? Won’t this block the analyze of other tables? Is this design reasonable?

The image is not visible. Please provide the text you need translated.

Does TiDB support the functionality to not analyze certain tables, like a table filtering feature?

You can’t avoid analyzing. Without analysis, SQL indexes won’t execute accurately. You can set the allowed time for analysis, such as in the morning, afternoon, or at night, to avoid peak periods.

history | | auto analyze index history_1 | 0 | 2022-06-28 13:06:03 | 2022-06-28 13:38:44 | failed

Processed_rows 0 in 40 minutes. What is going on here?

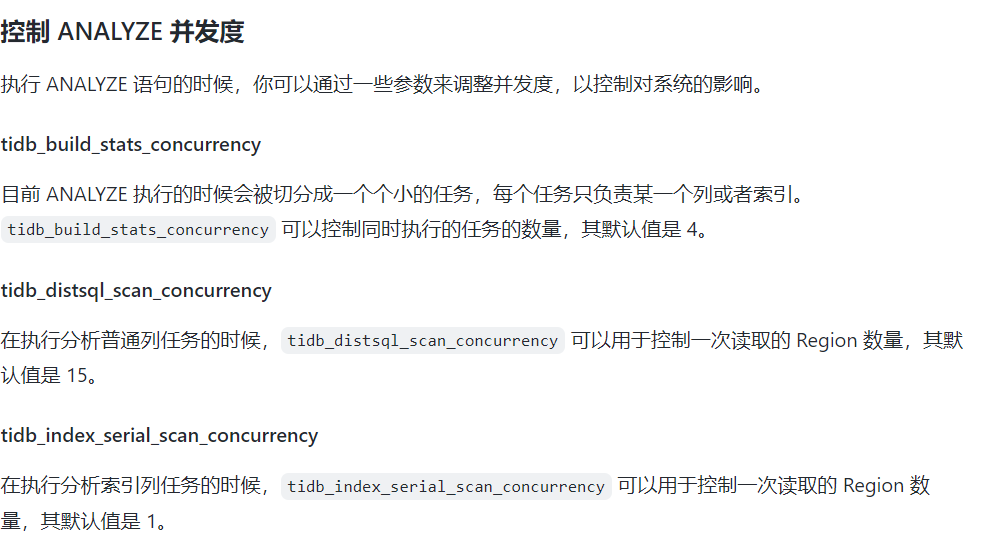

Try manual analyze, you can adjust the following parameters to speed up the process:

To achieve faster analysis speed, you can set tidb_enable_fast_analyze to 1 to enable the fast analyze feature. The default value of this parameter is 0.

Try setting SET GLOBAL tidb_enable_fast_analyze=1.