Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: TiDB集群对磁盘的硬件要求有没有具体的参数信息 (Are there specific parameter requirements for the disk hardware of a TiDB cluster?)

【TiDB Usage Environment】

Production Environment

【TiDB Version】

v5.2.2

【Encountered Problem】

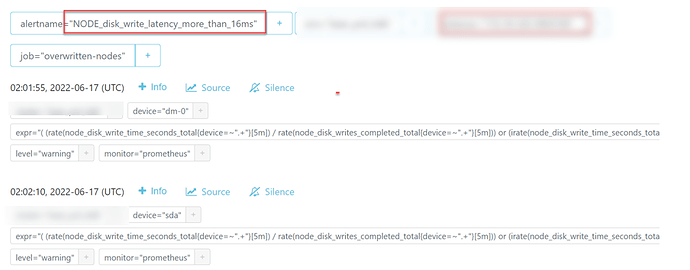

The TiDB cluster in this project frequently reports errors indicating high disk write latency. Compared with our other projects, its disk performance is relatively poor (tested with the dd command). Are there specific disk performance requirements, such as IOPS and read/write latency?

【Reproduction Path】What operations were performed to encounter the problem

The storage is currently hyper-converged, and the underlying disks are SATA SSDs.

【Problem Phenomenon and Impact】

This needs to be evaluated based on the usage scenario. As far as I know:

- Some use HDDs (mechanical disks) because their IO requirements are low, so slow performance is acceptable.
- Some use regular SSDs and have no special IO requirements.
- Some use NVMe SSDs for workloads with higher IO performance requirements.

In summary, you can set up standard clusters and high-performance clusters to match the needs of different scenarios.

Sometimes customers ask for specific figures such as IOPS and read/write latency. These figures are not easy to provide.

Hello:

The following is for reference only.

Using

sudo fio -ioengine=psync -bs=32k -fdatasync=1 -thread -rw=randread -size=10G -filename=fio_randread_test -name='fio randread test' -iodepth=4 -runtime=60 -numjobs=4 -group_reporting --output-format=json --output=fio_randread_result.json

The tested rand read IOPS should not be less than 40000.

Using

sudo fio -ioengine=psync -bs=32k -fdatasync=1 -thread -rw=randrw -percentage_random=100,0 -size=10G -filename=fio_randread_write_test -name='fio mixed randread and sequential write test' -iodepth=4 -runtime=60 -numjobs=4 -group_reporting --output-format=json --output=fio_randread_write_test.json

The tested rand read IOPS should not be less than 10000.

The seq write IOPS should not be less than 10000.

Using

sudo fio -ioengine=psync -bs=32k -fdatasync=1 -thread -rw=randrw -percentage_random=100,0 -size=10G -filename=fio_randread_write_latency_test -name='fio mixed randread and sequential write test' -iodepth=1 -runtime=60 -numjobs=1 -group_reporting --output-format=json --output=fio_randread_write_latency_test.json

The tested rand read latency should not be higher than 250000.

The seq write latency should not be higher than 30000.

These thresholds are for NVMe SSDs.
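The fio commands above write their results as JSON, so the comparison against the quoted limits can be scripted. The following is a minimal sketch, not an official tool: the helper names (`load_fio_json`, `summarize`, `check`) are made up for illustration, and it assumes the fio 3.x JSON layout, where each job entry has `read` and `write` sections with `iops` and a `lat_ns` block reported in nanoseconds. The thread itself does not state the latency unit, so treating the limits as nanoseconds is an assumption.

```python
import json

# Thresholds quoted in the reply above (stated there as applying to NVMe SSDs).
# Assumption: the latency limits are compared against fio's "lat_ns" mean
# values, which fio 3.x reports in nanoseconds; the thread gives no unit.
THRESHOLDS = {
    "randread": {"read_iops_min": 40000},
    "mixed": {"read_iops_min": 10000, "write_iops_min": 10000},
    "latency": {"read_lat_ns_max": 250000, "write_lat_ns_max": 30000},
}


def load_fio_json(path):
    """Load a result file written with --output-format=json --output=<path>."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize(fio_result):
    """Pull IOPS and mean total latency out of the first job entry.

    With -group_reporting, fio aggregates the jobs of a group into one entry.
    """
    job = fio_result["jobs"][0]
    return {
        "read_iops": job["read"]["iops"],
        "write_iops": job["write"]["iops"],
        "read_lat_ns": job["read"]["lat_ns"]["mean"],
        "write_lat_ns": job["write"]["lat_ns"]["mean"],
    }


def check(summary, limits):
    """Return a list of human-readable failures; an empty list means pass."""
    failures = []
    for key, limit in limits.items():
        # Keys look like "<metric>_min" or "<metric>_max".
        metric, kind = key.rsplit("_", 1)
        value = summary[metric]
        if kind == "min" and value < limit:
            failures.append(f"{metric}={value} is below {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{metric}={value} is above {limit}")
    return failures


# Hypothetical numbers for illustration only; these are not measurements.
example = {
    "jobs": [
        {
            "read": {"iops": 9000.0, "lat_ns": {"mean": 300000.0}},
            "write": {"iops": 12000.0, "lat_ns": {"mean": 25000.0}},
        }
    ]
}
print(check(summarize(example), THRESHOLDS["latency"]))
```

In practice you would call `summarize(load_fio_json("fio_randread_write_latency_test.json"))` and pass the matching entry of `THRESHOLDS`. Older fio releases report latency under different keys and units, so check the fields in your own output before relying on the comparison.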

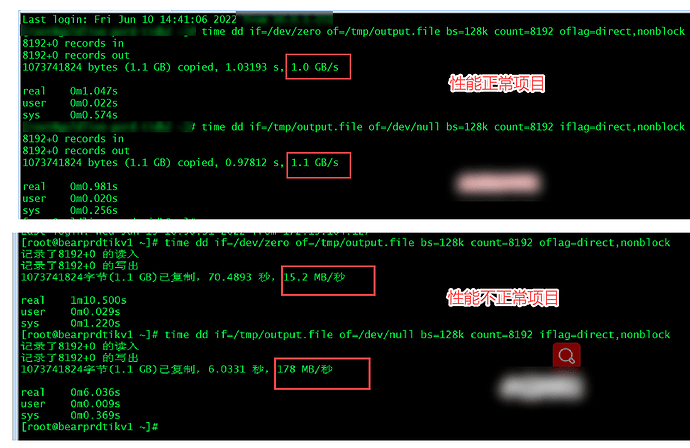

Your deployment is not just abnormal; its performance is extremely poor. A write speed of only 15MB/s is worse than a mechanical disk, which writes at around 300MB/s. Your underlying SSDs are probably configured with RAID 5 redundancy. I suggest deploying TiDB directly on bare metal. A normal SSD writes at about 500MB/s and reads at about 500MB/s. A speed this low suggests a damaged disk underneath your RAID 5 setup.

To clarify the above: SATA SSDs have read/write speeds of about 500MB/s, M.2 SSDs reach at least 1000MB/s, with high-end ones around 6000MB/s, and U.2 enterprise drives reach about 8000MB/s. So bypass the hyper-converged layer. At the very least, the disk should be a passthrough SSD.
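As a rough cross-check, the MB/s figures in this thread can be related to the IOPS thresholds by multiplying or dividing by the block size. The sketch below assumes the 32k block size from the fio commands means 32 KiB (32768 bytes) and that MB/s means 10^6 bytes per second. The function names are made up for illustration, and the result is only an upper-bound estimate because it applies a sequential throughput figure to a random workload.

```python
BLOCK_SIZE = 32 * 1024  # bytes; matches -bs=32k, assuming fio's default 1024 base


def throughput_mb_s(iops, block_size_bytes):
    """Throughput in MB/s (10^6 bytes) implied by an IOPS figure."""
    return iops * block_size_bytes / 1_000_000


def iops_at(throughput_mb, block_size_bytes):
    """IOPS implied by a throughput figure in MB/s (10^6 bytes)."""
    return throughput_mb * 1_000_000 / block_size_bytes


# Throughput needed to sustain the 40000 random-read IOPS threshold at 32 KiB.
print(round(throughput_mb_s(40000, BLOCK_SIZE)), "MB/s")

# IOPS implied by the 15MB/s observed here and by a ~500MB/s SATA SSD.
print(round(iops_at(15, BLOCK_SIZE)), "IOPS at 15MB/s")
print(round(iops_at(500, BLOCK_SIZE)), "IOPS at 500MB/s")
```

Under these assumptions, 40000 IOPS at 32 KiB needs roughly 1.3GB/s, while a 500MB/s SATA SSD tops out near 15000 IOPS at that block size. That is consistent with the thresholds being quoted for NVMe drives, and 15MB/s corresponds to only a few hundred IOPS.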

Even with SATA SSDs, a healthy drive would not write as slowly as yours. There might be a hardware issue with the machine.

Brother, are the latency values you mentioned above in microseconds or nanoseconds?

I have also always believed that there is an issue with their disk performance. The people on the customer’s side are already testing their hyper-converged system.

Thank you, brothers, for the information as a reference.
