Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 问一下tidb的br还原是否有可调参数 (Are there any tunable parameters for TiDB BR restore?)


Can the restoration speed be increased?

My backup speed is 33.3 GB, but the restore is about ten times slower. What could be the reason? Is there a parameter I have not adjusted? The backup is written to S3 and the restore reads from S3.

Where does it show that it’s slow? What was the original response time?

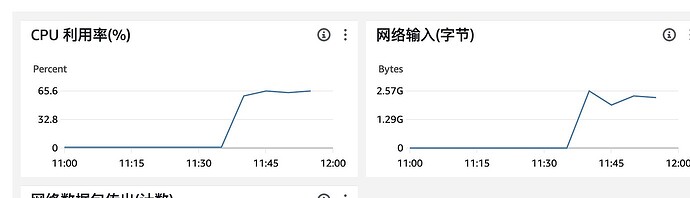

Backup speed is 33GB, but restore speed is only 2.5GB.

Is there any background information? I can’t see anything from this.

Where to back up from? Where to restore to?

I can only say that the restore speed is too slow. It might be due to the disk.

+00:00] [INFO] [collector.go:77] ["Full Restore success summary"] [total-ranges=246425] [ranges-succeed=246425] [ranges-failed=0] [split-region=8m29.304639926s] [restore-ranges=143985] [total-take=8h42m23.354499141s] [BackupTS=450047199759040513] [RestoreTS=450051441882038273] [total-kv=51476612568] [total-kv-size=6.522TB] [average-speed=208.1MB/s] [restore-data-size(after-compressed)=1.104TB] [Size=1104033608541]

It restores at about 200 MB per second (average-speed=208.1MB/s in the log), with 6.5 TB in total.
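As a sanity check, the average speed in the summary log can be recomputed from total-kv-size and total-take. This is only a rough sketch: it assumes the sizes in the log use decimal units (1 TB = 10^6 MB), and the helper `parse_duration_seconds` is a made-up name that handles only the h/m/s form seen in this log.

```python
import re


def parse_duration_seconds(text: str) -> float:
    """Parse a duration like '8h42m23.354499141s' into seconds (h/m/s only)."""
    match = re.fullmatch(r"(?:(\d+)h)?(?:(\d+)m)?(?:([\d.]+)s)?", text)
    if not text or match is None:
        raise ValueError(f"unsupported duration: {text!r}")
    hours, minutes, seconds = match.groups()
    return int(hours or 0) * 3600 + int(minutes or 0) * 60 + float(seconds or 0)


# Values copied from the "Full Restore success summary" log line.
total_take_s = parse_duration_seconds("8h42m23.354499141s")
total_kv_size_mb = 6.522 * 1_000_000   # 6.522TB, assuming decimal units
compressed_size_bytes = 1104033608541  # the [Size=...] field

# Logical KV throughput, which is what average-speed reports.
kv_speed_mb_s = total_kv_size_mb / total_take_s

# Throughput of the compressed backup data actually read from storage.
compressed_speed_mb_s = compressed_size_bytes / 1_000_000 / total_take_s

print(f"restore took {total_take_s:.0f} s")
print(f"KV speed: {kv_speed_mb_s:.1f} MB/s")
print(f"compressed data speed: {compressed_speed_mb_s:.1f} MB/s")
```

The first figure matches the 208.1MB/s in the log, so the speed is per second, not per minute. The second figure shows that the compressed data read from S3 moves much more slowly than the logical KV rate suggests.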

Starting from v8.0.0, the br command-line tool introduces the --tikv-max-restore-concurrency parameter to control the maximum number of download and ingest files per TiKV node. Additionally, by adjusting this parameter, you can control the maximum length of the job queue (maximum length of the job queue = 32 * number of TiKV nodes * --tikv-max-restore-concurrency), thereby controlling the memory consumption of the BR node. Typically, --tikv-max-restore-concurrency is automatically adjusted based on the cluster configuration and does not require manual setting. If you observe through the TiKV-Details > Backup & Import > Import RPC count monitoring metric in Grafana that the number of download files remains close to 0 for a long time while the number of ingest files is always at the upper limit, it indicates that there is a backlog of ingest file tasks and the job queue has reached its maximum length. In this case, you can take the following measures to alleviate the task backlog issue:

- Set the --ratelimit parameter to limit the download speed, ensuring that the ingest file tasks have sufficient resources. For example, if the disk throughput of any TiKV node is x MiB/s and the network bandwidth for downloading backup files is greater than x/2 MiB/s, you can set the parameter --ratelimit x/2. If the disk throughput of any TiKV node is x MiB/s and the network bandwidth for downloading backup files is less than or equal to x/2 MiB/s, you can leave the --ratelimit parameter unset.

- Increase the --tikv-max-restore-concurrency to extend the maximum length of the job queue.
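To make the two rules above concrete, here is a small sketch of the arithmetic. The function names and the example numbers (6 TiKV nodes, concurrency 128, 400 MiB/s disk, 300 MiB/s network) are hypothetical and only illustrate the formulas quoted above; they are not measured values or BR defaults.

```python
from typing import Optional


def max_job_queue_length(tikv_nodes: int, tikv_max_restore_concurrency: int) -> int:
    """Maximum job queue length = 32 * TiKV nodes * --tikv-max-restore-concurrency."""
    return 32 * tikv_nodes * tikv_max_restore_concurrency


def suggested_ratelimit(disk_mib_s: float, download_mib_s: float) -> Optional[float]:
    """Return x/2 when the download bandwidth exceeds half the disk throughput x.

    Returns None when --ratelimit can be left unset.
    """
    half_disk = disk_mib_s / 2
    if download_mib_s > half_disk:
        return half_disk
    return None


# Hypothetical cluster: 6 TiKV nodes with a concurrency of 128.
print(max_job_queue_length(6, 128))

# Hypothetical node: 400 MiB/s disk, 300 MiB/s download bandwidth.
print(suggested_ratelimit(400, 300))

# Same disk, but the network is the slower side, so no limit is needed.
print(suggested_ratelimit(400, 150))
```

Raising the concurrency lengthens the queue linearly, which also raises the memory used on the BR node, so it is worth changing in small steps.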

Is the bottleneck in the hard drive or the network speed?

Please post the restore command.

Is the backup on the local machine, NFS, S3, or somewhere else? Check the network and disk performance metrics. If there are no bottlenecks, check the restore parameter settings. For example, if there are a large number of regions, see if there are settings like --granularity="coarse-grained".
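Since the restore command has not been posted yet, here is a sketch of how the flags mentioned in this thread could be combined into one command. The PD address, bucket path, and numeric values are placeholders, not recommendations, and `build_restore_command` is a hypothetical helper. Whether each flag is available depends on the BR version, so check it against your own release first.

```python
import shlex
from typing import List, Optional


def build_restore_command(
    pd_addr: str,
    storage: str,
    granularity: Optional[str] = None,
    ratelimit_mib_s: Optional[int] = None,
    tikv_max_restore_concurrency: Optional[int] = None,
) -> List[str]:
    """Assemble argv for a full restore; optional flags are added only if set."""
    argv = ["tiup", "br", "restore", "full", "--pd", pd_addr, "--storage", storage]
    if granularity is not None:
        argv += ["--granularity", granularity]
    if ratelimit_mib_s is not None:
        argv += ["--ratelimit", str(ratelimit_mib_s)]
    if tikv_max_restore_concurrency is not None:
        argv += ["--tikv-max-restore-concurrency", str(tikv_max_restore_concurrency)]
    return argv


# Placeholder address and bucket; replace them with real values.
cmd = build_restore_command(
    pd_addr="127.0.0.1:2379",
    storage="s3://my-bucket/backup-prefix",
    granularity="coarse-grained",
    ratelimit_mib_s=200,
)
print(shlex.join(cmd))
```

Printing the command instead of running it makes it easy to review and paste into the thread before starting a restore that takes hours.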

The key here still depends on network and disk performance.

When restoring from S3, a slow restore comes down to either slow network speed or slow disk write speed.