Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: TIKV RawKV的备份和恢复,恢复时出现问题 (TiKV RawKV backup and restore: problems during restore)

Question 1: The documentation does not describe how to take a full backup of RawKV data. Is a full backup done by simply omitting the --start and --end parameters?

Question 2: In my tests so far, backups to both local disk and S3 succeed, but the restore fails in a different way for each. Restoring from remote S3 reports no error but restores 0 data; restoring from local disk reports an error.

1. Test Process - Remote S3 (Ceph)

[root@nma07-304-d19-sev-r740-2u21 tls]# /opt/TDP/tidb-community-server-v6.3.0-linux-amd64/br backup raw --pd "127.0.0.1:2379" --ca ca.crt --cert client.crt --key client.pem --ratelimit 128 --cf default --storage "s3://juicefsmetabk/test1205?endpoint=http://10.37.70.2:8081&access-key=J5PSR9YQL0TJ4BBXFTWD&secret-access-key=xQlY47EWvA2URPwcBt7ZB9d72iKK7jss8Bb5PSS5" --send-credentials-to-tikv=true

Detail BR log in /tmp/br.log.2022-12-05T09.10.54+0800

Raw Backup <----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------> 100.00%

[2022/12/05 09:10:54.908 +08:00] [INFO] [collector.go:69] ["Raw Backup success summary"] [total-ranges=6] [ranges-succeed=6] [ranges-failed=0] [backup-total-regions=6] [total-take=392.84766ms] [total-kv=20437] [total-kv-size=16.72MB] [average-speed=42.55MB/s] [backup-data-size(after-compressed)=761.2kB]

- Scan 2000 entries and save them for later comparison with the restored data

cd /root/.tiup/storage/cluster/clusters/csfl-cluster/tls/

tiup ctl:v6.3.0 tikv --ca-path ca.crt --cert-path client.crt --key-path client.pem --host 10.37.70.31:20160 --data-dir /software/tidb-data/tikv-20160 scan --from 'z' --limit 2000 --show-cf lock,default,write

- Clear all data from the cluster and restart the cluster

tiup cluster clean prod-cluster --data

tiup cluster start prod-cluster

- Import the backup data

/opt/TDP/tidb-community-server-v6.3.0-linux-amd64/br restore raw --pd "127.0.0.1:2379" --ca ca.crt --cert client.crt --key client.pem --ratelimit 128 --cf default --storage "s3://juicefsmetabk/test1205?endpoint=http://10.37.70.2:8081&access-key=J5PSR9YQL0TJ4BBXFTWD&secret-access-key=xQlY47EWvA2URPwcBt7ZB9d72iKK7jss8Bb5PSS5" --send-credentials-to-tikv=true

Raw Restore <---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------> 100.00%

[2022/12/05 14:02:28.700 +08:00] [INFO] [collector.go:69] ["Raw Restore success summary"] [total-ranges=0] [ranges-succeed=0] [ranges-failed=0] [restore-files=6] [total-take=482.410289ms] [Result="Nothing to restore"] [total-kv=20437] [total-kv-size=16.72MB] [average-speed=34.65MB/s]

The summary reports total-ranges=0 and "Nothing to restore", so no data was restored.
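Because the restore exits without an error, the only sign of the problem is in the summary line itself. A minimal sketch of pulling individual fields out of such a line is shown here; `summary_field` is a hypothetical helper name, not part of BR, and it assumes the `[name=value]` layout seen in the summary above.

```shell
# Print the value of one [name=value] field from a BR summary line.
# summary_field is a hypothetical helper, not a BR command.
summary_field() {
  # $1 = field name, $2 = full summary line
  printf '%s\n' "$2" | sed -n "s/.*\[$1=\([^]]*\)].*/\1/p"
}

# Example with a shortened copy of the restore summary above.
line='[INFO] ["Raw Restore success summary"] [total-ranges=0] [restore-files=6] [Result="Nothing to restore"]'
summary_field total-ranges "$line"
summary_field Result "$line"
```

A check such as `[ "$(summary_field total-ranges "$line")" != "0" ]` could then flag a restore that finished without restoring any range.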

- Scan 2000 entries again and compare them with the data saved earlier

tiup ctl:v6.3.0 tikv --ca-path ca.crt --cert-path client.crt --key-path client.pem --host 10.37.70.31:20160 --data-dir /software/tidb-data/tikv-20160 scan --from 'z' --limit 2000 --show-cf lock,default,write >2001

The comparison confirmed that the data with keys starting with jfs had not been restored.
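The before/after comparison can be reduced to a simple count. The sketch below assumes both scans were redirected to files; `count_prefix` and the file name `before.txt` are hypothetical (`2001` is the file used in the scan above), and the helper only counts lines that contain the given string, without parsing the scan output.

```shell
# Count the lines in a saved scan dump that contain a given key prefix.
# count_prefix is a hypothetical helper; it does plain substring matching.
count_prefix() {
  # $1 = prefix to look for, $2 = file holding the scan output
  # grep -c exits non-zero when the count is 0, so mask the exit status.
  grep -c -- "$1" "$2" || true
}

# Usage (file names are assumptions):
#   count_prefix jfs before.txt   # scan saved before the cluster was cleaned
#   count_prefix jfs 2001         # scan saved after the restore
```

If the second count is 0 while the first is not, the jfs keys were not restored.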

2. Test Process - Local Disk

- Back up to local disk in two ways for comparison: with and without the --start parameter. The official documentation does not describe a full-backup method, so the second run tries removing the --start and --end parameters.

The start value is jfs, hex-encoded as 6A6673. I am not sure this is exactly the range we need, but there is indeed data in it.
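For reference, the hex value can be derived from the prefix with standard tools. This is a minimal sketch; `to_hex` is a hypothetical helper name, and it simply hex-encodes the bytes of its argument in uppercase.

```shell
# Hex-encode a raw key prefix (uppercase) using only POSIX tools.
# to_hex is a hypothetical helper name.
to_hex() {
  printf '%s' "$1" | od -An -v -tx1 | tr -d ' \n' | tr 'a-f' 'A-F'
}

to_hex jfs; echo   # prints 6A6673
```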

/opt/TDP/tidb-community-server-v6.3.0-linux-amd64/br backup raw --pd "127.0.0.1:2379" --ca ca.crt --cert client.crt --key client.pem --start 6A6673 --ratelimit 128 --cf default --storage "local:///home/tidb/backuprawzjfs6A6673"

[2022/12/06 10:01:08.036 +08:00] [INFO] [collector.go:69] ["Raw Backup success summary"] [total-ranges=2] [ranges-succeed=2] [ranges-failed=0] [backup-total-regions=2] [total-take=84.392519ms] [backup-data-size(after-compressed)=829.5kB] [total-kv=20853] [total-kv-size=19.03MB] [average-speed=225.5MB/s]

Without the --start and --end parameters, the backup also succeeds and reports the same data volume as above:

/opt/TDP/tidb-community-server-v6.3.0-linux-amd64/br backup raw --pd "127.0.0.1:2379" --ca ca.crt --cert client.crt --key client.pem --ratelimit 128 --cf default --storage "local:///home/tidb/backupraw"

[2022/12/06 09:58:11.842 +08:00] [INFO] [collector.go:69] ["Raw Backup success summary"] [total-ranges=2] [ranges-succeed=2] [ranges-failed=0] [backup-total-regions=2] [total-take=84.355335ms] [total-kv=20853] [total-kv-size=19.03MB] [average-speed=225.6MB/s] [backup-data-size(after-compressed)=829.5kB]

- Clear all data from the cluster and restart the cluster

tiup cluster clean prod-cluster --data

tiup cluster start prod-cluster

- Import the backup data

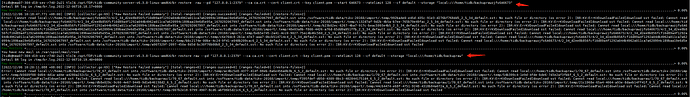

[tidb@nma07-304-d19-sev-r740-2u21 tls]$ /opt/TDP/tidb-community-server-v6.3.0-linux-amd64/br restore raw --pd "127.0.0.1:2379" --ca ca.crt --cert client.crt --key client.pem --start 6A6673 --ratelimit 128 --cf default --storage "local:///home/tidb/backuprawzjfs6A6673"

[tidb@nma07-304-d19-sev-r740-2u21 tls]$ /opt/TDP/tidb-community-server-v6.3.0-linux-amd64/br restore raw --pd "127.0.0.1:2379" --ca ca.crt --cert client.crt --key client.pem --ratelimit 128 --cf default --storage "local:///home/tidb/backupraw"