Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: BR incremental restore failed

【TiDB Usage Environment】

Production

【TiDB Version】

4.0.15

【Encountered Problem】

An incremental restore failed with a unique key conflict:

```
[2022/06/23 19:01:40.184 +08:00] [ERROR] [db.go:81] ["execute ddl query failed"] [query="ALTER TABLE supplier_environment_total ADD UNIQUE uk_key_code(key_code)"] [db=supply_chain_factory] [historySchemaVersion=2360] [error="[kv:1062]Duplicate entry '' for key 'uk_key_code'"] [errorVerbose="[kv:1062]Duplicate entry '' for key 'uk_key_code'
github.com/pingcap/errors.AddStack
	github.com/pingcap/errors@v0.11.5-0.20201126102027-b0a155152ca3/errors.go:174
github.com/pingcap/errors.Trace
	github.com/pingcap/errors@v0.11.5-0.20201126102027-b0a155152ca3/juju_adaptor.go:15
github.com/pingcap/tidb/ddl.(*ddl).doDDLJob
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/ddl/ddl.go:578
github.com/pingcap/tidb/ddl.(*ddl).CreateIndex
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/ddl/ddl_api.go:4034
github.com/pingcap/tidb/ddl.(*ddl).AlterTable
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/ddl/ddl_api.go:2117
github.com/pingcap/tidb/executor.(*DDLExec).executeAlterTable
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/executor/ddl.go:366
github.com/pingcap/tidb/executor.(*DDLExec).Next
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/executor/ddl.go:86
github.com/pingcap/tidb/executor.Next
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/executor/executor.go:262
github.com/pingcap/tidb/executor.(*ExecStmt).handleNoDelayExecutor
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/executor/adapter.go:531
github.com/pingcap/tidb/executor.(*ExecStmt).handleNoDelay
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/executor/adapter.go:413
github.com/pingcap/tidb/executor.(*ExecStmt).Exec
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/executor/adapter.go:366
github.com/pingcap/tidb/session.runStmt
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/session/tidb.go:322
github.com/pingcap/tidb/session.(*session).ExecuteStmt
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/session/session.go:1381
github.com/pingcap/tidb/session.(*session).ExecuteInternal
	github.com/pingcap/tidb@v1.1.0-beta.0.20210714111333-67b641d5036c/session/session.go:1132
github.com/pingcap/br/pkg/gluetidb.(*tidbSession).Execute
	github.com/pingcap/br@/pkg/gluetidb/glue.go:109
github.com/pingcap/br/pkg/restore.(*DB).ExecDDL
	github.com/pingcap/br@/pkg/restore/db.go:79
github.com/pingcap/br/pkg/restore.(*Client).ExecDDLs
	github.com/pingcap/br@/pkg/restore/client.go:500
github.com/pingcap/br/pkg/task.RunRestore
	github.com/pingcap/br@/pkg/task/restore.go:292
main.runRestoreCommand
	github.com/pingcap/br@/cmd/br/restore.go:25
main.newFullRestoreCommand.func1
	github.com/pingcap/br@/cmd/br/restore.go:97
github.com/spf13/cobra.(*Command).execute
	github.com/spf13/cobra@v1.0.0/command.go:842
github.com/spf13/cobra.(*Command).ExecuteC
	github.com/spf13/cobra@v1.0.0/command.go:950
github.com/spf13/cobra.(*Command).Execute
	github.com/spf13/cobra@v1.0.0/command.go:887
main.main
	github.com/pingcap/br@/cmd/br/main.go:56
runtime.main
	runtime/proc.go:203
runtime.goexit
	runtime/asm_amd64.s:1357"] [stack="github.com/pingcap/br/pkg/restore.(*DB).ExecDDL
	github.com/pingcap/br@/pkg/restore/db.go:81
github.com/pingcap/br/pkg/restore.(*Client).ExecDDLs
	github.com/pingcap/br@/pkg/restore/client.go:500
github.com/pingcap/br/pkg/task.RunRestore
	github.com/pingcap/br@/pkg/task/restore.go:292
main.runRestoreCommand
	github.com/pingcap/br@/cmd/br/restore.go:25
main.newFullRestoreCommand.func1
	github.com/pingcap/br@/cmd/br/restore.go:97
github.com/spf13/cobra.(*Command).execute
	github.com/spf13/cobra@v1.0.0/command.go:842
github.com/spf13/cobra.(*Command).ExecuteC
	github.com/spf13/cobra@v1.0.0/command.go:950
github.com/spf13/cobra.(*Command).Execute
	github.com/spf13/cobra@v1.0.0/command.go:887
main.main
	github.com/pingcap/br@/cmd/br/main.go:56
runtime.main
	runtime/proc.go:203"]
```

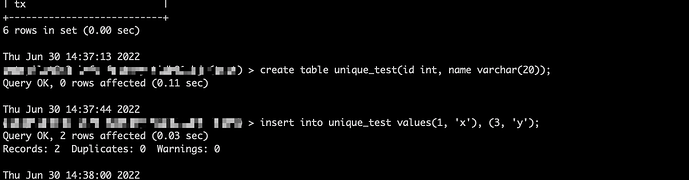

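After investigating, I found that during a restore BR executes the DDL first and only then restores the data. This order is fine for a full backup restore, but it breaks an incremental backup restore. For example, suppose the following happens to one table:

- t1: a DDL adds a column with a default value of 1
- t2: the default values in that column are modified to eliminate duplicates
- t3: a unique index is added on the column

Time relationship: t1 < t2 < t3.

If all the DDL is executed first (add the column, then add the unique index) and the data is restored afterward, the unique index is built while every row still holds the same default value, because the t2 changes that removed the duplicates have not been restored yet. The ADD UNIQUE statement therefore fails with a unique key conflict.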

Regarding BR incremental restore: I read an article stating that it is a GA (generally available) feature in 4.x and 5.x but was changed to an experimental feature in 6.x. Was it downgraded because a bug like this one was found?
