Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: br restore数据,TIKV某节点数据严重不均衡

[TiDB Usage Environment] Production Environment

[TiDB Version]

Cluster version: v4.0.9

[Encountered Problem: Phenomenon and Impact]

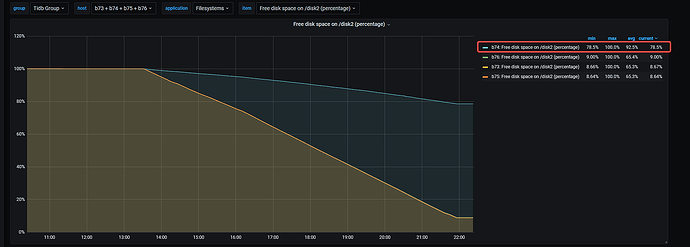

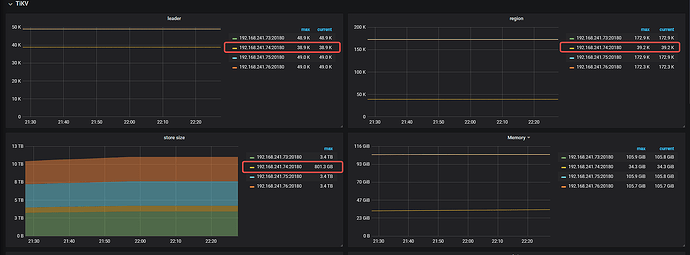

I deployed a new TiDB cluster, backed up the data from the old cluster with BR, and am restoring it to the new cluster. During the restore, I found that the amount of data stored on one TiKV node is severely unbalanced compared with the other nodes.

As shown in the figure below, the 192.168.241.74 node holds very few regions. All of these TiKV nodes have exactly the same hardware, including storage configuration, so it is strange that this happens. How should I troubleshoot this issue? Thank you~

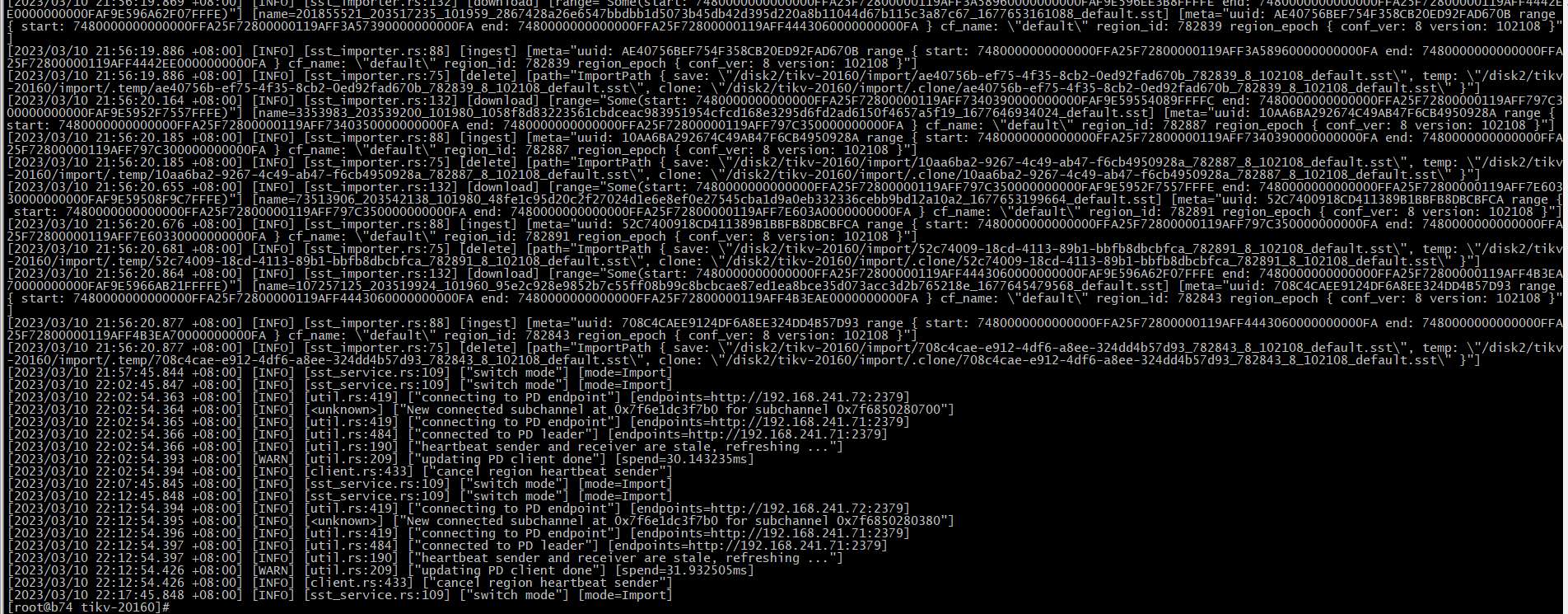
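To put numbers on the imbalance shown above, the per-store region counts can also be compared from the JSON that `pd-ctl store` prints. The following is only a minimal sketch: it assumes the output has the usual `stores[].store.address` and `stores[].status.region_count` fields, and the function name, the 0.5 threshold, and the sample values are made up for illustration, not taken from this cluster.

```python
import json
from statistics import median


def find_underloaded_stores(pd_store_json, ratio=0.5):
    """Return (address, region_count) pairs for stores whose region count
    is below `ratio` times the median region count of all stores.

    `pd_store_json` is assumed to be the JSON text printed by `pd-ctl store`.
    """
    stores = json.loads(pd_store_json)["stores"]
    # Map each store address to its region count (0 if the field is absent).
    counts = {
        s["store"]["address"]: s["status"].get("region_count", 0)
        for s in stores
    }
    mid = median(counts.values())
    return sorted((addr, n) for addr, n in counts.items() if n < ratio * mid)


if __name__ == "__main__":
    # Hypothetical sample: the addresses other than 192.168.241.74 and all
    # of the counts are placeholders, not real values from the cluster.
    sample = json.dumps({
        "count": 4,
        "stores": [
            {"store": {"id": 1, "address": "10.0.0.1:20160"},
             "status": {"region_count": 1000}},
            {"store": {"id": 2, "address": "10.0.0.2:20160"},
             "status": {"region_count": 980}},
            {"store": {"id": 3, "address": "10.0.0.3:20160"},
             "status": {"region_count": 1010}},
            {"store": {"id": 4, "address": "192.168.241.74:20160"},
             "status": {"region_count": 50}},
        ],
    })
    for addr, n in find_underloaded_stores(sample):
        print(addr, n)
```

Comparing against the median rather than the mean keeps a single outlier store from shifting the baseline it is measured against.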

Below is a bit of the TiKV log from this node: