CREATE TABLE `perio_art_project` (
  `record_id` int(11) DEFAULT NULL,
  `article_id` varchar(255) DEFAULT NULL,
  `project_seq` int(11) DEFAULT NULL,
  `project_id` varchar(255) DEFAULT NULL,
  `project_name` longtext DEFAULT NULL,
  `batch_id` int(11) DEFAULT NULL,
  `primary_partition` int(4) GENERATED ALWAYS AS ((crc32(`article_id`)) % 9999) STORED NOT NULL,
  `last_modify_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  `spark_update_time` datetime DEFAULT NULL,
  KEY `article_id` (`article_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin;
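For reference, the stored generated column `primary_partition` is `crc32(article_id) % 9999`. Below is a minimal Scala sketch of that arithmetic. It assumes that `crc32` here is the standard CRC-32 checksum (the one `java.util.zip.CRC32` computes) and that `article_id` is hashed as its UTF-8 bytes, which matches the table's `utf8` charset. The object and method names are hypothetical and are not part of TiDB or TiSpark.

```scala
import java.nio.charset.StandardCharsets
import java.util.zip.CRC32

// Hypothetical helper: mirrors the generated-column expression
//   crc32(`article_id`) % 9999
// under the assumption that crc32 is standard CRC-32 over UTF-8 bytes.
object PrimaryPartition {
  def primaryPartition(articleId: String): Long = {
    val crc = new CRC32()
    crc.update(articleId.getBytes(StandardCharsets.UTF_8))
    // getValue returns the unsigned 32-bit checksum as a Long,
    // so the result of the modulo is always in the range 0..9998.
    crc.getValue % 9999
  }
}
```

Calling `PrimaryPartition.primaryPartition(someArticleId)` on the Spark side should give the value the database stores for that row, provided the assumptions above hold. A NULL `article_id` is not handled here; in the table it would produce a NULL that the `NOT NULL` constraint rejects.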

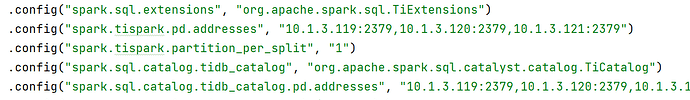

sparkSession
  .sql(
    """select * from tidb_catalog.qk_chi.perio_art_project
      |""".stripMargin)
  .groupBy("project_name")
  .count()
  .explain()

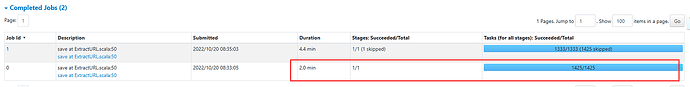

== 2.5 Physical Plan ==

AdaptiveSparkPlan isFinalPlan=false
+- HashAggregate(keys=[project_name#7], functions=[count(1)])
   +- Exchange hashpartitioning(project_name#7, 8192), true, [id=#14]
      +- HashAggregate(keys=[project_name#7], functions=[partial_count(1)])
         +- TiKV CoprocessorRDD{[table: perio_art_project] TableScan, Columns: project_name@VARCHAR(4294967295), KeyRange: [([t\200\000\000\000\000\000\003\017_r\000\000\000\000\000\000\000\000], [t\200\000\000\000\000\000\003\017_s\000\000\000\000\000\000\000\000])], startTs: 437048830927044635} EstimatedCount:18072492

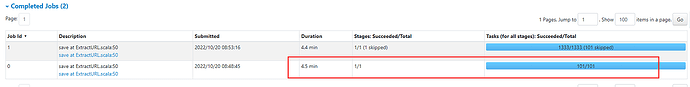

== 3.x Physical Plan ==

AdaptiveSparkPlan isFinalPlan=false
+- HashAggregate(keys=[project_name#7], functions=[specialsum(count(1)#34L, LongType, 0)])
   +- Exchange hashpartitioning(project_name#7, 8192), true, [id=#13]
      +- HashAggregate(keys=[project_name#7], functions=[partial_specialsum(count(1)#34L, LongType, 0)])
         +- TiSpark RegionTaskExec{downgradeThreshold=1000000000,downgradeFilter=[]
            +- TiKV FetchHandleRDD{[table: perio_art_project] IndexLookUp, Columns: project_name@VARCHAR(4294967295): { {IndexRangeScan(Index:article_id(article_id)): { RangeFilter: [], Range: [([t\200\000\000\000\000\000\003\017_i\200\000\000\000\000\000\000\001\000], [t\200\000\000\000\000\000\003\017_i\200\000\000\000\000\000\000\001\372])] }}; {TableRowIDScan, Aggregates: Count(1), First(project_name@VARCHAR(4294967295)), Group By: [project_name@VARCHAR(4294967295) ASC]} }, startTs: 437048801724203020}