Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: Can a store that has entered the Tombstone state be added back to the TiDB cluster?


【TiDB Environment】TiDB cluster on Kubernetes (k8s)

【TiDB Version】4.0.16

【Background】

While scaling in TiKV, two stores stayed stuck with a leader count and region count of 1 for more than half an hour. We manually forced stores 1002 and 1004 into the Tombstone state using:

curl -X POST 'http://{pd_ip}:{pd_port}/pd/api/v1/store/{store_id}/state?state=Tombstone'

Afterwards, we found that region 10353493 was in an abnormal state: one peer is a learner, and the other two peers are unreachable because their stores were set to Tombstone. The TiDB cluster's Ready status is still true. We tried to bring store 1002 back up using:

curl -X POST 'http://{pd_ip}:{pd_port}/pd/api/v1/store/{store_id}/state?state=Up'

We also tried scaling the TiKV replicas out again to create a new TiKV node that mounts the data of store 1002, but this seems to have had no effect: the TiKV StatefulSet was not modified accordingly.

Abnormal region status, as reported by ./pd-ctl region 10353493:

{
  "id": 10353493,
  "start_key": "7480000000000000FF175F728000000000FF15D0A10000000000FA",
  "end_key": "7480000000000000FF175F728000000000FF15D1720000000000FA",
  "epoch": {
    "conf_ver": 269722,
    "version": 1216
  },
  "peers": [
    {
      "id": 10385018,
      "store_id": 1002
    },
    {
      "id": 10389925,
      "store_id": 1004
    },
    {
      "id": 10344516,
      "store_id": 1013,
      "is_learner": true
    }
  ],
  "leader": {
    "id": 10385018,
    "store_id": 1002
  },
  "down_peers": [
    {
      "peer": {
        "id": 10344516,
        "store_id": 1013,
        "is_learner": true
      },
      "down_seconds": 300
    }
  ],
  "pending_peers": [
    {
      "id": 10344516,
      "store_id": 1013,
      "is_learner": true
    }
  ],
  "written_bytes": 0,
  "read_bytes": 0,
  "written_keys": 0,
  "read_keys": 0,
  "approximate_size": 1,
  "approximate_keys": 0
}
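The quorum problem in this output can be made explicit with a small check. The following is a minimal Python sketch, assuming the pd-ctl region JSON above has been loaded into a dict and that stores 1002 and 1004 are treated as unavailable because they were forced to Tombstone; the function name `quorum_status` is hypothetical and not part of pd-ctl or PD. It counts only non-learner peers as voters, since Raft learners do not vote.

```python
from typing import Iterable


def quorum_status(region: dict, unavailable_stores: Iterable[int]) -> dict:
    """Report whether a region's voters can still form a Raft majority.

    A peer counts as unavailable if its store is in `unavailable_stores`
    (e.g. stores forced to Tombstone) or if PD lists it under `down_peers`.
    Learners are reported separately because they do not vote.
    """
    bad_stores = set(unavailable_stores)
    down_peer_ids = {d["peer"]["id"] for d in region.get("down_peers", [])}

    voters = [p for p in region["peers"] if not p.get("is_learner", False)]
    learners = [p for p in region["peers"] if p.get("is_learner", False)]

    healthy = [
        p for p in voters
        if p["store_id"] not in bad_stores and p["id"] not in down_peer_ids
    ]
    needed = len(voters) // 2 + 1  # Raft majority of the voting members
    return {
        "voters": len(voters),
        "healthy_voters": len(healthy),
        "needed": needed,
        "has_quorum": len(healthy) >= needed,
        "learner_stores": [p["store_id"] for p in learners],
    }


# Peer information copied from the pd-ctl output above (other fields omitted).
region_10353493 = {
    "id": 10353493,
    "peers": [
        {"id": 10385018, "store_id": 1002},
        {"id": 10389925, "store_id": 1004},
        {"id": 10344516, "store_id": 1013, "is_learner": True},
    ],
    "down_peers": [
        {"peer": {"id": 10344516, "store_id": 1013, "is_learner": True},
         "down_seconds": 300},
    ],
}

status = quorum_status(region_10353493, unavailable_stores={1002, 1004})
print(status)
# {'voters': 2, 'healthy_voters': 0, 'needed': 2, 'has_quorum': False, 'learner_stores': [1013]}
```

With only two voters (on stores 1002 and 1004) and both gone, the region needs 2 healthy voters and has 0, so no leader can be elected. The learner on store 1013 cannot fill the gap because it does not vote, and PD also lists it as down and pending.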

【Business Impact】

Running either select * from mysql.stats_histograms or select * from information_schema.tables returns the following error:

(1105, 'no available peers, region: {id:10353493 start_key:"t\200\000\000\000\000\000\000\027_r\200\000\000\000\000\025\320\241" end_key:"t\200\000\000\000\000\000\000\027_r\200\000\000\000\000\025\321r" region_epoch:<conf_ver:269722 version:1216 > peers:<id:10385018 store_id:1002 > peers:<id:10389925 store_id:1004 > }')

Backups with BR also fail.

【Question】

I would like to ask:

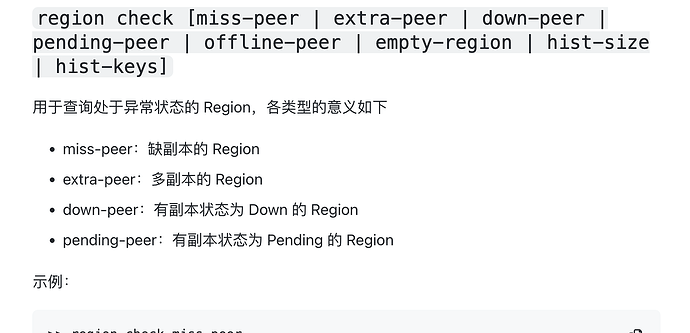

Two of the region's three peers are on Tombstone stores, and the third is a learner. Is it possible to recover the region from the Tombstone stores, or to remove the region directly?

Can the region be removed directly (e.g., by dropping the table)?

The region holds data from mysql.stats_histograms, so losing it should not affect other data.
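Which table the region belongs to can be cross-checked from the region boundaries alone. The following minimal Python sketch assumes TiDB's usual record-key layout (`t` + table ID + `_r` + integer row handle) and that pd-ctl prints region keys in TiKV's memcomparable byte encoding (8-byte groups, each followed by a marker byte); the helper names are hypothetical. It decodes the hex start_key and end_key from the pd-ctl output into a table ID and a row-handle range.

```python
from typing import Tuple


def decode_memcomparable_bytes(data: bytes) -> bytes:
    """Undo memcomparable byte encoding: 8-byte groups plus 1 marker byte each."""
    out = bytearray()
    i = 0
    while True:
        if i + 9 > len(data):
            raise ValueError("truncated memcomparable key")
        group, marker = data[i:i + 8], data[i + 8]
        pad = 0xFF - marker  # number of zero padding bytes in this group
        if pad > 8:
            raise ValueError("invalid group marker")
        out += group[:8 - pad]
        i += 9
        if pad > 0:  # a padded group terminates the encoded value
            return bytes(out)


def decode_int(b: bytes) -> int:
    """Decode a comparable big-endian int64 whose sign bit has been flipped."""
    v = int.from_bytes(b, "big") ^ (1 << 63)
    return v - (1 << 64) if v >= (1 << 63) else v


def decode_region_key(hex_key: str) -> Tuple[int, int]:
    """Return (table_id, int_handle) for a record key as printed by pd-ctl."""
    raw = decode_memcomparable_bytes(bytes.fromhex(hex_key))
    if len(raw) != 19 or raw[:1] != b"t" or raw[9:11] != b"_r":
        raise ValueError("not a record key with an integer handle")
    return decode_int(raw[1:9]), decode_int(raw[11:19])


start = decode_region_key("7480000000000000FF175F728000000000FF15D0A10000000000FA")
end = decode_region_key("7480000000000000FF175F728000000000FF15D1720000000000FA")
print(start, end)  # (23, 1429665) (23, 1429874)
```

Both boundaries decode to table ID 23, which agrees with the `\027` (octal 23) byte in the key printed in the error message. Whether table ID 23 is mysql.stats_histograms on this cluster still has to be confirmed against TiDB's own schema information.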

Thank you.