Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 不修改tikv_gc_life_time,br工具能否实现任意时间点增量备份

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version]

[Reproduction Path] What operations were performed that led to the issue

[Encountered Issue: Issue Phenomenon and Impact]

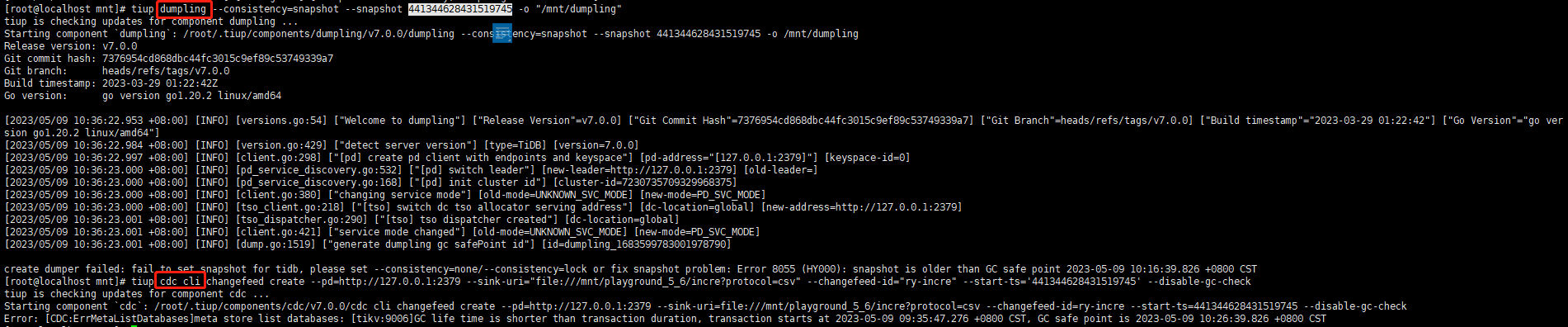

Because tikv_gc_life_time defaults to 10 minutes, if I perform a full backup at 9:00 and then plan to do an incremental backup at 9:20, specifying the previous backup time point as the timestamp of the full backup at 9:00, the incremental backup will fail because the GC safepoint has exceeded the timestamp of the full backup. Turning off GC would generate too much garbage data. Is it possible to use the BR tool to achieve incremental backups at any point in time without modifying tikv_gc_life_time?

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

Log backup: After snapshot backup, record all change logs. When restoring, you can specify a point in time to restore.

Thank you, it’s a method, but what I mainly want to ask is whether this GC issue can only be avoided by modifying tikv_gc_life_time.

Thank you. I mainly want to know if this GC issue can only be avoided by modifying tikv_gc_life_time.

Yes, but the previous versions of BR incremental backup have always been experimental features and are not recommended for use in production environments. Moreover, they will no longer be iterated in the future.

Alright, thanks. So because of this GC issue, I can’t trigger a log backup at any point in time, right?

Log backups are generally kept enabled continuously, and you just need to regularly clean up the expired ones.

Dumpling and CDC will also be blocked by this GC issue.

Regarding incremental backup and the GC mechanism, I have one more question. Since incremental backup only backs up the changed data from the last backup completion to the current time point, no matter how many times GC has been performed during this period, it should be considered “changed data.” Why is it that if the timestamp of the last backup exceeds the GC safepoint, incremental backup cannot be performed? Is there an inherent connection between the two?

If the historical versions of the data have all been garbage collected, then incremental backups are definitely not possible. For example, if you want to back up a full backup from a year ago to the present incrementally, there is definitely no place to find it. However, using log backups does not have this problem and is unrelated to garbage collection.

TiDB’s backup is a logical backup/restore. After GC, the data before the safepoint is gone, and without this data, it is impossible to “roll forward.” Unlike Oracle, which performs physical-level backups by data blocks.

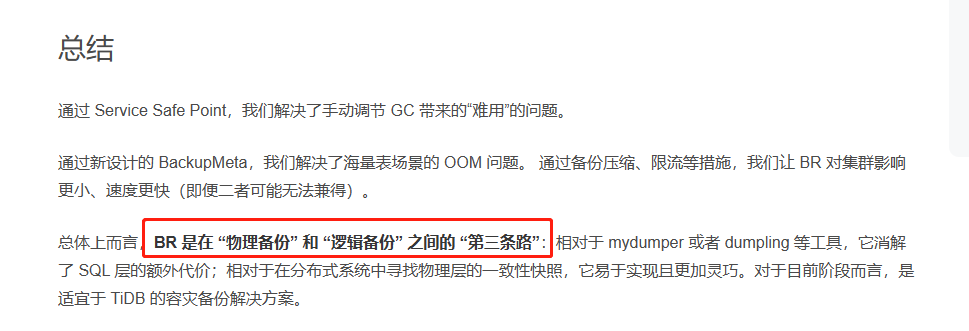

Huh? Is BR a logical backup? I saw the official blog describing it this way.

Backup “Operator Pushdown”: Introduction to TiDB BR | PingCAP

What do you mean? A full backup was done at 9:00, and the incremental backup at 9:20 will fail because the GC setting is only 10 minutes?

Sorry, maybe I didn’t describe it clearly. The direct cause of the incremental backup failure is not tikv_gc_life_time=10min, but because tikv_gc_life_time=10min causes the safe point to update every ten minutes. Therefore, when performing an incremental backup at 9:20, the safe time must have exceeded the timestamp of the full backup (this is the direct cause of the incremental backup failure).

Is BR not being updated anymore?

Only incremental backups have been replaced with log backups.

Once again, I would like to ask how to understand this “third way between physical and logical”?