Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

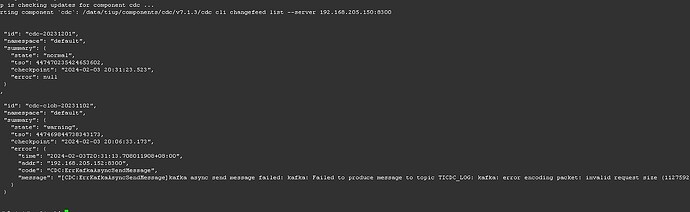

Original topic: cdc to kafka: error encoding packet: invalid request size

Bug Report


[TiDB Version] V7.1.3

[Impact of the Bug]

The error occurred in a changefeed that replicates two tables with large fields. After upgrading from v7.1.1 to v7.1.3, the error shown in the image below appeared; it did not occur in v7.1.1. Message size does not seem to be the cause, because the Kafka message size limit is far larger than the size reported in the error.

[Possible Steps to Reproduce the Issue]

Upgrade from v7.1.1 to v7.1.3 with tables that contain longtext columns, which can produce large messages.

[Additional Background Information or Screenshots]

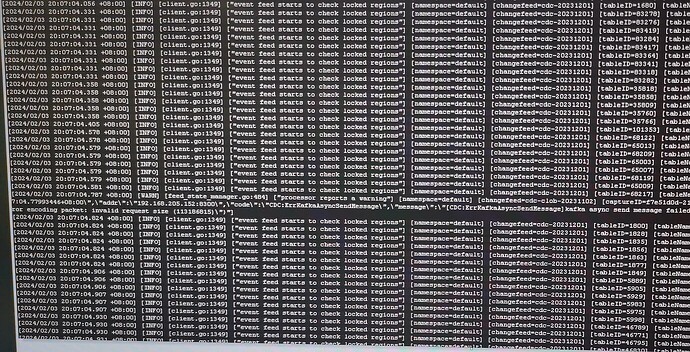

The logs do not provide more information:

How about increasing the Kafka message size?

The Kafka message size limit is more than twice the size reported in the error, so this parameter doesn’t seem to be the cause.
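A minimal sketch of the comparison described above: check the size reported in the error against each configured limit. The setting names (`max-message-bytes` in the TiCDC sink URI, `message.max.bytes` on the broker, `max.message.bytes` on the topic) are existing options, but the function name and every numeric value here are hypothetical placeholders, not values from this cluster.

```python
def exceeded_limits(reported_size, limits):
    """Return the names of all limits that reported_size exceeds, smallest limit first."""
    over = [(value, name) for name, value in limits.items() if reported_size > value]
    return [name for _, name in sorted(over)]


# Hypothetical values for illustration only; read the real ones from your deployment.
limits = {
    "TiCDC sink URI max-message-bytes": 64 * 1024 * 1024,
    "Kafka broker message.max.bytes": 64 * 1024 * 1024,
    "Kafka topic max.message.bytes": 64 * 1024 * 1024,
}
reported = 30 * 1024 * 1024  # hypothetical size taken from the error message

# An empty result means none of these limits explains the error.
print(exceeded_limits(reported, limits))
```

If the result is empty, as the poster observed, the rejection is probably coming from some other check than these per-message limits.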

Is there anything in the Kafka logs?

+1. The details behind a TiCDC alert are often in the logs, which give richer context.

You can’t tell from this alone; check the CDC logs for details.

Could you please provide the CDC and Kafka logs?

I have added the CDC logs. Kafka hasn’t been touched, so it shouldn’t be a Kafka issue.

During the upgrade, no adjustments were made to the Kafka configuration, so it should not be a Kafka issue.

Will this error continue to occur after restarting the changefeed?

Your guess is correct; it is most likely caused by the large message size.

I didn’t test that, and the failing environment is no longer available.

How was it finally resolved?

It probably hasn’t been resolved yet… Let’s wait and see…

If you haven’t changed the Kafka configuration and the synchronization was normal before the TiDB upgrade but errors occurred after the upgrade, it is recommended to check whether Kafka is functioning properly. During the upgrade phase, check if there were any changes in the table structure or if new tables were added for synchronization. Also, check the corresponding topics.

Before the upgrade, synchronization was normal, but after the upgrade, an error occurred. It is recommended to check if Kafka is functioning properly.

Knowing that large fields are prone to issues, I deliberately created a separate changefeed for the tables with large fields; all other tables share a single changefeed. That second changefeed covers many tables and runs normally, and no Kafka parameters were changed, so it should not be a Kafka issue. More likely, a message size limit was introduced somewhere in the upgrade.

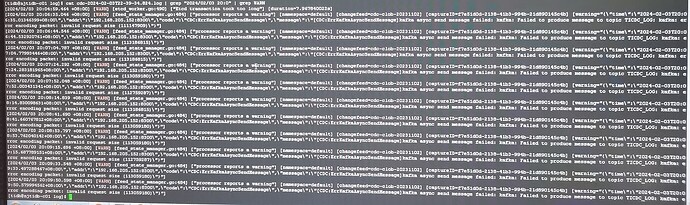

The issue has recurred, and restarting does not help.

Both changefeeds use the same upstream TiDB cluster and the same downstream Kafka; the only difference is the replication task. This indicates that the upstream TiDB cluster and the downstream Kafka are functioning normally.

The changefeed created separately for the tables with large fields fails, whereas the changefeed for the many tables without large fields runs normally. This indicates that the problem is triggered by large fields. The error logs also show that TiCDC fails when sending messages to the downstream Kafka because of an invalid request size, so the messages cannot be produced.

Therefore, there is probably an internal check that enforces a size threshold. You can look at the source code to find which parameter controls it and then adjust it accordingly.
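As a rough illustration of what such an internal check could look like, here is a minimal sketch of a client-side guard on the encoded request size. This is not TiCDC's or any Kafka client's actual code: `encode_request`, `MAX_REQUEST_SIZE`, and the tiny threshold are all hypothetical, chosen only to show how a request can be rejected even when every single message is below the per-message limit.

```python
class PacketEncodingError(Exception):
    """Raised when an encoded request fails the size check (hypothetical)."""


MAX_REQUEST_SIZE = 100  # hypothetical threshold in bytes, kept tiny for the demo


def encode_request(messages, max_request_size=MAX_REQUEST_SIZE):
    """Length-prefix each message, join them, and reject oversized requests."""
    payload = b"".join(len(m).to_bytes(4, "big") + m for m in messages)
    if len(payload) > max_request_size:
        raise PacketEncodingError(f"invalid request size ({len(payload)})")
    return payload


# One 60-byte message fits: 4-byte length prefix + 60 bytes = 64 bytes.
single = encode_request([b"x" * 60])

# Two such messages batched into one request (128 bytes) exceed the threshold.
try:
    encode_request([b"x" * 60, b"y" * 60])
except PacketEncodingError as err:
    print(err)
```

If the real check works on the whole request like this, comparing the reported size against the per-message limit alone would not reveal the cause; the request-level threshold would be the parameter to look for in the source.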