I keep getting a “connection reset by peer” error for a query that runs on TiFlash.

I run this query about three times an hour as a cron job. I often see this error in my error-monitoring tool, and the job fails when it happens.

When I run it manually, it usually takes 100 to 300 ms.

But when the job fails, the query sometimes shows up in the Slow Queries section. According to that page, one recent failure took 11 s.

The table is set up with 2 TiFlash replicas.

A quick check of information_schema.tiflash_replica says the replicas are available (AVAILABLE: 1, PROGRESS: 1).
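A query along these lines returns the replica status for the table. Besides AVAILABLE and PROGRESS, the view has a LOCATION_LABELS column, which (as far as I know) only holds labels set through ALTER TABLE ... SET TIFLASH REPLICA ... LOCATION LABELS, not a cloud region. The schema name ca_server_db is taken from the slow-query plan further down.

```sql
-- Replica status for the table; schema name taken from the slow-query plan.
SELECT TABLE_SCHEMA, TABLE_NAME, REPLICA_COUNT, LOCATION_LABELS, AVAILABLE, PROGRESS
FROM information_schema.tiflash_replica
WHERE TABLE_SCHEMA = 'ca_server_db'
  AND TABLE_NAME = 'title_user_score';
```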

The table is indexed, but because the query runs on TiFlash, it does a full scan of all 15,973 rows currently in the table (which is not really “a lot”).

A simple COUNT on the same table also took around 247 ms.

I expected a COUNT over just 15,973 rows to be faster on TiFlash.

An EXPLAIN ANALYZE in Chat2Query shows an execution time of about 123.8 ms for the COUNT (a more realistic number).

But the Query Log shows a higher total duration for the query: 247 ms, which for some reason is almost exactly twice 123.8 ms.

Repeated executions show similar numbers.
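To compare the TiFlash timing with a TiKV read of the same COUNT, the two statements below can be run back to back. READ_FROM_STORAGE is a TiDB optimizer hint; that it takes effect the same way on TiDB Cloud Serverless is assumed here, not verified.

```sql
-- COUNT as the optimizer plans it today (presumably on TiFlash, as in the numbers above).
EXPLAIN ANALYZE SELECT COUNT(*) FROM title_user_score;

-- Same COUNT, with a hint asking TiDB to read the table from TiKV instead.
EXPLAIN ANALYZE SELECT /*+ READ_FROM_STORAGE(TIKV[title_user_score]) */ COUNT(*)
FROM title_user_score;
```

If the TiKV variant shows a similar gap between execution time and the Query Log duration, the extra time is probably not TiFlash-specific.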

Could this be related to location?

Do I need to place the TiFlash replicas in the same region as the TiDB cluster?

How can I check where the TiFlash replicas are located? information_schema.tiflash_replica doesn’t show anything about that.

I couldn’t find much information about this, especially for TiDB Cloud Serverless.

Application environment:

TiDB Cloud Serverless tier (production)

TiDB version:

v7.1.1

Reproduction method:

This is the query I’m running. It just fetches the top 200 scores from a table:

```sql
SELECT
    *
FROM
    `title_user_score`
WHERE
    `title_user_score`.`title_score_id` = ?
    AND `title_user_score`.`score` > ?
ORDER BY
    `title_user_score`.`score` DESC
LIMIT
    ?;
```
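For diagnosis, the same statement can be sent to TiKV with the READ_FROM_STORAGE optimizer hint, which lets TiDB consider the table’s indexes instead of a TiFlash full scan. This is a sketch, not a confirmed fix: the literal values (title_score_id = 1, score > 0, LIMIT 100) are the ones visible in the slow-query plan further down, and whether the optimizer then uses an index depends on which columns that index covers.

```sql
-- Same query, but asking the optimizer to read from TiKV instead of TiFlash.
-- Literal values come from the slow-query plan (title_score_id = 1, score > 0, LIMIT 100).
SELECT /*+ READ_FROM_STORAGE(TIKV[title_user_score]) */
    *
FROM
    `title_user_score`
WHERE
    `title_user_score`.`title_score_id` = 1
    AND `title_user_score`.`score` > 0
ORDER BY
    `title_user_score`.`score` DESC
LIMIT
    100;
```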

Problem:

I get errors like this one:

```
error returned from database: 1105 (HY000): rpc error: code = Unavailable desc = error reading from server: read tcp 10.0.109.108:50028->10.0.113.162:3930: read: connection reset by peer
```
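The remote address in the error ends in port 3930, which is TiFlash’s default service port, so the reset appears to happen on the TiDB-to-TiFlash leg rather than between my client and TiDB. One way to check whether the failures go away without TiFlash is to restrict the cron job’s session to TiKV through the tidb_isolation_read_engines system variable; that this variable can be changed this way on Serverless is an assumption.

```sql
-- Restrict this session to TiKV (plus TiDB for memory/system tables), so no TiFlash replica is read.
SET SESSION tidb_isolation_read_engines = 'tikv,tidb';

-- ...run the cron job's query here...

-- Restore the default afterwards, in case the connection is pooled and reused.
SET SESSION tidb_isolation_read_engines = 'tikv,tiflash,tidb';
```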

Attachment:

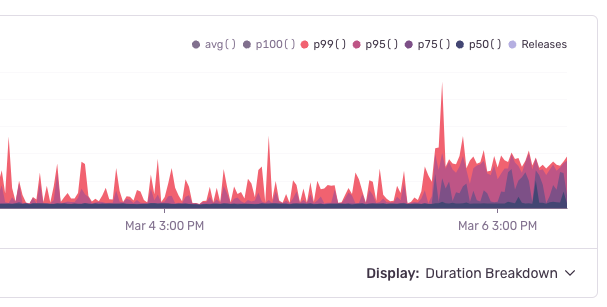

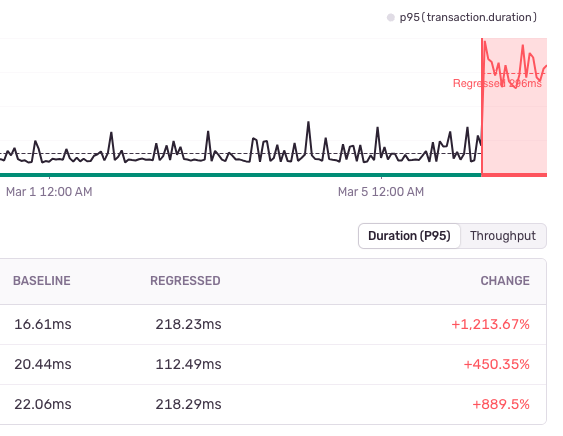

One of the recent slow queries:

Query Time: 11.0 s

(Every other timing is in µs or ns, and most of them are 0, which points to a connection problem rather than a badly optimized query.)

Is Success? No

Max Memory: 4.9 KiB

Plan:

| id | task | estRows | operator info | actRows | execution info | memory | disk |
|---|---|---|---|---|---|---|---|
| TopN_10 | root | 100 | ca_server_db.title_user_score.score:desc, offset:0, count:100 | 0 | time:10.8s, loops:1 | 0 Bytes | N/A |
| └─TableReader_22 | root | 100 | MppVersion: 1, data:ExchangeSender_21 | 0 | time:10.8s, loops:1 | 4.87 KB | N/A |
| └─ExchangeSender_21 | cop[tiflash] | 100 | ExchangeType: PassThrough | 0 | N/A | N/A | |
| └─TopN_20 | cop[tiflash] | 100 | ca_server_db.title_user_score.score:desc, offset:0, count:100 | 0 | N/A | N/A | |
| └─Selection_19 | cop[tiflash] | 7539.86 | gt(ca_server_db.title_user_score.score, 0) | 0 | N/A | N/A | |
| └─TableFullScan_18 | cop[tiflash] | 7539.86 | table:title_user_score, pushed down filter:eq(ca_server_db.title_user_score.title_score_id, 1), keep order:false | 0 | N/A | N/A | |
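If information_schema.slow_query is readable on this tier (an assumption; the numbers above come from the console’s slow-query page), a query like this lists the failed runs touching this table together with their timings.

```sql
-- Failed executions that mention title_user_score, most recent first.
SELECT `Time`, `Query_time`, `Succ`, `Mem_max`, `Query`
FROM information_schema.slow_query
WHERE `Succ` = 0
  AND `Query` LIKE '%title_user_score%'
ORDER BY `Time` DESC
LIMIT 10;
```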