Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 持续执行ANALYZE TABLE好几天

[TiDB Usage Environment] Production Environment

[TiDB Version] 5.2.2

[Reproduction Path] Operations performed that led to the issue

[Encountered Issue: Issue Phenomenon and Impact]

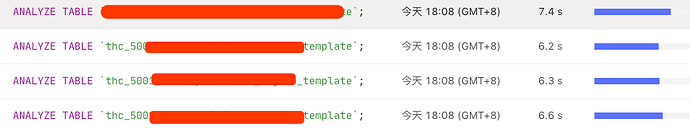

Observed a slow log continuously executing a SQL statement for several days without completion:

ANALYZE TABLE thc_5001_democqzs.form_engine_template;

[Resource Configuration] Mixed resource deployment

[Attachment: Screenshot/Log/Monitoring]

Is this an automatically executed job?

I’ve encountered this before, where the automatic execution for large tables keeps failing, but manual execution is quick. You might want to try running it manually to see.

Okay, I’ll give it a try.

Analyze table is generally very fast (even for tables with billions of rows) because analyze uses a sampling ratio, not a full table scan.

If the table is large, it is recommended to collect manually. You can use WITH FLOAT_NUM SAMPLERATE to analyze a small sample of data. If there are many large tables in the entire database and you do not want to incur too much overhead from collecting statistics, you can set tidb_enable_fast_analyze to 1 to enable the fast analyze feature. However, this is not recommended. Instead, you can increase the automatic threshold by adjusting tidb_auto_analyze_ratio and then deploy manual tasks to perform small sample analysis on large tables.

The automatic analyze is slow because it runs in a single thread. Is the data in this table changing frequently?

Not frequently, we provide private deployment for our customers. After the initial MySQL synchronization to TiDB, it continuously executes analyze automatically.

Okay, thanks, I’ll try this parameter.

The automatic running of the large table keeps failing. Could you please tell me why it is failing?

Excuse me, teacher, will the automatically executed analyze statement be controlled by the max_execution_time system variable? If the automatically executed analyze statement can be controlled by the max_execution_time system variable, then can we set the max_execution_time to an acceptable value?

Automatic running is done in a single thread, so it’s definitely slow, which is why collection fails.

Could it be that the collection failed because it was slow? Shouldn’t it keep running? Is there some kind of timeout mechanism, or did something go wrong while it was running?

There is a window period, otherwise running continuously would affect your normal business.

Did you achieve this window period through max_execution_time?

tidb_auto_analyze_start_time and tidb_auto_analyze_end_time specify the start and end times for automatic analysis. For large tables, it is not recommended to use automatic collection. Instead, you can deploy scheduled tasks and use multi-threaded small sample collection; otherwise, it will be impossible to complete the collection for very large tables.

Got it, thank you, teacher.

Actually, it is also controlled by this variable, with multiple conditions interfering with each other. What tidb newbie said is also correct.

Check if the log shows the error “GC life time is shorter than transaction duration”? If so, it is likely a large table, and the analyze operation exceeded the GC life time. You can perform the analyze operation manually or create a crontab task to handle it.