Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: How does TiKV's SlowStoreScore mechanism work, and can it be adjusted manually?

[TiDB Usage Environment] Production Environment

[TiDB Version] 6.5.3

[Reproduction Path] What operations were performed that caused the issue

[Encountered Issue: Issue Phenomenon and Impact] Could you explain how TiKV's SlowStoreScore mechanism works? Is there a way to keep the score from reaching 100, which triggers Leader migration? Judging from CPU, memory, and IO, this shouldn't be happening. What is the upper limit for [raftstore.inspect-interval]? Can it be set to something like 24 hours to stop PD from migrating the Leader?

[Resource Configuration] Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

[Attachments: Screenshots/Logs/Monitoring]

The SlowStoreScore mechanism lets TiKV report whether a store is slowing down. Each TiKV store keeps a slow score between 1 and 100 and reports it to PD; the higher the score, the more slowly the store is responding. When a store's score reaches 100, PD's evict-slow-store-scheduler treats it as a slow store and transfers the Leaders on it to other stores.

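The score is not computed directly from CPU, memory, or disk-usage metrics. Instead, at every raftstore.inspect-interval TiKV inspects the latency of its Raftstore component, and any inspection that takes longer than that interval is counted as a timeout. The score rises with the proportion of timed-out inspections, so sustained high write latency in Raftstore (for example, slow disk writes) can push it up even when CPU and memory look normal.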
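TiKV's documentation describes slow-node detection as inspecting Raftstore latency at each raftstore.inspect-interval and judging slowness from the ratio of timed-out inspections. The toy model below is only meant to make that idea concrete. The update rule (multiplicative growth on timeouts, a fixed decay on clean rounds) and the names ToySlowScore, growth_factor, and decay are hypothetical and are not TiKV's actual formula; only the 1 to 100 range and the 500ms default interval come from TiKV.

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_SCORE = 1.0
MAX_SCORE = 100.0


@dataclass
class ToySlowScore:
    """Illustrative model only, NOT TiKV's real slow-score formula.

    Hypothetical rule: an inspection is a timeout when its latency exceeds
    inspect_interval_ms; a round with timeouts grows the score in proportion
    to the timeout ratio, and a clean round decays it back toward 1.
    """

    inspect_interval_ms: float = 500.0  # TiKV's documented default interval
    growth_factor: float = 2.0          # hypothetical tuning knob
    decay: float = 1.0                  # hypothetical points recovered per clean round
    score: float = MIN_SCORE

    def record_round(self, latencies_ms: list[float]) -> float:
        """Feed one round of inspection latencies and return the new score."""
        if not latencies_ms:
            return self.score
        timeouts = sum(1 for lat in latencies_ms if lat > self.inspect_interval_ms)
        ratio = timeouts / len(latencies_ms)
        if ratio > 0:
            # More timed-out inspections in a round means faster growth.
            self.score *= 1 + self.growth_factor * ratio
        else:
            self.score -= self.decay
        # Keep the score inside the 1..100 range.
        self.score = max(MIN_SCORE, min(MAX_SCORE, self.score))
        return self.score


if __name__ == "__main__":
    s = ToySlowScore()
    print(s.record_round([10.0, 12.0]))   # all fast: stays at 1.0
    print(s.record_round([10.0, 900.0]))  # half timed out: 2.0
    for _ in range(4):
        s.record_round([900.0, 900.0])    # 6.0, 18.0, 54.0, then capped at 100.0
    print(s.score)                        # 100.0
```

In this model, raising inspect_interval_ms also raises the timeout threshold, so the same latencies stop counting as timeouts; whether a real TiKV node behaves the same way should be checked against its own monitoring.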

If the CPU, memory, IO, and other metrics of your TiKV node look normal but the SlowStoreScore is still high, the cause may lie elsewhere. You can troubleshoot by:

- Checking the disk status of the TiKV node to see if there are any disk failures or abnormal disk IO issues.

- Checking the network status of the TiKV node to see if there are any network jitters or congestion issues.

- Checking the logs of the TiKV node to see if there are any abnormal logs or error messages.

The detection interval is set by the TiKV configuration item raftstore.inspect-interval, which defaults to 500ms (the documented minimum is 1ms). The same value also acts as the timeout for each inspection: an inspection slower than the interval is counted as a timeout. A 24-hour interval would be written as 24h (equivalently 86400s). The score threshold at which PD evicts Leaders is not exposed as a configuration item.

Finally, note that max-pending-peer-count does not prevent this. It is a PD scheduling limit on how many pending peers a store may have before PD stops scheduling new peers to it, and it does not block Leader transfers. The Leader eviction described above is performed by PD's evict-slow-store-scheduler. If you decide to turn off automatic slow-store handling, you can remove that scheduler with pd-ctl (scheduler remove evict-slow-store-scheduler), at the cost of losing this protection when a store really is slow.
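The PD side of this behavior can be pictured as a small state machine with hysteresis. The sketch below is a simplified, hypothetical model: the eviction threshold of 100 matches the behavior described in the question, while the recovery threshold of 1 and the rule of acting only when exactly one store is slow are assumptions based on a reading of PD's evict-slow-store-scheduler and should be verified against the PD source for your version. The function name next_evicted_store is invented for illustration.

```python
from __future__ import annotations

EVICT_THRESHOLD = 100   # score at which Leaders are evicted (matches the question)
RECOVER_THRESHOLD = 1   # assumption: eviction is lifted once the score falls back to 1


def next_evicted_store(evicted: int | None, scores: dict[int, float]) -> int | None:
    """Hypothetical model: return the store whose Leaders stay evicted, or None."""
    if evicted is not None:
        # Keep evicting until the slow store has fully recovered; a store that
        # no longer reports a score is treated as recovered.
        if scores.get(evicted, RECOVER_THRESHOLD) <= RECOVER_THRESHOLD:
            return None
        return evicted
    slow = [sid for sid, score in scores.items() if score >= EVICT_THRESHOLD]
    # Assumption: act only when exactly one store is slow, so a cluster-wide
    # slowdown does not cause mass Leader movement.
    return slow[0] if len(slow) == 1 else None


if __name__ == "__main__":
    print(next_evicted_store(None, {1: 1, 2: 100, 3: 5}))  # 2
    print(next_evicted_store(2, {1: 1, 2: 40}))            # 2 (not recovered yet)
    print(next_evicted_store(2, {1: 1, 2: 1}))             # None
```

The gap between the two thresholds is why Leaders may stay away from a store for a while after the underlying slowness has passed.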

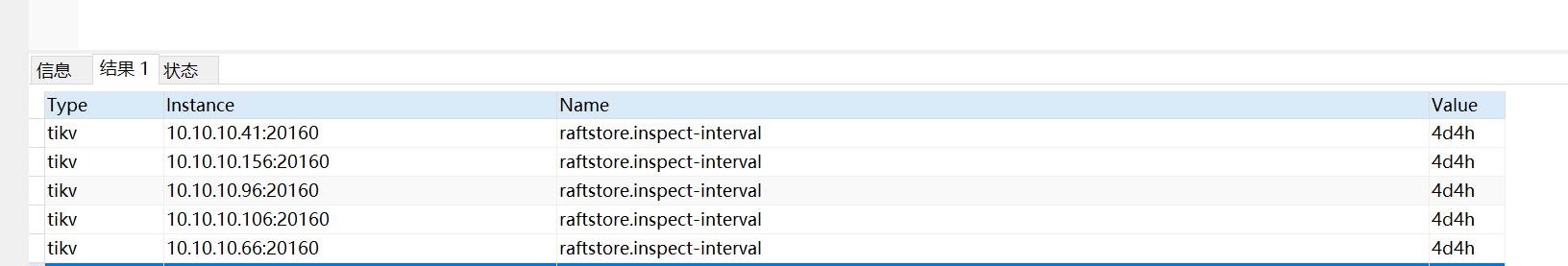

Hello, teacher. I tried setting raftstore.inspect-interval to 4d, as shown in the picture:

However, it doesn't seem to take effect. Inspections still run, the score still climbs, and Leader migration is still triggered. Is there a maximum for this value?


Thank you for the explanation, teacher. I roughly understand the principle now. Setting raftstore.inspect-interval to 4d doesn't seem to take effect, though. What could be the reason? We really just want slow-node detection to be less aggressive: we believe our disks and CPU are fine, and we don't want Leaders to be migrated so quickly.

Try setting this parameter to a few hours first, and then try specifying the value in ms.

I did set it as a value in ms; it is automatically displayed as n days or n hours.
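The last reply notes that a value entered in milliseconds is shown back with day or hour units. The sketch below converts between the two forms so you can check, for example, that 4d is 345,600,000 ms. The accepted suffixes (ms, s, m, h, d) and the "largest units first" display rule are assumptions modeled on TiKV-style duration strings; parse_duration_ms and format_duration_ms are hypothetical helpers, not TiKV's own parser.

```python
import re

# Unit sizes in milliseconds (assumed to mirror TiKV-style duration suffixes).
_UNITS_MS = {"d": 86_400_000, "h": 3_600_000, "m": 60_000, "s": 1_000, "ms": 1}
# "ms" is listed before "m" so that "500ms" is not read as "500m" + "s".
_PART = re.compile(r"(\d+)(ms|d|h|m|s)")


def parse_duration_ms(text: str) -> int:
    """Convert a string such as "4d", "24h" or "1h30m" to milliseconds."""
    text = text.strip()
    parts = _PART.findall(text)
    # Reject anything that is not made up entirely of <number><unit> pieces.
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unrecognized duration: {text!r}")
    return sum(int(n) * _UNITS_MS[u] for n, u in parts)


def format_duration_ms(ms: int) -> str:
    """Render milliseconds using the largest units first, e.g. 5400000 -> "1h30m"."""
    if ms == 0:
        return "0s"
    out = []
    for unit in ("d", "h", "m", "s", "ms"):
        size = _UNITS_MS[unit]
        if ms >= size:
            out.append(f"{ms // size}{unit}")
            ms %= size
    return "".join(out)


if __name__ == "__main__":
    print(parse_duration_ms("4d"))           # 345600000
    print(format_duration_ms(345_600_000))   # 4d
    print(format_duration_ms(86_400_000))    # 1d
```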
