Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: replace into 后丢失数据 (Data lost after REPLACE INTO)

[TiDB Usage Environment] Production Environment

[TiDB Version] v7.1.2

[Reproduction Path] Operations performed that led to the issue

[Encountered Issue: Phenomenon and Impact]

- Phenomenon One: In a Go program, multiple `REPLACE INTO` statements are executed within a single transaction, and some of the data is lost. The SQL statements are as follows:

REPLACE INTO ri_cg_report(`tenant`, `reportid`, `storeid`, `pla

REPLACE INTO ri_cg_report_info(`tenant`, `reportid`, `templatei

REPLACE INTO ri_cg_report_item(`tenant`, `itemid`, `reportid`,

REPLACE INTO ri_cg_report_item(`tenant`, `itemid`, `reportid`,

.... // Several lines omitted here

REPLACE INTO ri_cg_report_summary_item_relation(`tenant`, `repo

REPLACE INTO ri_item_disqualified(`tenant`, `disqualified_id`,

REPLACE INTO ri_item_disqualified(`tenant`, `disqualified_id`,

After the above transaction runs, 35 records should have been inserted into 4 tables. Instead, the first and second tables received no rows, the third table was missing some rows, and only the fourth table was complete. The transaction did not report any errors!

- Phenomenon Two: Executing `REPLACE INTO table1 SELECT * FROM table2` in the code returns an affected-row count that does not match the actual count. Upon investigation, the inserted data does not match the actual data, but no errors were reported.
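Part of the count mismatch may be expected behavior rather than data loss. In MySQL, the affected-row count of `REPLACE` is the sum of rows deleted and rows inserted, so it can exceed the number of source rows. The sketch below assumes TiDB reports the count the same way, which should be verified; `deletedByReplace` is a hypothetical helper and the numbers are made up. It does not explain the mismatch in the data itself.

```go
package main

import (
	"errors"
	"fmt"
)

// deletedByReplace estimates how many existing rows a REPLACE removed.
// Assumption: the affected-row count equals rows deleted plus rows inserted
// (the MySQL definition), and inserted is the number of source rows.
func deletedByReplace(affected, inserted int64) (int64, error) {
	if affected < inserted {
		// Fewer affected rows than source rows cannot be explained
		// by replacement alone and deserves a closer look.
		return 0, errors.New("affected count is lower than the number of source rows")
	}
	return affected - inserted, nil
}

func main() {
	// Hypothetical numbers: 100 source rows, 130 affected rows reported.
	deleted, err := deletedByReplace(130, 100)
	fmt.Println(deleted, err) // prints: 30 <nil>
}
```

Comparing this estimate against `SELECT COUNT(*)` on both tables before and after the statement would show whether the reported count is merely inflated by replaced rows or whether rows are actually missing.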

Regarding the two issues above: Phenomenon Two occurred a few times on version 6, but it has not happened in the two months since upgrading to version 7. Phenomenon One was only just discovered, and it is uncertain whether it occurred previously.

It’s unclear whether the issue is with Golang or TiDB. The critical point is that it doesn’t report any errors!!! It’s very frustrating.

Has anyone encountered similar issues? Could you provide some troubleshooting suggestions?

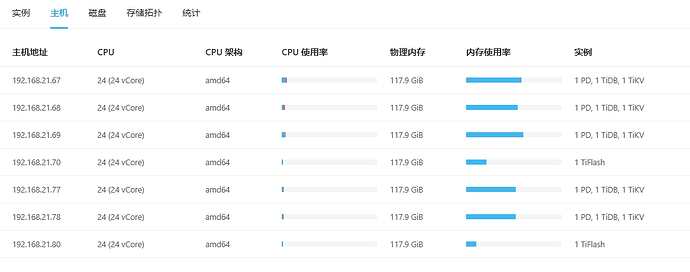

[Resource Configuration] Navigate to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

[Attachments: Screenshots/Logs/Monitoring]