Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: DDL stuck and not progressing (DDL卡住不动)

[TiDB Usage Environment] Production Environment

[TiDB Version] 5.2.2

[Encountered Problem] DDL execution gets stuck at a certain point for a long time

[Reproduction Path] Creating index DDL

[Problem Phenomenon and Impact]

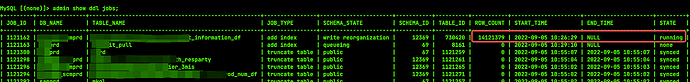

The table has about 170 million rows. Adding an index stalls at around 140 million rows and makes no progress for half an hour.

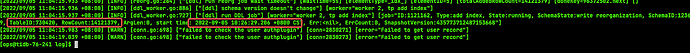

I checked the DDL worker logs and found no errors.
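To tell a stalled job from a merely slow one, it can help to sample the `ROW_COUNT` column of `ADMIN SHOW DDL JOBS` a few times and look at the rate. The sketch below only does the arithmetic; the sample values and the `min_rows_per_sec` threshold are assumptions for illustration, and fetching the samples from the cluster is left out.

```python
from typing import List, Tuple

# Each sample is (seconds since first check, ROW_COUNT reported for the job).
Sample = Tuple[float, int]


def backfill_rate(samples: List[Sample]) -> float:
    """Average rows per second between the first and last sample."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    (t0, r0), (t1, r1) = samples[0], samples[-1]
    if t1 <= t0:
        raise ValueError("samples must be in increasing time order")
    return (r1 - r0) / (t1 - t0)


def is_stalled(samples: List[Sample], min_rows_per_sec: float = 1.0) -> bool:
    """True when the observed rate is below the (hypothetical) threshold."""
    return backfill_rate(samples) < min_rows_per_sec


# Hypothetical readings matching the symptom: no movement over 30 minutes.
samples = [(0.0, 140_000_000), (600.0, 140_000_000), (1800.0, 140_000_000)]
print(is_stalled(samples))
```

If the rate is small but not zero, the job is slow rather than stuck, which points toward tuning instead of a bug.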

[Attachment]

First, check if there are too many data versions and if the GC hasn’t cleaned them up. There was a post discussing this issue a few days ago:

Adding an index to a 900-million-row table hasn’t finished after more than a day, how to troubleshoot? - TiDB - TiDB Q&A Community (asktug.com)

At that time, the GC was set to 10 minutes, and there weren’t a large number of data versions.

Following the post’s instructions didn’t work either.

It feels like this problem is quite common. I’ve seen several people experiencing this issue.

When you add an index to a table that already holds data, TiDB has to backfill the index for the existing rows. If that step is too slow, you can raise the backfill speed. However, this can have a significant performance impact on the cluster…
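The reply does not name the parameters, but backfill speed in TiDB is normally controlled by the system variables `tidb_ddl_reorg_worker_cnt` and `tidb_ddl_reorg_batch_size`. A minimal sketch that builds the statements follows; the helper name and the values 8 and 512 are assumptions, not recommendations from the thread.

```python
from typing import List


def backfill_tuning_sql(worker_cnt: int, batch_size: int) -> List[str]:
    """Build SET GLOBAL statements for the two DDL backfill variables.

    The values are placeholders; higher settings speed up the backfill
    at the cost of more load on the cluster.
    """
    if worker_cnt < 1 or batch_size < 1:
        raise ValueError("worker_cnt and batch_size must be positive")
    return [
        f"SET GLOBAL tidb_ddl_reorg_worker_cnt = {worker_cnt};",
        f"SET GLOBAL tidb_ddl_reorg_batch_size = {batch_size};",
    ]


# Hypothetical values, to be run from any MySQL-compatible client.
for stmt in backfill_tuning_sql(8, 512):
    print(stmt)
```

Raising the values in small steps while watching cluster latency is the cautious way to use this, given the performance impact mentioned above.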

I tried three times and adjusted the parameters, but it didn’t help. It always stalls at the point shown in the screenshot and doesn’t proceed.

I tried three times and waited several hours each time, but it didn’t work.

Create a new table with the same structure, build all the indexes on it, copy the data over… then drop the original table and rename the new one to the original name…
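The workaround above can be sketched as a sequence of SQL statements. This is only an illustration under stated assumptions: the table name `orders`, the index `idx_user`, and an integer primary key column `id` are all hypothetical, and the copy is split into `id` ranges because a single `INSERT ... SELECT` over a table this large is likely to hit the transaction size limit. Writes to the original table would need to be paused while this runs.

```python
from typing import List


def rebuild_table_sql(table: str, index_name: str, index_cols: List[str],
                      id_min: int, id_max: int, step: int) -> List[str]:
    """Statements for: new table, add index, batched copy, drop, rename."""
    if step < 1:
        raise ValueError("step must be positive")
    new = f"{table}_new"  # hypothetical name for the replacement table
    cols = ", ".join(f"`{c}`" for c in index_cols)
    stmts = [
        f"CREATE TABLE `{new}` LIKE `{table}`;",
        # The new table is empty, so this index needs no backfill.
        f"ALTER TABLE `{new}` ADD INDEX `{index_name}` ({cols});",
    ]
    # Copy rows in half-open id ranges [lo, hi) to keep transactions small.
    for lo in range(id_min, id_max + 1, step):
        hi = min(lo + step, id_max + 1)
        stmts.append(
            f"INSERT INTO `{new}` SELECT * FROM `{table}` "
            f"WHERE `id` >= {lo} AND `id` < {hi};"
        )
    stmts.append(f"DROP TABLE `{table}`;")
    stmts.append(f"RENAME TABLE `{new}` TO `{table}`;")
    return stmts


for stmt in rebuild_table_sql("orders", "idx_user", ["user_id"], 1, 250, 100):
    print(stmt)
```

Comparing row counts between the two tables before the `DROP TABLE` step is a sensible safeguard, since that step cannot be casually undone.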

Is it possible to do session tracking or Linux process tracking to see where it is stuck?

I feel that this issue is still affected by a bug. If possible, upgrade to 5.2.4.

The method I mentioned can help you get past it…

But to completely resolve it, you still need to upgrade…

Will our upgrade introduce any new issues? It seems like all the problems I’m encountering require an upgrade to resolve. I checked the 5.2.4 fix list and didn’t see this bug being fixed.

These are all long-standing issues with data processing.

I recommend upgrading to 5.2.4, as it has fixed some known issues.

I am concerned that upgrading from my previous version 5.0.6 to a version with a large gap might introduce additional bugs. I am not sure which version would be appropriate to upgrade to.

If you’re concerned, you can set up a test environment, run a POC, and base your evaluation on the results.

Time and cost are also an issue, especially for a large cluster of over 50 TB. Even adding an index is already a major operation.

I just put together a write-up on this; you can refer to it.