Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: DM is not syncing, but it does not report an error either.

[Environment] Test environment

[TiDB Version]

tidb: 6.1

dm: 6.1

[Encountered Issue]

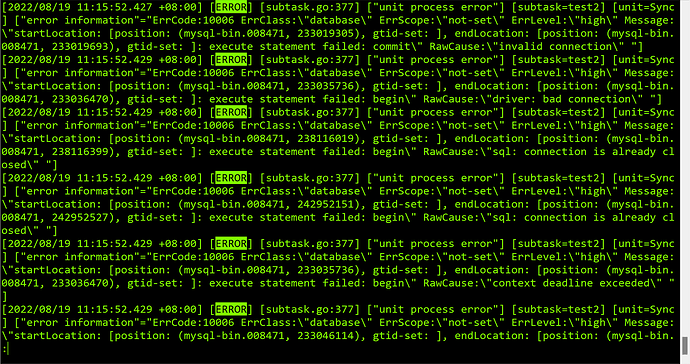

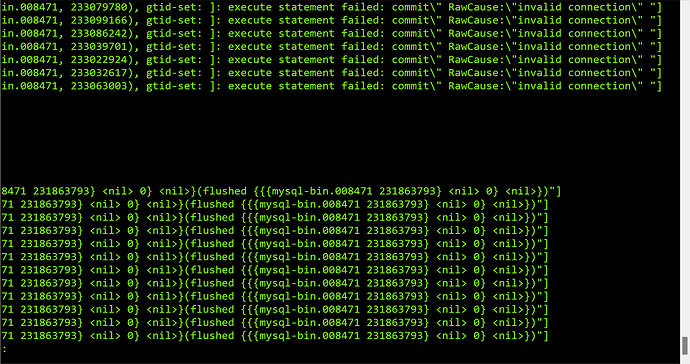

DM was found not to be syncing in the test environment, so I checked the dm-worker logs:

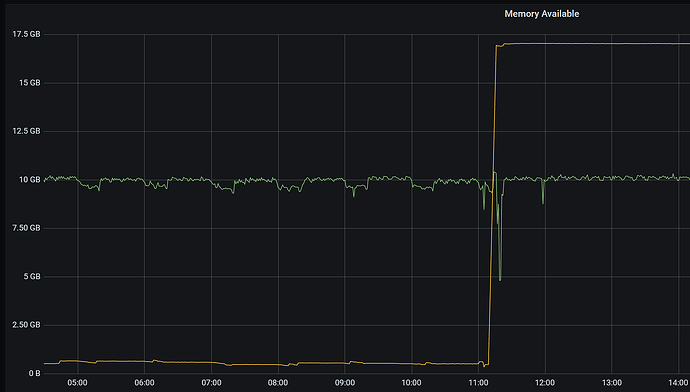

Monitoring shows that a TiKV node restarted (OOM) at the same time.

[Actions Taken]

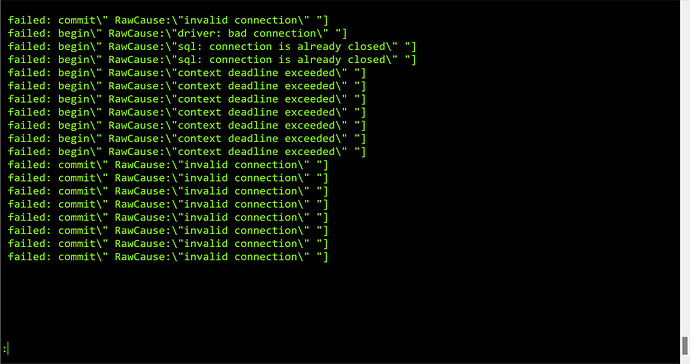

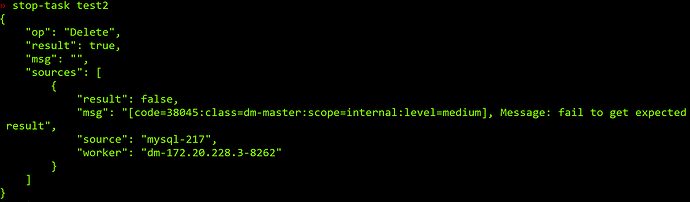

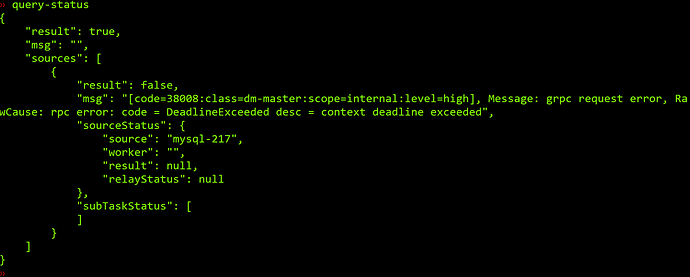

After running stop-task and then start-task again, the error shown in the images above occurred.

The last image should have detailed error information.

Is there any other place to check the error information besides the error message shown in the picture?

The same error is present in the dm-master logs.

Check if the upstream and downstream of the DM configuration can be connected normally.

The upstream and downstream connections are normal.

Could you please tell me how to check this issue?

Oh, then follow the suggestion above and check whether the ports are reachable: telnet to the dm-master port and to the dm-worker port, and confirm that the nodes can reach each other.
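
As a scriptable alternative to the telnet check suggested above, here is a minimal Python sketch that tests whether a TCP port accepts connections. The function names are illustrative and not part of DM; the host names in the usage note are hypothetical, and ports 8261 (dm-master) and 8262 (dm-worker) are assumed to be the DM defaults, so substitute the values from your own deployment.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def check_endpoints(endpoints, timeout=3.0):
    """Map each (host, port) pair to whether it is reachable."""
    return {(host, port): port_open(host, port, timeout) for host, port in endpoints}
```

For example, `check_endpoints([("dm-master-host", 8261), ("dm-worker-host", 8262)])` could be run from each DM node in turn. A successful connection only shows that the port is open; it does not show that the service behind it is healthy.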

Then try restarting dm-worker (the worker itself, not the task) and see whether that has any effect.

If that still doesn’t work, try enabling relay-log and see whether the problematic binlog can be pulled into the relay-log directory.

Check all communication ports related to DM to see if there are any anomalies.

There is no problem with the port of DM.

There is no issue with the DM port, I’ll try restarting the worker.

The problem is probably with the service itself.

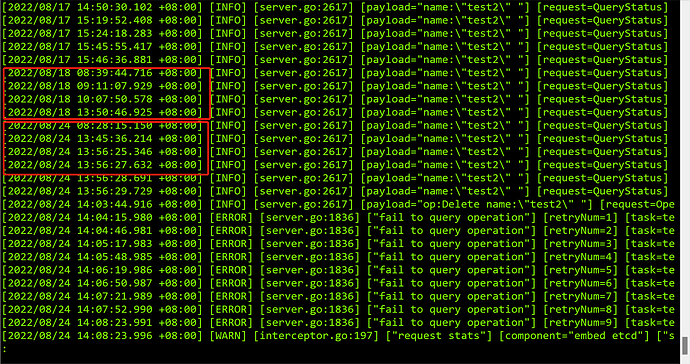

After restarting DM, there are indeed no errors, but it is not syncing.

The syncerBinlog has not changed, and the threads in the upstream database are also in a sleep state.
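
One way to confirm that the syncer position really is frozen is to record the syncerBinlog value from two query-status calls a few minutes apart and compare them. The sketch below assumes the value has the form `(mysql-bin.000003, 1234)` and that the file name ends in a numeric sequence after the last dot; the function names are illustrative and not part of DM.

```python
import re

# Matches positions such as "(mysql-bin.000003, 1234)".
_POS_RE = re.compile(r"\(\s*([^,\s]+)\s*,\s*(\d+)\s*\)")


def parse_binlog_pos(text):
    """Split a position string into (file_name, offset)."""
    match = _POS_RE.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"unrecognized binlog position: {text!r}")
    return match.group(1), int(match.group(2))


def _sort_key(text):
    """Order positions by file sequence number, then by offset."""
    name, offset = parse_binlog_pos(text)
    sequence = int(name.rsplit(".", 1)[1])  # assumes a numeric suffix
    return sequence, offset


def has_advanced(before, after):
    """Return True if the second position is strictly ahead of the first."""
    return _sort_key(after) > _sort_key(before)
```

If `has_advanced` returns False for two samples while the upstream is still receiving writes, the syncer is stalled rather than merely slow.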

After restarting DM, there are no error messages, but it just doesn’t synchronize.

Does the upstream still have the binlog? Check with SHOW BINARY LOGS. Then restart the dm-worker and try stopping and starting the task again. If that doesn’t work, try the relay-log method.
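
The binlog check above can be sketched as a small helper: given the rows returned by SHOW BINARY LOGS on the upstream and the file name taken from syncerBinlog, it reports whether that file is still retained. The function name and the sample rows in the usage note are hypothetical; the only assumption about the result set is that the first column of each row is Log_name.

```python
def binlog_retained(syncer_file, binary_log_rows):
    """Return True if syncer_file is still listed by SHOW BINARY LOGS.

    binary_log_rows is a sequence of rows as returned by a MySQL client,
    where the first column of each row is Log_name.
    """
    return any(row[0] == syncer_file for row in binary_log_rows)
```

With hypothetical rows such as `[("mysql-bin.000005", 1073742000), ("mysql-bin.000006", 52310)]`, a syncer still positioned on `mysql-bin.000003` would get False, which would suggest the file it needs has already been purged upstream.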

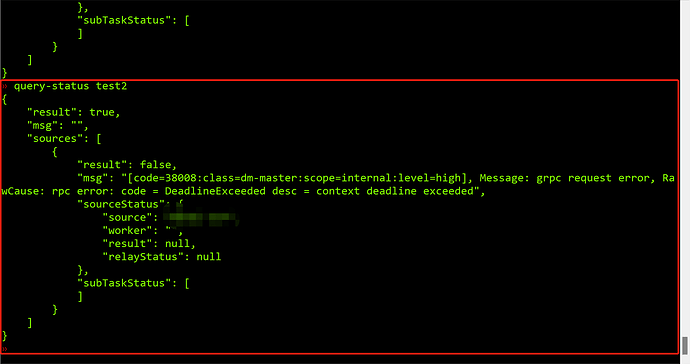

It seems that DM itself has a problem. The stop-task command hangs for a long time and then reports the following error:

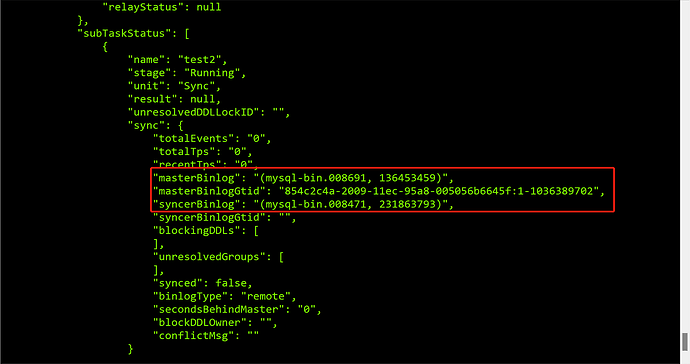

When querying the status again, the above problem appears: