Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: DM同步突然中断 (DM replication suddenly interrupted)

DM Version: v2.0.7

TiDB: v4.0.11

MySQL: 5.6.32

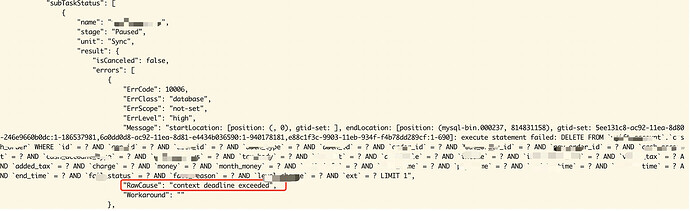

DM-Worker error:

Attempted recovery:

- pause-task to pause the task

- resume-task to resume the task

The error persists after both steps.

The synchronization has been running for a long time and suddenly encountered an error.

You can check the actual worker logs.

The worker logs keep reporting the following errors:

[2022/12/20 15:01:15.429 +08:00] [WARN] [task_checker.go:393] ["backoff skip auto resume task"] [component="task checker"] [task=vgift_account] [latestResumeTime=2022/12/20 14:58:45.429 +08:00] [duration=5m0s]

[2022/12/20 15:01:20.429 +08:00] [WARN] [task_checker.go:393] ["backoff skip auto resume task"] [component="task checker"] [task=vgift_account] [latestResumeTime=2022/12/20 14:58:45.429 +08:00] [duration=5m0s]

[2022/12/20 15:01:20.480 +08:00] [INFO] [syncer.go:2912] ["binlog replication status"] [task=exchange_order] [unit="binlog replication"] [total_events=488748531] [total_tps=30] [tps=0] [master_position="(mysql-bin.000229, 792724909)"] [master_gtid=3d063b5d-ac92-11ea-8d7f-ecf4bbdee7d4:1-186586092,48230336-ac92-11ea-8d80-e4434b036590:1-933946527,538fe0da-ac92-11ea-8d80-246e966090ac:1,9efc0181-e451-11eb-be59-b4055d643320:1-1023] [checkpoint="position: (mysql-bin.000016, 449314866), gtid-set: 3d063b5d-ac92-11ea-8d7f-ecf4bbdee7d4:1-186586092,48230336-ac92-11ea-8d80-e4434b036590:1-714173783,538fe0da-ac92-11ea-8d80-246e966090ac:1(flushed position: (mysql-bin.000016, 449314866), gtid-set: 3d063b5d-ac92-11ea-8d7f-ecf4bbdee7d4:1-186586092,48230336-ac92-11ea-8d80-e4434b036590:1-714173783,538fe0da-ac92-11ea-8d80-246e966090ac:1)"]

Check if the upstream and downstream are normal, and post the TiDB logs.

TiDB is functioning normally. This worker is not the only one connected to TiDB; many other workers are replicating to it without problems.

You can refer to these two issues~

As far as I remember, TiDB is compatible with MySQL 5.7. You could try upgrading the MySQL version.

It should have nothing to do with compatibility. The task had been running for a long time, and the statement that fails is an ordinary DML statement, a DELETE.

Did you see this question?

My case looks similar to that issue. I adjusted the GC time and then restarted the synchronization, but the error still occurred.

If the downstream table does not have a primary key or unique index, finding the row during deletion will be very slow, leading to operation timeout. You can try reducing the batch-size in the task configuration.
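To see which downstream tables are affected, something like the following could help. It is only a minimal sketch: it assumes a Python DB-API driver that uses `%s` placeholders (PyMySQL, for example) and that `information_schema.statistics` on the downstream reports primary-key and unique indexes with `non_unique = 0`. The function and constant names are made up for illustration and are not part of DM.

```python
from typing import List

# Lists base tables in one schema that have neither a PRIMARY KEY nor a
# UNIQUE index. information_schema.statistics has one row per indexed
# column; non_unique = 0 marks primary-key and unique indexes.
FIND_TABLES_WITHOUT_UNIQUE_KEY = """
SELECT t.table_name
FROM information_schema.tables AS t
LEFT JOIN information_schema.statistics AS s
       ON s.table_schema = t.table_schema
      AND s.table_name = t.table_name
      AND s.non_unique = 0
WHERE t.table_schema = %s
  AND t.table_type = 'BASE TABLE'
GROUP BY t.table_name
HAVING COUNT(s.index_name) = 0
"""


def tables_without_unique_key(cursor, schema: str) -> List[str]:
    """Run the query through a DB-API cursor and return the table names."""
    cursor.execute(FIND_TABLES_WITHOUT_UNIQUE_KEY, (schema,))
    return [row[0] for row in cursor.fetchall()]
```

Pass it a cursor opened against the downstream TiDB and the name of the schema the task writes to. Any table it returns is a candidate for the slow-delete problem described above.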

Does batch-size refer to the `batch` parameter? As far as I can see, this parameter cannot be updated dynamically after the task is created. My task has a large amount of data, and synchronization takes a long time. The table indeed has no primary key or unique index. If I add a unique index now, will that solve the problem?

Yes, adding a unique index to this table should improve the situation.

Is there another full synchronization task in progress when this error occurs? If so, wait for the full synchronization to complete and then observe whether the task returns to normal.
