Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 扩容TIKV 这样算是平衡了吗 (After scaling out TiKV, does this count as balanced?)

[TiDB Usage Environment] Production Environment

[TiDB Version] 4.0.13

[Encountered Problem: Problem Phenomenon and Impact]

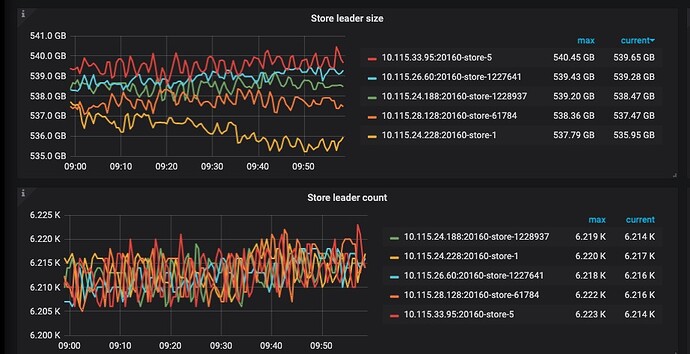

Experts, does this mean the expansion is complete? The leader size and leader count are nearly balanced, but store used and store region count still differ significantly between nodes. Is that because the space on the old nodes hasn’t been released yet? Can we go ahead and scale down now?

[Attachment: Screenshot/Log/Monitoring]

Just wait, leader balancing is much faster than region balancing.

Do we need to wait for the store region count to balance?

There are several settings that speed up scheduling. If it is an offline cluster, you can consider setting the following values using pd-ctl:


Modified values:

config set max-pending-peer-count 256

config set replica-schedule-limit 512

store limit all 180 add-peer

store limit all 180 remove-peer

Original values:

config set max-pending-peer-count 64

config set replica-schedule-limit 64

store limit all 15 add-peer

store limit all 15 remove-peer
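For a rough sense of what these limits mean: as far as I know, PD's store limit is expressed in operations per minute per store, so a value of 15 caps each store at about 15 add-peer operations a minute. Below is a minimal sketch of that arithmetic. It assumes the store limit is the only bottleneck (in practice `replica-schedule-limit`, snapshot speed, and disk or network I/O also matter), and the region count used is hypothetical, not taken from this cluster.

```python
import math


def estimate_balance_minutes(regions_to_move: int,
                             store_limit_per_min: float,
                             receiving_stores: int = 1) -> int:
    """Lower-bound estimate of how long moving region peers takes.

    Assumes each receiving store accepts at most `store_limit_per_min`
    add-peer operations per minute and nothing else slows scheduling.
    """
    if regions_to_move < 0:
        raise ValueError("regions_to_move must not be negative")
    if store_limit_per_min <= 0 or receiving_stores <= 0:
        raise ValueError("limit and store count must be positive")
    per_minute = store_limit_per_min * receiving_stores
    return math.ceil(regions_to_move / per_minute)


# Hypothetical workload: 9000 region peers moving onto one new store.
minutes_at_15 = estimate_balance_minutes(9000, 15)    # store limit 15
minutes_at_180 = estimate_balance_minutes(9000, 180)  # store limit 180
print(minutes_at_15, minutes_at_180)
```

With those made-up numbers, the estimate drops from 600 minutes to 50, which is why raising the limit helps on an offline cluster and why waiting is the safer choice in production.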

Scale down and the node will go offline automatically. Once it has gone offline successfully, you can clean it up.
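To tell when a scaled-down store is safe to clean up, one option is to look at its state in the `store` output from pd-ctl: a store being removed shows `Offline` while its regions are migrated away and becomes `Tombstone` once that finishes. Here is a minimal sketch, assuming the JSON has already been saved and parsed. The helper names and the sample data are hypothetical.

```python
def store_state(stores_doc: dict, store_id: int) -> str:
    """Return the state_name of one store from parsed pd-ctl `store` output."""
    for entry in stores_doc.get("stores", []):
        if entry["store"]["id"] == store_id:
            return entry["store"].get("state_name", "Unknown")
    raise KeyError(f"store {store_id} not found")


def safe_to_clean_up(stores_doc: dict, store_id: int) -> bool:
    """A store should only be cleaned up after PD marks it Tombstone."""
    return store_state(stores_doc, store_id) == "Tombstone"


# Hypothetical sample in the shape of pd-ctl `store` output (values invented).
sample = {
    "count": 3,
    "stores": [
        {"store": {"id": 1, "state_name": "Offline"},
         "status": {"leader_count": 0, "region_count": 1200}},
        {"store": {"id": 4, "state_name": "Up"},
         "status": {"leader_count": 900, "region_count": 2700}},
        {"store": {"id": 5, "state_name": "Tombstone"},
         "status": {"leader_count": 0, "region_count": 0}},
    ],
}

for sid in (1, 4, 5):
    print(sid, store_state(sample, sid), safe_to_clean_up(sample, sid))
```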

If you want to scale down the old nodes, you can directly evict all the leaders from the old nodes and then scale down.

Yes, just wait patiently. Eventually, the sloped line on the graph will level off, or you can adjust the speed as mentioned above.

In the production environment, we won’t adjust the speed anymore. Let’s just wait patiently.

This can only be done after the new expansion is completed, right?

After the store region count is balanced, do we need to pay attention to store_used? Does it also need to be balanced?

No need. Just add an evict-leader scheduler for the node you want to take offline, for example `scheduler add evict-leader-scheduler 1` (where 1 is the store ID). The leaders on this node will be transferred to other nodes. Once the transfer is complete, you can take the node offline directly. In fact, your new node is already providing service.
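Before taking the node offline, it may help to confirm that the eviction has actually finished. Here is a minimal sketch that reads the leader count for one store from parsed pd-ctl `store` output. The function names and sample values are hypothetical, and a missing `leader_count` field is treated as 0, which is an assumption about how the JSON is serialized.

```python
def leader_count(stores_doc: dict, store_id: int) -> int:
    """Return how many region leaders a store currently holds."""
    for entry in stores_doc.get("stores", []):
        if entry["store"]["id"] == store_id:
            # Assumption: an absent field means the count is zero.
            return int(entry.get("status", {}).get("leader_count", 0))
    raise KeyError(f"store {store_id} not found")


def leaders_evicted(stores_doc: dict, store_id: int) -> bool:
    """True once the evict-leader scheduler has moved every leader away."""
    return leader_count(stores_doc, store_id) == 0


# Hypothetical snapshots taken before and after eviction (values invented).
before = {"stores": [{"store": {"id": 1}, "status": {"leader_count": 350}}]}
after = {"stores": [{"store": {"id": 1}, "status": {"region_count": 1200}}]}

print(leaders_evicted(before, 1), leaders_evicted(after, 1))
```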

I haven’t done this before. Should I expand the capacity first, wait for it to balance, and then scale down the old nodes one by one? That might be more suitable for a beginner.

Sure, region balancing is definitely slower than leader balancing because the amount of data being moved is different. Just wait a bit longer.
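If you would rather check a number than watch the graph, the region counts in pd-ctl `store` output can be compared directly. Here is a minimal sketch under stated assumptions: PD actually balances on region score rather than raw count, so equal counts are only an approximation of "balanced", and the sample values below are hypothetical.

```python
def region_count_spread(stores_doc: dict) -> float:
    """Relative gap between the fullest and emptiest Up store (0.0 = even)."""
    counts = [
        entry.get("status", {}).get("region_count", 0)
        for entry in stores_doc.get("stores", [])
        if entry["store"].get("state_name") == "Up"
    ]
    if not counts or max(counts) == 0:
        return 0.0
    return (max(counts) - min(counts)) / max(counts)


# Hypothetical snapshots: mid-balance, then roughly settled (values invented).
mid_balance = {"stores": [
    {"store": {"id": 1, "state_name": "Up"}, "status": {"region_count": 3000}},
    {"store": {"id": 2, "state_name": "Up"}, "status": {"region_count": 2900}},
    {"store": {"id": 4, "state_name": "Up"}, "status": {"region_count": 1500}},
]}
settled = {"stores": [
    {"store": {"id": 1, "state_name": "Up"}, "status": {"region_count": 1000}},
    {"store": {"id": 2, "state_name": "Up"}, "status": {"region_count": 990}},
    {"store": {"id": 4, "state_name": "Up"}, "status": {"region_count": 980}},
]}

print(region_count_spread(mid_balance), region_count_spread(settled))
```

A spread that stops shrinking between snapshots is a reasonable sign that region balancing has settled; which threshold counts as "close enough" is a judgment call.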

By default, balancing is very slow and requires parameter adjustments to speed up. Theoretically, with three replicas, TiKV should be scaled down one by one.

Be patient and let KV fly for a while.
