Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: drainer out of memory

[TiDB Usage Environment] Production Environment

[TiDB Version] V3.0.9

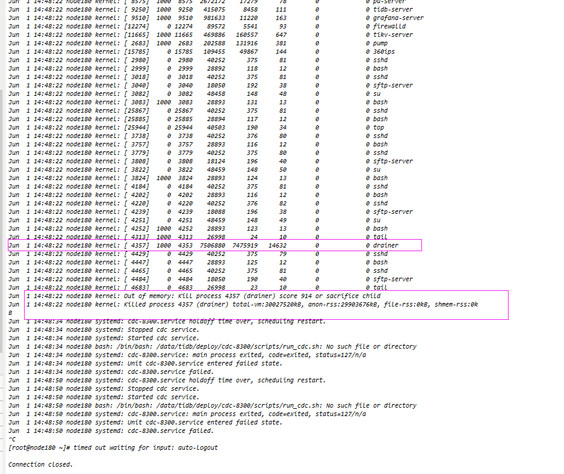

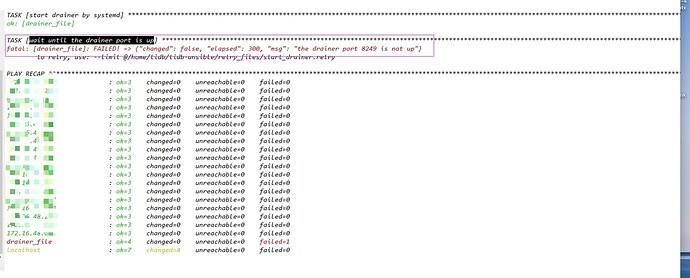

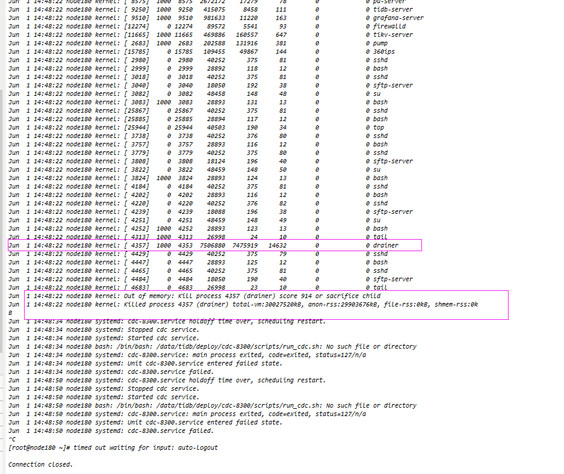

[Reproduction Path] I added a drainer node with Ansible. After startup, the drainer process exists, but it is not listening on its port.

[Encountered Problem: Symptoms and Impact]

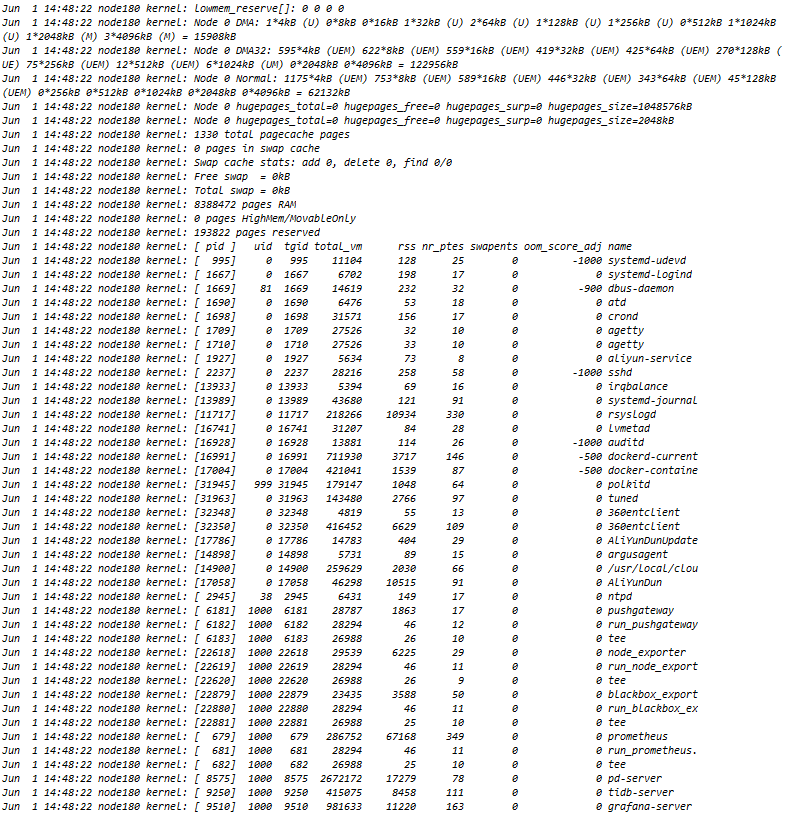

While drainer is starting up, its memory usage spikes until the system runs out of memory and kills the drainer process (OOM).

The downstream writes binlog files to a local directory, not to a database.

[Resource Configuration] 16 cores, 32 GB memory

[Attachments: Screenshots/Logs/Monitoring]

Has this database been running for a long time? Has it had many DDL operations? If so, this might be a known issue.

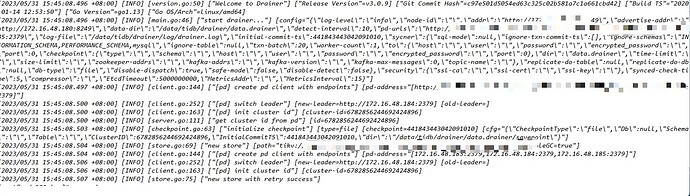

Yes, it has been running for a long time, with many DDL operations. Recently I wanted to upgrade to a newer version, so I planned to back up and replicate the data first. To do that, I enabled binlog and added a drainer node. As a result, memory was exhausted before drainer even finished starting up.

Is the downstream binlog consumer a file, or another TiDB or MySQL?

That’s a very old version…

The 3.0.x series has reached patch release 3.0.20, which has probably fixed a lot of bugs. You might want to consider upgrading.

It’s best to upgrade to a mainline version; you will run into fewer issues.

When drainer starts, it needs to load the full DDL history. It looks like you have a very large number of historical DDL jobs.

This could also be caused by a large transaction. Drainer has no mechanism for spilling large transactions to disk. Provided you have a full backup to start from, you can manually edit the commitTS in drainer’s savepoint file and then restart drainer to skip that large transaction.
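The thread does not show the savepoint file's layout, so the sketch below covers only one piece of the advice above: working out a commitTS value. A commitTS in TiDB is a TSO, whose high bits hold a physical timestamp in milliseconds since the Unix epoch and whose low 18 bits hold a logical counter. This minimal Python sketch converts between a timezone-aware datetime and a TSO; the function names are hypothetical helpers, not part of any TiDB tool. If your full backup records its own snapshot TSO, prefer that value over one derived from a wall-clock time.

```python
from datetime import datetime, timedelta, timezone

# A TiDB TSO = (physical milliseconds since the Unix epoch << 18) | logical counter.
LOGICAL_BITS = 18
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def tso_to_datetime(tso: int) -> datetime:
    """Return the physical part of a TSO as a UTC datetime (logical bits dropped)."""
    physical_ms = tso >> LOGICAL_BITS
    # Integer millisecond arithmetic avoids float rounding errors.
    return EPOCH + timedelta(milliseconds=physical_ms)


def datetime_to_tso(dt: datetime, logical: int = 0) -> int:
    """Build a TSO from a timezone-aware datetime and an optional logical counter."""
    if dt.tzinfo is None:
        raise ValueError("dt must be timezone-aware")
    if not 0 <= logical < (1 << LOGICAL_BITS):
        raise ValueError("logical counter must fit in 18 bits")
    # timedelta // timedelta yields an exact integer number of milliseconds.
    physical_ms = (dt - EPOCH) // timedelta(milliseconds=1)
    return (physical_ms << LOGICAL_BITS) | logical


if __name__ == "__main__":
    backup_time = datetime(2021, 1, 1, tzinfo=timezone.utc)  # example time only
    tso = datetime_to_tso(backup_time)
    print("commitTS:", tso)
    print("decoded:", tso_to_datetime(tso).isoformat())
```

Converting in both directions lets you sanity-check a commitTS taken from drainer's logs or savepoint before replacing it: decode it to a time and confirm it falls after the point the full backup covers.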

The first time I started drainer, it never finished starting up, so no savepoint was generated. Can I write one manually, put it in place, and then start drainer?

So drainer has no savepoint file? Drainer was added later, right? Normally there shouldn’t be large transactions all the time, should there?

Yes, drainer was added later, and it is now up and running (using 32 GB of RAM plus 250 GB of swap; startup took more than 10 hours). When drainer starts, it loads the historical DDL, so it needs a lot of memory. Without enough memory, it hits OOM and is killed by the system. Currently, replication cannot catch up, and the lag is significant. Relying on swap still isn't a workable solution.
