Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: drainer同步text字段时报error=“Error 1406: Data too long for column ‘’ at row 1”

[TiDB Usage Environment] Production Environment

[TiDB Version]

Upstream is v5.4.1

Downstream is v6.5.0

Data is synchronized from v5.4.1 to v6.5.0 via drainer

[Reproduction Path] What operations were performed to encounter the issue

Today, the downstream TiDB was upgraded to v6.5.0. The downstream was also v5.4.1 before the upgrade.

[Encountered Issue: Problem Phenomenon and Impact]

After the upgrade, drainer reported an error,

[error=“Error 1406: Data too long for column ‘xxxx’ at row 1”]

[2023/03/20 16:58:59.522 +08:00] [ERROR] [executor.go:135] [“Exec fail, will rollback”] [query="REPLACE INTO xxxx.xxx_xxxx_log(

There are two columns of type text in the table. I tried to mysqldump the upstream table and then import it into my personal test version v6.5.1, and it imported successfully.

Therefore, could you please check if there is a compatibility issue with version v6.5.0?

How can I skip this record? (I can manually skip this table, but if other tables have similar issues, skipping tables won’t be a viable solution.)

During the upgrade, should we upgrade the upstream first or the downstream first? How can we avoid such issues?

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

It’s a production environment, so I cannot provide table, column, IP, data, etc., information on the page.

Check if this is useful for you?

Modifications related to statistics were made in version v5.3.

My environment is v5.4.1 and v6.5.0, and I have also checked the system table structure. All three columns are already of the longblob type.

When checking upstream, the data size of the problematic id in the table was found to be 71797.

select length(json_result) from xxxx.xxx_xxxx_log where oper_id=xxxx;

+---------------------+

| length(json_result) |

+---------------------+

| 71797 |

+---------------------+

1 row in set (0.01 sec)

After exporting with mysqldump and manually importing into the personal test environment v6.5.1, the same id size was found to be 65535.

select length(json_result) from xxxx.xxx_xxxx_log where oper_id=xxxx;

+---------------------+

| length(json_result) |

+---------------------+

| 65535 |

+---------------------+

1 row in set (0.00 sec)

So, is there some limitation on text in version v6.5.1?

There are some incompatibilities with drainer support for version 5.X, and as for version 6.X, the differences are too significant… it can’t be supported.

I suggest you switch to ticdc…

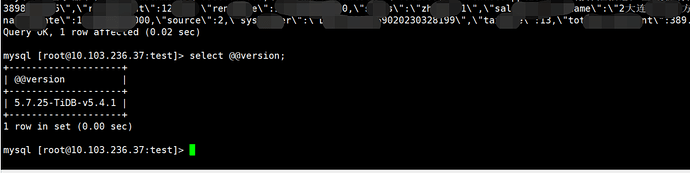

I copied the problematic record into an insert statement and tested it in two environments. In v5.4.1, I can manually insert the SQL, as shown in the image below:

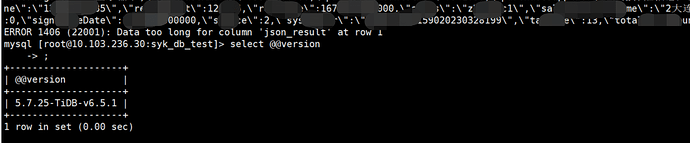

In version v6.5.1, an error occurs when manually inserting, as shown in the image below:

In the documentation:

How can I adjust the data type limit? Increase the text limit to more than 6M.

In the documentation:

There is a txn-entry-size-limit parameter. After adjusting this parameter in v6.5.1, the same insert SQL still reports an error.

In v5.4.1, it is possible to insert records exceeding 65535 rows, which is technically a bug.

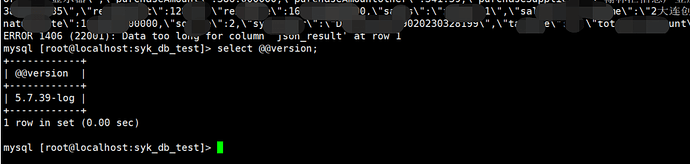

I conducted an insert test in mysql5.7.39 and found that it also resulted in “data too long,” which is the same behavior as in v6.5.1.

Explanation:

For the text type, it can exceed 65535, which is considered a bug. This bug was fixed in v6.5.1.

The following image shows the test in MySQL:

How did you adjust this parameter, can you provide a screenshot?

It is not much related to this parameter; it is an issue with the length of the text type. The behavior in version v6.5.1 is consistent with MySQL. In previous versions, values greater than 65535 would be successfully inserted.

This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.