Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: Error creating a catalog when integrating Flink with flink-tidb-connector-1.14

To improve efficiency, please provide the following information. A clear problem description will help resolve the issue faster:

[TiDB Usage Environment]

Self-hosted TiDB 6.1

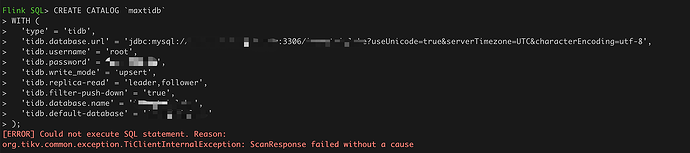

[Overview] Scenario + Problem Overview

We plan to create a TiDB catalog (tidb-catalog) in Flink so that tables no longer have to be created by hand when using tidb-cdc for subsequent real-time ETL.

[Flink + TiDB Upstream and Downstream Relationship and Logic]

Self-hosted TiDB and self-hosted Flink. For now we only plan to create a tidb-catalog for lookup joins (batch mode only; the Kafka part has not been added).
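The post does not include the DDL that was actually executed. As a reading aid, the Java sketch below rebuilds an equivalent Flink SQL `CREATE CATALOG ... WITH (...)` statement from the catalog name (`maxtidb`) and the properties printed in the `ValidationException` in the log further down. The class and method names are hypothetical, the password is replaced by a `<password>` placeholder, and the original statement may have differed in property order or formatting.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper: assembles a Flink SQL CREATE CATALOG statement
// from a catalog name and an ordered map of catalog properties.
public class TiDBCatalogDdl {

    public static String createCatalogDdl(String catalogName, Map<String, String> props) {
        // One "'key' = 'value'" pair per line, wrapped in WITH ( ... )
        StringJoiner options = new StringJoiner(",\n  ", "(\n  ", "\n)");
        for (Map.Entry<String, String> e : props.entrySet()) {
            options.add(quote(e.getKey()) + " = " + quote(e.getValue()));
        }
        return "CREATE CATALOG `" + catalogName + "` WITH " + options;
    }

    // SQL string literal: wrap in single quotes and double any embedded quote.
    public static String quote(String s) {
        return "'" + s.replace("'", "''") + "'";
    }

    public static void main(String[] args) {
        // Keys and values copied from the ValidationException in the log;
        // the password value is a placeholder, not the real one.
        Map<String, String> props = new LinkedHashMap<>();
        props.put("type", "tidb");
        props.put("tidb.database.url",
            "jdbc:mysql://max-tidb.utoper.com:3306/ipengtai_lake?useUnicode=true&serverTimezone=UTC&characterEncoding=utf-8");
        props.put("tidb.username", "root");
        props.put("tidb.password", "<password>");
        props.put("tidb.database.name", "ipengtai_lake");
        props.put("tidb.default-database", "ipengtai_lake");
        props.put("tidb.write_mode", "upsert");
        props.put("tidb.replica-read", "leader,follower");
        props.put("tidb.filter-push-down", "true");
        System.out.println(createCatalogDdl("maxtidb", props));
    }
}
```

The printed statement can be pasted into the Flink SQL client (after substituting the real password) to reproduce the `CREATE CATALOG` call that fails below.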

[Background] Operations performed

Checked the local debug logs.

[Symptoms] Business and database symptoms

The TiDB catalog cannot be created in Flink.

[Problem] Current issue encountered

2023-06-22 20:28:46,787 WARN org.tikv.common.region.AbstractRegionStoreClient - no followers of region[71] available, retry

2023-06-22 20:28:46,789 DEBUG org.tikv.common.region.RegionCache - invalidateRegion ID[71]

2023-06-22 20:28:46,789 WARN org.tikv.common.operation.RegionErrorHandler - request failed because of: DEADLINE_EXCEEDED: deadline exceeded after 59.996343708s. [buffered_nanos=275858542, remote_addr=/127.0.0.1:7890]

2023-06-22 20:28:46,795 DEBUG org.tikv.shade.io.grpc.netty.NettyClientHandler - [id: 0x6d25bde3, L:/127.0.0.1:62411 - R:/127.0.0.1:7890] OUTBOUND HEADERS: streamId=373 headers=GrpcHttp2OutboundHeaders[:authority: 172.16.229.95:20160, :path: /grpc.health.v1.Health/Check, :method: POST, :scheme: http, content-type: application/grpc, te: trailers, user-agent: grpc-java-netty/1.38.0, grpc-accept-encoding: gzip, grpc-timeout: 99822583n] streamDependency=0 weight=16 exclusive=false padding=0 endStream=false

2023-06-22 20:28:46,795 DEBUG org.tikv.shade.io.grpc.netty.NettyClientHandler - [id: 0x6d25bde3, L:/127.0.0.1:62411 - R:/127.0.0.1:7890] OUTBOUND DATA: streamId=373 padding=0 endStream=true length=5 bytes=0000000000

2023-06-22 20:28:46,799 DEBUG org.tikv.common.region.RegionCache - invalidateRegion ID[71]

2023-06-22 20:28:46,824 DEBUG org.tikv.shade.io.grpc.netty.NettyClientHandler - [id: 0x1352407b, L:/127.0.0.1:62375 - R:/127.0.0.1:7890] OUTBOUND HEADERS: streamId=405 headers=GrpcHttp2OutboundHeaders[:authority: 172.16.229.95:20160, :path: /grpc.health.v1.Health/Check, :method: POST, :scheme: http, content-type: application/grpc, te: trailers, user-agent: grpc-java-netty/1.38.0, grpc-accept-encoding: gzip, grpc-timeout: 99911625n] streamDependency=0 weight=16 exclusive=false padding=0 endStream=false

2023-06-22 20:28:46,824 DEBUG org.tikv.shade.io.grpc.netty.NettyClientHandler - [id: 0x1352407b, L:/127.0.0.1:62375 - R:/127.0.0.1:7890] OUTBOUND DATA: streamId=405 padding=0 endStream=true length=5 bytes=0000000000

2023-06-22 20:28:46,807 WARN org.apache.flink.table.client.cli.CliClient - Could not execute SQL statement.

org.apache.flink.table.client.gateway.SqlExecutionException: Could not execute SQL statement.

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:208) ~[flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.cli.CliClient.executeOperation(CliClient.java:639) ~[flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.cli.CliClient.callOperation(CliClient.java:473) [flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.cli.CliClient.executeOperation(CliClient.java:372) [flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.cli.CliClient.getAndExecuteStatements(CliClient.java:329) [flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.cli.CliClient.executeInteractive(CliClient.java:280) [flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.cli.CliClient.executeInInteractiveMode(CliClient.java:228) [flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.SqlClient.openCli(SqlClient.java:151) [flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:95) [flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.SqlClient.startClient(SqlClient.java:187) [flink-sql-client-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:161) [flink-sql-client-1.16.2.jar:1.16.2]

Caused by: org.apache.flink.table.api.ValidationException: Could not execute CREATE CATALOG: (catalogName: [maxtidb], properties: [{tidb.write_mode=upsert, tidb.database.name=ipengtai_lake, tidb.password=max-999100, tidb.replica-read=leader,follower, tidb.default-database=ipengtai_lake, tidb.filter-push-down=true, tidb.username=root, type=tidb, tidb.database.url=jdbc:mysql://max-tidb.utoper.com:3306/ipengtai_lake?useUnicode=true&serverTimezone=UTC&characterEncoding=utf-8}])

at org.apache.flink.table.api.internal.TableEnvironmentImpl.createCatalog(TableEnvironmentImpl.java:1435) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:1172) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:206) ~[flink-sql-client-1.16.2.jar:1.16.2]

… 10 more

Caused by: org.apache.flink.table.catalog.exceptions.CatalogException: Can not init client session

at io.tidb.bigdata.flink.connector.TiDBCatalog.initClientSession(TiDBCatalog.java:132) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.flink.connector.TiDBCatalog.open(TiDBCatalog.java:140) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:211) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.createCatalog(TableEnvironmentImpl.java:1431) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:1172) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:206) ~[flink-sql-client-1.16.2.jar:1.16.2]

… 10 more

Caused by: org.tikv.common.exception.TiClientInternalException: Error scanning data from region.

at org.tikv.common.operation.iterator.ScanIterator.cacheLoadFails(ScanIterator.java:123) ~[flink-sql-connector-tidb-cdc-2.3.0.jar:2.3.0]

at org.tikv.common.operation.iterator.ConcreteScanIterator.hasNext(ConcreteScanIterator.java:105) ~[flink-sql-connector-tidb-cdc-2.3.0.jar:2.3.0]

at io.tidb.bigdata.tidb.codec.MetaCodec.hashGetFields(MetaCodec.java:125) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.CatalogTransaction.getDatabases(CatalogTransaction.java:75) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.Catalog$CatalogCache.loadDatabases(Catalog.java:211) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.Catalog$CatalogCache.<init>(Catalog.java:151) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.Catalog$CatalogCache.<init>(Catalog.java:139) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.Catalog.<init>(Catalog.java:49) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.ClientSession.<init>(ClientSession.java:127) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.ClientSession.create(ClientSession.java:631) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.flink.connector.TiDBCatalog.initClientSession(TiDBCatalog.java:130) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.flink.connector.TiDBCatalog.open(TiDBCatalog.java:140) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:211) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.createCatalog(TableEnvironmentImpl.java:1431) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:1172) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:206) ~[flink-sql-client-1.16.2.jar:1.16.2]

… 10 more

Caused by: org.tikv.common.exception.TiClientInternalException: ScanResponse failed without a cause

at org.tikv.common.region.RegionStoreClient.isScanSuccess(RegionStoreClient.java:315) ~[flink-sql-connector-tidb-cdc-2.3.0.jar:2.3.0]

at org.tikv.common.region.RegionStoreClient.scan(RegionStoreClient.java:306) ~[flink-sql-connector-tidb-cdc-2.3.0.jar:2.3.0]

at org.tikv.common.region.RegionStoreClient.scan(RegionStoreClient.java:346) ~[flink-sql-connector-tidb-cdc-2.3.0.jar:2.3.0]

at org.tikv.common.operation.iterator.ConcreteScanIterator.loadCurrentRegionToCache(ConcreteScanIterator.java:80) ~[flink-sql-connector-tidb-cdc-2.3.0.jar:2.3.0]

at org.tikv.common.operation.iterator.ScanIterator.cacheLoadFails(ScanIterator.java:86) ~[flink-sql-connector-tidb-cdc-2.3.0.jar:2.3.0]

at org.tikv.common.operation.iterator.ConcreteScanIterator.hasNext(ConcreteScanIterator.java:105) ~[flink-sql-connector-tidb-cdc-2.3.0.jar:2.3.0]

at io.tidb.bigdata.tidb.codec.MetaCodec.hashGetFields(MetaCodec.java:125) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.CatalogTransaction.getDatabases(CatalogTransaction.java:75) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.Catalog$CatalogCache.loadDatabases(Catalog.java:211) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.Catalog$CatalogCache.<init>(Catalog.java:151) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.Catalog$CatalogCache.<init>(Catalog.java:139) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.catalog.Catalog.<init>(Catalog.java:49) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.ClientSession.<init>(ClientSession.java:127) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.tidb.ClientSession.create(ClientSession.java:631) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.flink.connector.TiDBCatalog.initClientSession(TiDBCatalog.java:130) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at io.tidb.bigdata.flink.connector.TiDBCatalog.open(TiDBCatalog.java:140) ~[flink-tidb-connector-1.14-0.0.5-SNAPSHOT.jar:?]

at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:211) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.createCatalog(TableEnvironmentImpl.java:1431) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:1172) ~[flink-table-api-java-uber-1.16.2.jar:1.16.2]

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeOperation(LocalExecutor.java:206) ~[flink-sql-client-1.16.2.jar:1.16.2]

… 10 more

[Business Impact]

[TiDB Version]

TiDB 6.1

[Flink Version]

Flink 1.16.2

[Attachments] Related logs and monitoring (https://metricstool.pingcap.com/)

flink-xiaolinwu-sql-client-…-.log (11.3 MB)