Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: BR full restore fails with the error "Coprocessor task terminated due to exceeding the deadline"

[TiDB Usage Environment] Production Environment / Testing

[TiDB Version] TiDB v5.3.0, BR v5.3.0

[Reproduction Path]

When performing a restore test with a full backup set from the production environment, an error occurred: Coprocessor task terminated due to exceeding the deadline.

[Problem Encountered: Symptoms and Impact]

Full restore <---------------------------------------------------------......................> 73.56%

Full restore <--------------------------------------------------------------------------------> 100.00%

[2023/07/24 11:05:53.139 +08:00] [INFO] [collector.go:65] ["Full restore failed summary"] [total-ranges=46104] [ranges-succeed=46104] [ranges-failed=0] [restore-checksum=5h11m42.859911719s] [split-region=1m47.011917972s] [restore-ranges=30688]

Error: other error: Coprocessor task terminated due to exceeding the deadline


[2023/07/24 11:05:53.139 +08:00] [ERROR] [restore.go:34] ["failed to restore"] [error="other error: Coprocessor task terminated due to exceeding the deadline"]
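To pull the numbers out of a summary line like the one above, a small shell helper can be used. This is a minimal sketch, not part of BR: the function name summarize_restore_log is hypothetical, and it only assumes the log contains a line with "Full restore success summary" or "Full restore failed summary" followed by [key=value] fields, as in the log above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the [key=value] fields of the last
# "Full restore ... summary" line in a BR log file, one field per line.
summarize_restore_log() {
  local log_file="$1"
  local line
  # Keep only the most recent summary line (success or failure).
  line=$(grep -E '"Full restore (success|failed) summary"' "$log_file" | tail -n 1)
  if [ -z "$line" ]; then
    echo "no restore summary found in $log_file" >&2
    return 1
  fi
  # Match fields shaped like [key=value]; timestamps, levels and source
  # locations contain no "key=" part, so they are skipped. Then strip brackets.
  printf '%s\n' "$line" \
    | grep -oE '\[[a-z-]+=[^]]*\]' \
    | sed -e 's/^\[//' -e 's/\]$//'
}
```

Run it against the file given to --log-file. For the summary line above it lists ranges-failed=0 alongside restore-checksum=5h11m42.859911719s, i.e. all 46104 ranges were reported as restored while the checksum step alone ran for over five hours; that tentatively suggests the deadline error surfaced during the checksum phase rather than while writing ranges.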

[Resource Configuration]

- Production Environment (Physical Machine Deployment)

3 TiDB (64C/256G), 3 TiKV (64C/256G), 3 PD (64C/64G)

- Testing Environment (PVE Virtualization Deployment)

3 TiDB (4C/8G), 3 TiKV (4C/8G), 3 PD (4C/4G)

- Data Volume

Snapshot size 265G.

- Restore Command Executed

export PD_ADDR="10.0.32.145:2379"

export BAKDIR="/tidb-bak/20230716"

nohup tiup br restore full --pd "${PD_ADDR}" --storage "local://${BAKDIR}" --ratelimit 64 --log-file restorefull_$(date +%Y%m%d).log &
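Before rerunning the restore, a quick sanity check of ${BAKDIR} can rule out the most obvious problems with a copied backup set. This is a minimal sketch assuming bash and find; precheck_backup_dir is a hypothetical helper, and the check for a top-level backupmeta file assumes the usual layout in which BR writes its metadata file under that name at the backup root. It cannot prove the backup is complete, only that nothing is trivially missing or empty.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a local backup directory before
# running a restore against it. Prints the SST file count and returns
# non-zero if the directory or backupmeta is missing, or any SST is empty.
precheck_backup_dir() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo "missing directory: $dir" >&2
    return 1
  fi
  # Assumption: BR stores its metadata in a file named "backupmeta".
  if [ ! -f "$dir/backupmeta" ]; then
    echo "no backupmeta file in $dir" >&2
    return 1
  fi
  local sst_count empty_count
  # $(( )) trims any padding that wc -l may add.
  sst_count=$(( $(find "$dir" -type f -name '*.sst' | wc -l) ))
  empty_count=$(( $(find "$dir" -type f -name '*.sst' -size 0 | wc -l) ))
  echo "SST files: $sst_count, empty: $empty_count"
  [ "$empty_count" -eq 0 ]
}
```

For example: precheck_backup_dir "${BAKDIR}".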

[Attachments: Screenshots/Logs/Monitoring]

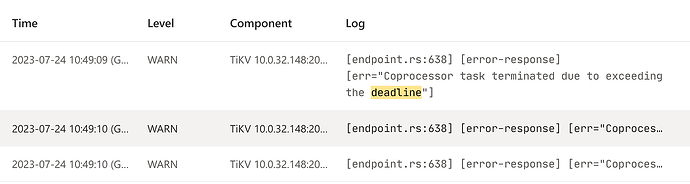

Error logs related to TiKV in the dashboard are as follows:

Is the test cluster running the same version as the production cluster? Did the test cluster already contain databases or tables with the same names before the restore?

It is probably because the test environment has too few resources. This error is usually caused by excessive load on TiKV.

The newly deployed test cluster runs the same software version as production and has the same number of nodes for each role.

The only differences are that the test environment has weaker hardware and runs openEuler 22.03 (which passed the tiup OS check), whereas production runs CentOS 7.9.

My initial judgment is also that the test environment lacks resources. I reran the restore with the --ratelimit 64 parameter added to limit the rate; I'm not sure whether it will help.

Have any limits been configured for scheduling?

No limits. It is a brand-new test environment deployed with the official basic configuration template.

Even with --ratelimit 64 added, the same error is reported. I'll try allocating more resources.

There is a case with the same error.

After adding -L debug, the log reports that an SST file cannot be found. My initial guess was that the backup files were corrupted while being copied. However, the backup set was compressed, and decompression completed without any errors. In a few days I will try mounting the backup directly over NFS to see whether the error still occurs.

I ran an MD5 checksum on every SST file in the backup sets in both the test and production environments, and none of the files are corrupted. Checking the backup log in the production environment, I found two "[pd] fetch pending tso requests error" errors near the end of the backup, but the log still ended with "Full backup success summary". Whenever I restore from these backup sets with BR, the process stops at around 75% and immediately reports that an SST file cannot be found.
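The MD5 comparison described above can be scripted so that both copies are checked file by file. This is a minimal sketch assuming bash and GNU coreutils md5sum (available on both CentOS 7.9 and openEuler 22.03); compare_sst_md5 and its directory arguments are hypothetical. Matching checksums only show that the two copies are identical: if a file was already missing or damaged when the production backup was written, both copies agree and this check still passes.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare MD5 checksums of every *.sst file under two
# backup directories. Prints the diff of the two checksum lists and returns
# 0 only if both directories hold the same SST files with identical contents.
compare_sst_md5() {
  local dir_a="$1" dir_b="$2"
  if [ ! -d "$dir_a" ] || [ ! -d "$dir_b" ]; then
    echo "usage: compare_sst_md5 DIR_A DIR_B (both must exist)" >&2
    return 2
  fi
  local list_a list_b rc=0
  list_a=$(mktemp) || return 2
  list_b=$(mktemp) || return 2
  # Checksum paths relative to each root, sorted by path, so the lists line up.
  (cd "$dir_a" && find . -type f -name '*.sst' -exec md5sum {} + | sort -k 2) > "$list_a"
  (cd "$dir_b" && find . -type f -name '*.sst' -exec md5sum {} + | sort -k 2) > "$list_b"
  # diff reports content mismatches and files present on only one side.
  diff "$list_a" "$list_b" || rc=1
  rm -f "$list_a" "$list_b"
  return "$rc"
}
```

For example: compare_sst_md5 /path/to/production-copy /tidb-bak/20230716, where the first path is a placeholder for wherever the production copy lives.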

When executing the BR restore command, if you encounter the error “Coprocessor task terminated due to exceeding the deadline,” it is usually because the default timeout period of the BR tool is too short. You can try increasing the --timeout parameter to extend the timeout period of the BR tool. For example:

tiup br restore full --pd "${PD_ADDR}" --storage "local://${BAKDIR}" --ratelimit 64 --log-file restorefull_$(date +%Y%m%d).log --timeout 7200s

The above command sets the timeout period of the BR tool to 7200 seconds (2 hours). You can adjust it according to your actual situation.

You can try the following methods for troubleshooting:

- Check if the SST files in the backup set are complete

Although you have already run an MD5 checksum on each SST file in the backup set, the files may still be corrupted. Try checking the SST files in the backup set again to make sure they are complete. If any files turn out to be corrupted, take a new backup.

- Check if the BR tool version is correct

The BR version should match the version of the TiDB cluster. If they do not match, BR may fail to read the SST files in the backup set correctly. Check whether the BR version matches the cluster version; if it does not, try upgrading BR or downgrading the TiDB cluster.

- Check if the parameters of the BR tool are correct

When executing the BR restore command, you need to specify some parameters, such as the address of the PD and the storage path of the backup files. If these parameters are not set correctly, the BR tool may not correctly recognize the SST files in the backup set. You can check if the parameters in the BR restore command are set correctly.

- Check if the disk space of the TiKV nodes is sufficient

During the BR restore process, the TiKV nodes need to write the SST files from the backup set to the disk. If the disk space of the TiKV nodes is insufficient, the BR restore may fail. You can check if the disk space of the TiKV nodes is sufficient.
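For the disk-space check, something like the following can be run on each TiKV host. This is a minimal sketch assuming bash and POSIX df; check_free_space_gib is hypothetical, and the TiKV data directory path has to come from your own topology (for example, the Data Dir column of tiup cluster display). Using the 265G snapshot size as the required amount is only a rough assumption: with 3 TiKV nodes and the default 3 replicas, each node ends up holding a replica of every Region, but the on-disk size after restore can differ from the backup size.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that the filesystem holding a directory has at
# least the given number of GiB free. Returns 0 if enough space is available.
check_free_space_gib() {
  local dir="$1" required_gib="$2"
  local avail_kib
  # df -P gives one line per filesystem; with -k, column 4 of the data line
  # is the available space in 1024-byte blocks.
  avail_kib=$(df -Pk "$dir" 2>/dev/null | awk 'NR == 2 { print $4 }')
  if [ -z "$avail_kib" ]; then
    echo "could not read free space for $dir" >&2
    return 2
  fi
  local avail_gib=$(( avail_kib / 1024 / 1024 ))
  echo "$dir: ${avail_gib} GiB available, ${required_gib} GiB required"
  [ "$avail_gib" -ge "$required_gib" ]
}
```

For example: check_free_space_gib /path/to/tikv-data 265, with the path replaced by the actual TiKV data directory on that host.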

I have limited the rate to 50 but haven't tried the timeout yet.

I have checked all 4 steps.