Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: tikv获取catalog报错 (TiKV reports an error when getting the catalog)

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version] 5.4.1

[Encountered Problem] I am trying to use CDCClient to synchronize TiDB data in real time, but calling TiSession.create(tiConfiguration).getCatalog() throws the following error:

    Exception in thread "main" java.lang.RuntimeException: org.tikv.common.exception.GrpcException: retry is exhausted.
        at com.aliyun.qijun.App.main(App.java:91)
    Caused by: org.tikv.common.exception.GrpcException: retry is exhausted.
        at org.tikv.common.util.ConcreteBackOffer.logThrowError(ConcreteBackOffer.java:235)
        at org.tikv.common.util.ConcreteBackOffer.doBackOffWithMaxSleep(ConcreteBackOffer.java:220)
        at org.tikv.common.util.ConcreteBackOffer.doBackOff(ConcreteBackOffer.java:160)
        at org.tikv.common.operation.PDErrorHandler.handleRequestError(PDErrorHandler.java:82)
        at org.tikv.common.policy.RetryPolicy.callWithRetry(RetryPolicy.java:97)
        at org.tikv.common.AbstractGRPCClient.callWithRetry(AbstractGRPCClient.java:89)
        at org.tikv.common.PDClient.getTimestamp(PDClient.java:160)
        at org.tikv.common.TiSession.getTimestamp(TiSession.java:320)
        at org.tikv.common.TiSession.createSnapshot(TiSession.java:326)
        at org.tikv.common.catalog.Catalog.<init>(Catalog.java:44)
        at org.tikv.common.TiSession.getCatalog(TiSession.java:357)
        at com.aliyun.qijun.App.main(App.java:89)
    Caused by: org.tikv.shade.io.grpc.StatusRuntimeException: DEADLINE_EXCEEDED: deadline exceeded after 0.199831333s. [buffered_nanos=201331500, waiting_for_connection]
        at org.tikv.shade.io.grpc.stub.ClientCalls.toStatusRuntimeException(ClientCalls.java:287)
        at org.tikv.shade.io.grpc.stub.ClientCalls.getUnchecked(ClientCalls.java:268)
        at org.tikv.shade.io.grpc.stub.ClientCalls.blockingUnaryCall(ClientCalls.java:175)
        at org.tikv.common.AbstractGRPCClient.lambda$callWithRetry$0(AbstractGRPCClient.java:92)
        at org.tikv.common.policy.RetryPolicy.callWithRetry(RetryPolicy.java:88)
        ... 7 more

[Reproduction Path] Steps taken that led to the problem

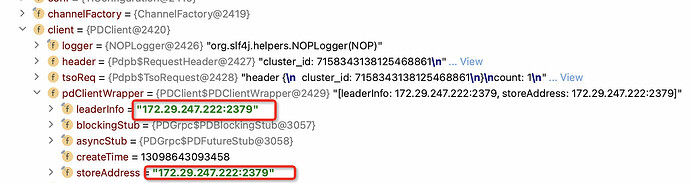

- TiDB was deployed on an Alibaba Cloud ECS instance with tiup cluster: 1 TiDB, 1 TiKV, 1 PD, and 1 TiFlash. Database functions work normally, and http://<public_ip>:2379/dashboard opens normally.

- Connecting with the following code throws the exception above at session.getCatalog(). Ports 2379 (PD) and 20160 and 20180 (TiKV) are open. When I package the same code into a jar and run it on the ECS instance where TiDB is deployed (also using the public IP), it works.

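    import org.tikv.common.TiConfiguration;
    import org.tikv.common.TiSession;
    import org.tikv.common.catalog.Catalog;
    import org.tikv.common.meta.TiTableInfo;

    // public_ip is a placeholder for the ECS public IP; 2379 is the PD port.
    TiConfiguration tiConfiguration = TiConfiguration.createDefault("http://public_ip:2379");
    TiSession session = TiSession.create(tiConfiguration);
    TiTableInfo table = null;
    try {
        // The exception is thrown here: getCatalog() requests a timestamp from PD.
        Catalog catalog = session.getCatalog();
        table = catalog.getTable("test", "test_1024_1");
    } catch (Exception e) {
        throw new RuntimeException(e);
    }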
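Since the innermost exception reports `waiting_for_connection`, it may be worth confirming from the failing client machine that the ports listed above actually accept TCP connections. The following is only a minimal sketch: the class name `PortCheck`, the 2-second timeout, and passing the host as a command-line argument are illustrative assumptions, not part of the original code.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts, and unresolved hosts.
            return false;
        }
    }

    public static void main(String[] args) {
        // Hypothetical usage: pass the host to probe (for example the public IP).
        String host = args.length > 0 ? args[0] : "127.0.0.1";
        int[] ports = {2379, 20160, 20180}; // PD and TiKV ports from the post
        for (int port : ports) {
            boolean ok = isReachable(host, port, 2000);
            System.out.println(host + ":" + port + " reachable=" + ok);
        }
    }
}
```

A successful TCP connect only shows that the port is open at that address; it does not prove that the client ends up using that same address for its gRPC calls.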

[Problem Phenomenon and Impact]

[Attachments]

Has anyone encountered a similar problem?
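One possible explanation, stated here as an assumption rather than something the post confirms, is that PD and TiKV advertise internal addresses that are reachable from the ECS host itself but not from an outside machine. PD exposes an HTTP API on the same port as the dashboard, and dumping `/pd/api/v1/members` and `/pd/api/v1/stores` shows which client URLs and store addresses are advertised. The sketch below only fetches and prints the raw JSON; the class name `PdAddressDump`, the 3-second timeouts, and the default address are illustrative assumptions.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

class PdAddressDump {
    /** Performs a plain HTTP GET and returns the response body as a UTF-8 string. */
    static String httpGet(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(3000);
        try (InputStream in = conn.getInputStream()) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical usage: pass the PD base URL, e.g. http://<public_ip>:2379
        String pd = args.length > 0 ? args[0] : "http://127.0.0.1:2379";
        // Look at "client_urls" in members and "address" in stores.
        System.out.println(httpGet(pd + "/pd/api/v1/members"));
        System.out.println(httpGet(pd + "/pd/api/v1/stores"));
    }
}
```

If the printed addresses turn out to be private IPs, that would be consistent with the code working on the ECS host and timing out elsewhere, though other causes such as security group rules are not ruled out by this check.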