Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 批量插入的预处理语句的执行计划和执行计划缓存

[TiDB Usage Environment] Production Environment

[TiDB Version] 6.5.0

[Reproduction Path] Operations performed that led to the issue

JDBC prepared statements, with the following options enabled:

useServerPrepStmts

rewriteBatchedStatements

tidb_enable_prepared_plan_cache

tidb_ignore_prepared_cache_close_stmt

Batch insert of 500 rows at a time, causing the execution plan cache to become huge and frequently leading to TiDB OOM due to too many parameters. tidb_prepared_plan_cache_size is set to only 1.

[Phenomenon]

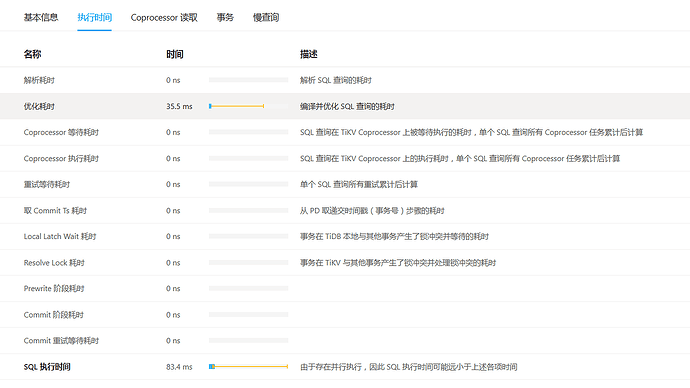

The execution time of the statement during insertion is as follows:

Task Type: root

Operator Info: N/A

Root Basic Exec Info:

{

"loops":"1"

"time":"18.8ms"

}

Root Group Exec Info:

[

0:{

"insert":"12.2ms"

"prepare":"6.62ms"

}

1:"lock_keys: {time:10.9ms, region:1, keys:500, slowest_rpc: {total: 0.010s, region_id: 308132995, store: 10.120.33.65:20160, tikv_wall_time: 9.8ms, scan_detail: {get_snapshot_time: 11.1µs, rocksdb: {block: {cache_hit_count: 4016}}}, }, lock_rpc:10.320771ms, rpc_count:1}"

2:NULL

]

[Question]

Is it possible to reduce the execution plan generation time for batch insert prepared statements (I don’t quite understand why an execution plan needs to be generated every time for insert statements)? Additionally, are there any mechanism changes in higher versions for the execution plan of batch inserts?

Reduce the number of rows and see if it still results in OOM.

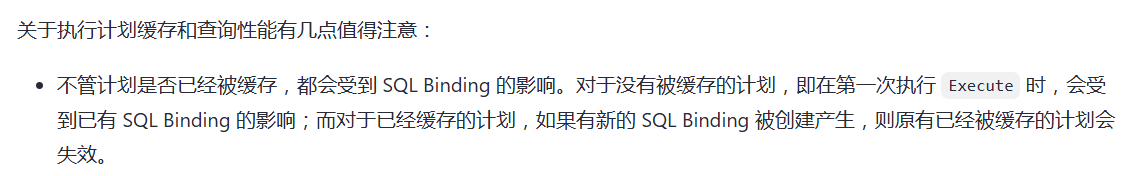

The difference is not very significant because we open multiple connections simultaneously for insertion. According to the documentation, this issue should always occur. Fundamentally, the bind for batch-insert prepared statements is almost always different each time. However, I don’t quite understand whether SQL binding here refers to the parameters of the prepared statement or SPM, because the SPM documentation does not mention that execution plans can be bound for insert statements.

What’s rather strange is that some of the commits seem to use the plan cache, but this does not align with the OOM phenomenon and the documentation description.

Not using features like execution plan caching works well. We even globally disabled execution plan caching before. However, now we actually want to know if it’s possible to optimize away the overhead of generating execution plans for insert statements.

Try this, but your version is 6.5, this parameter should solve the problem of a single statement occupying too much plancache due to inserting a large amount of data.

The memory issue in version 6.5 can be controlled using tidb_prepared_plan_cache_size, but the problem is that a significant portion of the execution time for these statements is still spent on compilation.

Setting tidb_prepared_plan_cache_size to 1 means that the vast majority of statements won’t benefit from the plan cache. In that case, it might be better to just disable it altogether. This setting is fine for testing, but it has no practical significance in a production environment.

With this value set to 1, most SQL statements will undergo compilation, which will definitely involve compilation.

Therefore, the parameter in version 7.1 is designed to handle situations where a large number of values are inserted, preventing them from entering the plan cache. Normal SQL statements can enter the plan cache to solve this issue.

Additionally, all statements must generate an execution plan, as data access operations require an execution plan. This is the same for all databases (although some databases do not have execution plan caching).

However, for batch insert statements, this session will only execute one prepared statement. If TiDB’s execution plan cache does not store and validate parameter values, shouldn’t it be possible to use the same cache every time? If the cache contains parameter bindings at execution time, wouldn’t it be inherently difficult to use the execution plan cache for batch insert statements? Additionally, what information does the execution plan for an insert statement contain? It doesn’t have different paths like query statements do.

If TiDB’s execution plan cache does not store and verify parameter values, then the same cache can be used every time? –Yes

If the cache contains parameter bindings at execution time, then it would be difficult to use the execution plan cache for batch insert statements, right? –Very difficult, because each time you prepare the statement, it becomes different (with parameter values), so it will frequently be eliminated from the execution plan, potentially causing OOM.

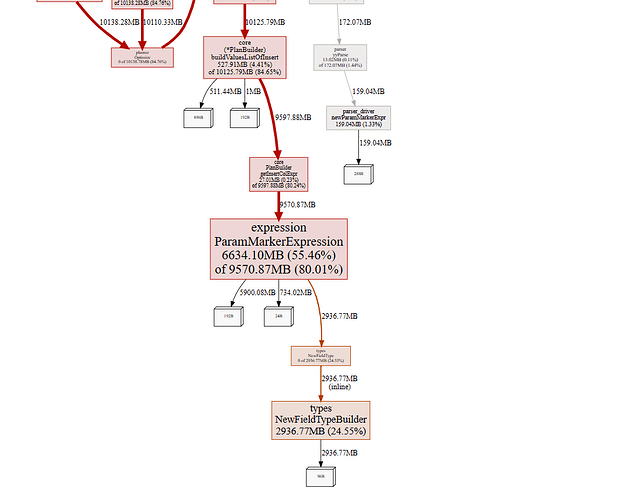

Moreover, what information does the execution plan of an insert statement contain? It’s not like query statements that have different paths. –During execution, it doesn’t contain much information, so the execution plan information retained in the plan cache is very simple. The largest object stored is actually the syntax tree structure generated by stmtid (which contains a large number of values), occupying a lot of memory. I remember that in a certain version, insert statements were directly ignored by the plan cache, but I forgot which version. You can check the issue. Additionally, for simple insert into statements, entering the plan cache is still very effective because it avoids hard parsing, significantly reducing CPU time.

Hmm, in that case, for batch inserts with parameterized prepared statements, the compile time is unavoidable and cannot be resolved through execution plan caching?

How did you determine that the OOM issue was caused by the execution plan cache?

In Grafana, I noticed that the plan cache memory usage under TiDB executor increased rapidly after batch insertion started, and it was almost entirely occupied by it. Additionally, a dump was performed at that time.

For your version, regarding batch-related sessions, I suggest:

- Set

tidb_prepared_plan_cache_size to the default value of 100.

- Ensure that the types of statements in the session during batch processing do not exceed

tidb_prepared_plan_cache_size, preferably not more than 10. This way, situations where many rows are inserted into values can reside in the plan cache. Be sure not to mix this with other non-batch operations to avoid frequent execution of non-batch statements, which could cause large batch statements to be evicted from the plan cache, triggering GC issues.

- Control the session for batch statements to be a separate connection (do not place it in the same connection pool as normal operations, or if placed together, do not release the connection. Otherwise, it may contaminate other connections, causing all connections to cache the execution plan of this “large statement,” leading to excessive overall memory usage).

- Ensure that the insert statement uses the form

statement="insert into xx values (?,?,?...)" with bound variables, which can use the plan cache.

Regarding your issue “batch insert of 500 rows at a time, causing frequent execution plan cache due to too many parameters, leading to TiDB OOM”, there are two possible reasons for TiDB OOM:

- The SQL text is indeed too large, and multiple connections have cached the execution plan of this statement, leading to excessive overall memory usage.

- The large statement is frequently evicted from the plan cache, causing excessive GC pressure on the backend Golang, which may lead to excessive memory usage and eventually OOM if GC is not timely.