Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 外部应用访问K8s搭建的Tidb集群失败 (External application fails to access a TiDB cluster deployed on K8s)

【TiDB Usage Environment】Production\Test Environment\POC

Test Environment

【TiDB Version】

5.4.1

【Encountered Problem】

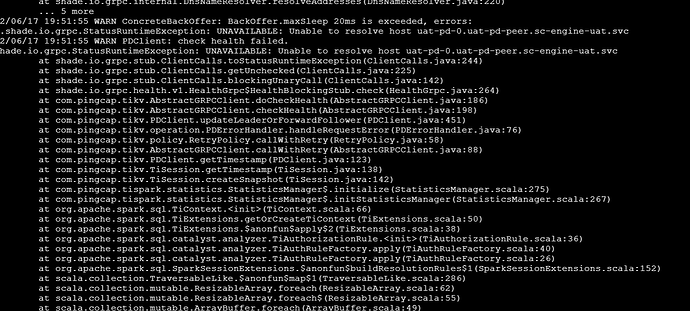

An external Spark cluster needs to access the TiDB cluster deployed on K8s, but domain name resolution fails.

【Reproduction Path】

【Problem Phenomenon and Impact】

【TiDB Operator Version】:

1.3

【K8s Version】:

【Attachments】:

The TiDB domain names in K8s are only meant for access from inside the K8s cluster. To access TiDB from outside, you first need to expose it through an address that is reachable externally.

Do we need to map every deployed pod? What IP should each one be mapped to? If we map to pod IPs, how do we deal with pod IP drift?

Use NodePort: create the Service yourself and use a selector to pick the corresponding component. You are using TiSpark, which accesses PD. If Spark runs on YARN, there may be a problem, because PD returns internal addresses and every YARN node needs to be able to resolve those hosts. If Spark runs on K8s, there won't be any problem.
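To make the NodePort suggestion concrete, here is a minimal sketch that generates such a Service for the PD component. The cluster name `basic`, the namespace `tidb-cluster`, the Service name suffix `-pd-external`, and the node port `32379` are placeholders, not values from this thread. The selector labels and PD client port 2379 are what TiDB Operator normally uses, but treat them as assumptions and check them with `kubectl get pods --show-labels` first.

```python
import json


def pd_nodeport_service(cluster: str, namespace: str, node_port: int) -> dict:
    """Build a NodePort Service manifest that selects the PD pods of one cluster."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        # Hypothetical name; any name that is unused in the namespace works.
        "metadata": {"name": f"{cluster}-pd-external", "namespace": namespace},
        "spec": {
            "type": "NodePort",
            # Assumed labels: verify against the labels on your PD pods.
            "selector": {
                "app.kubernetes.io/instance": cluster,
                "app.kubernetes.io/component": "pd",
            },
            "ports": [
                {
                    "name": "client",
                    "port": 2379,        # assumed PD client port
                    "targetPort": 2379,
                    "nodePort": node_port,  # must lie in the cluster's NodePort range
                }
            ],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(pd_nodeport_service("basic", "tidb-cluster", 32379), indent=2))
```

The output can be piped into `kubectl apply -f -`. Note that this only makes PD reachable from outside; as mentioned above, PD still hands internal addresses back to TiSpark, so the YARN nodes must also be able to resolve and reach those hosts.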

In on-YARN mode, Spark is not in the same cluster, so there are name resolution issues. Two problems need to be addressed:

1. DNS resolution of the K8s internal domain names, which we have already solved.
2. Pod IP drift: a pod's IP changes after it is restarted.

Do you have any good suggestions for the second one?
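One common way to sidestep IP drift, given that internal DNS already resolves from the YARN side, is to address pods by name and never store their IPs. Pods managed by a StatefulSet keep the same name, and therefore the same DNS record, across restarts even though the IP behind it changes. The sketch below is only an illustration: the `<cluster>-<component>-peer` headless Service naming and the `.svc` suffix are assumptions about how TiDB Operator lays things out (check with `kubectl get svc`), and `basic` and `tidb-cluster` are placeholder names.

```python
import socket


def stable_pod_hosts(cluster: str, namespace: str, component: str, replicas: int) -> list:
    """Return the per-pod DNS names, which stay the same when a pod is recreated."""
    # Assumed naming: <pod>.<headless-service>.<namespace>.svc
    return [
        f"{cluster}-{component}-{i}.{cluster}-{component}-peer.{namespace}.svc"
        for i in range(replicas)
    ]


def resolve_now(host: str, port: int) -> list:
    """Look the name up at call time so a new pod IP is picked up after a restart."""
    infos = socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    for host in stable_pod_hosts("basic", "tidb-cluster", "pd", 3):
        print(host)
```

Calling `resolve_now(host, 2379)` from a YARN node just before connecting shows which IP a name currently points to; it only works where the K8s internal DNS is reachable. The point of the design is that configuration and clients hold names, so nothing needs updating when a pod comes back with a different IP.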

Does TiDB Operator support TiSpark?
