Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: NUMA core binding fails when deploying TiDB 6.1 in an experimental environment

【TiDB Usage Environment】Experimental environment

【TiDB Version】v6.1.0; operating system: openEuler 22.03 LTS

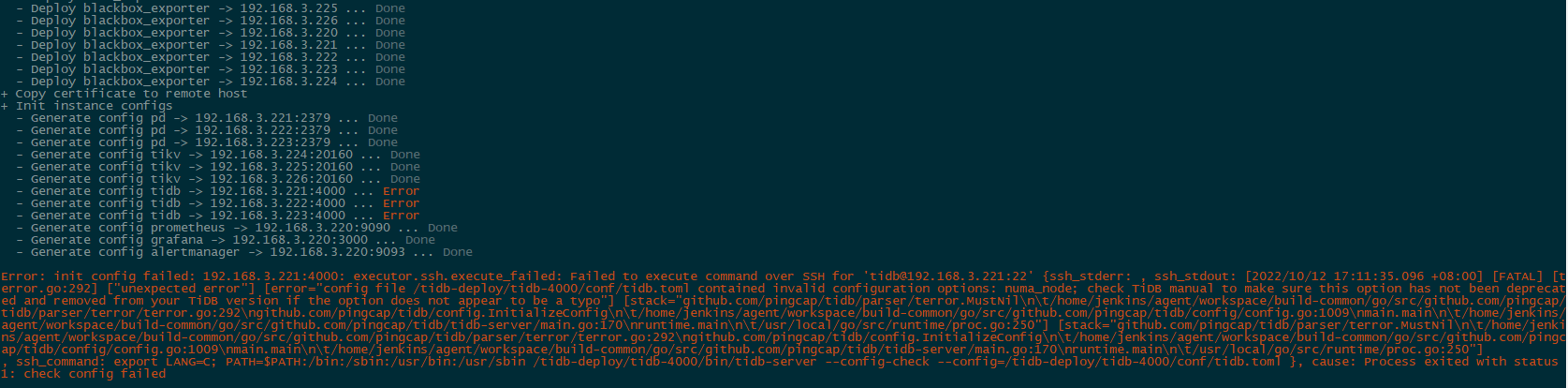

【Encountered Problem】numactl is installed, and TiDB and PD are deployed on the same hosts. NUMA binding failed for TiDB but succeeded for PD.

【Reproduction Path】

- Topology configuration file is as follows:

```yaml
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"
  arch: "amd64"

server_configs:
  tidb:
    new_collations_enabled_on_first_bootstrap: true
    numa_node: "0"
  pd:
    numa_node: "1"

monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

pd_servers:
  - host: 192.168.3.221
  - host: 192.168.3.222
  - host: 192.168.3.223

tidb_servers:
  - host: 192.168.3.221
  - host: 192.168.3.222
  - host: 192.168.3.223

tikv_servers:
  - host: 192.168.3.224
  - host: 192.168.3.225
  - host: 192.168.3.226

monitoring_servers:
  - host: 192.168.3.220

grafana_servers:
  - host: 192.168.3.220

alertmanager_servers:
  - host: 192.168.3.220
```

- NUMA information:

```console
[root@localhost ~]# numactl --hardware
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3
node 0 size: 2013 MB
node 0 free: 1722 MB
node 1 cpus: 4 5 6 7
node 1 size: 1403 MB
node 1 free: 1010 MB
node distances:
node   0   1
  0:  10  20
  1:  20  10
```
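The `node N cpus:` lines in the `numactl --hardware` output above show which CPUs each `numa_node` value corresponds to. The following is a minimal Python sketch that parses that output into a node-to-CPU map. It assumes the output format shown in this thread, and `parse_numa_cpus` is a hypothetical helper, not part of TiUP or numactl.

```python
from __future__ import annotations

import re

# `numactl --hardware` output copied from this thread.
SAMPLE = """\
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3
node 0 size: 2013 MB
node 0 free: 1722 MB
node 1 cpus: 4 5 6 7
node 1 size: 1403 MB
node 1 free: 1010 MB
node distances:
node   0   1
  0:  10  20
  1:  20  10
"""


def parse_numa_cpus(text: str) -> dict[int, list[int]]:
    """Map each NUMA node ID to the CPU IDs listed on its `node N cpus:` line."""
    nodes: dict[int, list[int]] = {}
    for line in text.splitlines():
        match = re.match(r"node (\d+) cpus:(.*)$", line.strip())
        if match:
            nodes[int(match.group(1))] = [int(c) for c in match.group(2).split()]
    return nodes


if __name__ == "__main__":
    print(parse_numa_cpus(SAMPLE))  # {0: [0, 1, 2, 3], 1: [4, 5, 6, 7]}
```

On this host, a binding to node 0 should confine a process to CPUs 0-3, and a binding to node 1 should confine it to CPUs 4-7. This assumes TiUP passes the `numa_node` value to numactl as a node ID.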

【Problem Phenomenon and Impact】

It seems that `numa_node` cannot be set under `server_configs`. Put this parameter in the configuration of each TiDB instance instead.

I'm leaving work now and will try again when I get home. NUMA binding for PD succeeded, and PD starts normally with systemctl.

After moving `numa_node` to the instance level, everything worked. Strangely, though, the PD core-binding setting can stay in `server_configs` and still deploy normally.

The configuration is as follows:

```yaml
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"
  arch: "amd64"

server_configs:
  tidb:
    new_collations_enabled_on_first_bootstrap: true
  pd:
    numa_node: "1"

monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

pd_servers:
  - host: 192.168.3.221
  - host: 192.168.3.222
  - host: 192.168.3.223

tidb_servers:
  - host: 192.168.3.221
    numa_node: "0"
  - host: 192.168.3.222
    numa_node: "0"
  - host: 192.168.3.223
    numa_node: "0"

tikv_servers:
  - host: 192.168.3.224
  - host: 192.168.3.225
  - host: 192.168.3.226

monitoring_servers:
  - host: 192.168.3.220

grafana_servers:
  - host: 192.168.3.220

alertmanager_servers:
  - host: 192.168.3.220
```

Check whether the PD startup script contains a `numactl` command, and verify that PD is actually bound.
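There are two complementary checks for "truly bound". The first looks for a `numactl --cpunodebind=...` invocation in the startup script. The second reads the running process's `Cpus_allowed_list` field from `/proc/<pid>/status`, a standard Linux field, and compares it against the node's CPUs from `numactl --hardware`. The following is a minimal Python sketch under these assumptions:

- The script excerpt is hypothetical, not copied from a real TiUP-generated script.
- Only the long `--cpunodebind` option with plain node IDs or ranges is recognized.
- The helper names are illustrative.

```python
from __future__ import annotations

import re

# CPU IDs per NUMA node, taken from the `numactl --hardware` output in this thread.
NODE_CPUS = {0: {0, 1, 2, 3}, 1: {4, 5, 6, 7}}

# Hypothetical startup-script excerpt, for illustration only.
SCRIPT = """#!/bin/bash
exec numactl --cpunodebind=1 --membind=1 bin/pd-server
"""


def parse_id_list(spec: str) -> set[int]:
    """Parse a Linux-style ID list such as '0-3,6' into {0, 1, 2, 3, 6}."""
    ids: set[int] = set()
    for part in spec.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            ids.update(range(int(lo), int(hi) + 1))
        else:
            ids.add(int(part))
    return ids


def numactl_nodes(script_text: str) -> set[int] | None:
    """Return the nodes passed to `numactl --cpunodebind`, or None if absent."""
    match = re.search(r"numactl\b[^\n]*?--cpunodebind[= ](\S+)", script_text)
    return parse_id_list(match.group(1)) if match else None


def cpus_allowed(status_text: str) -> set[int]:
    """Read the Cpus_allowed_list field from the contents of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("Cpus_allowed_list:"):
            return parse_id_list(line.split(":", 1)[1])
    raise ValueError("Cpus_allowed_list not found")


def is_bound_to(status_text: str, node: int) -> bool:
    """True if the process may only run on CPUs that belong to `node`."""
    allowed = cpus_allowed(status_text)
    return bool(allowed) and allowed <= NODE_CPUS[node]


if __name__ == "__main__":
    print("numactl nodes in script:", numactl_nodes(SCRIPT))
    # On a real host, pass open(f"/proc/{pid}/status").read() for the PD PID instead.
    print("bound to node 1:", is_bound_to("Cpus_allowed_list:\t4-7\n", 1))
```

The binding did not take effect if either of the following holds:

- The script has no `numactl` line (`numactl_nodes` returns `None`).
- `Cpus_allowed_list` still covers all eight CPUs (`0-7`).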

Posted in the wrong place

It was only written into PD's configuration file and had no effect (if anything, it was counterproductive). TiUP only checks that the configuration is in a `key: value` structure, so the misplaced setting was never rejected.

The `numa_node=1` setting is written into PD's TOML configuration file. It does not take effect, but it does not report an error either. I verified where each kind of setting ends up:

- Settings under `server_configs` are written into the component's `{deploy-dir}/conf/toml` configuration file.
- Settings at the component instance level are written into the component's run.sh script.
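Settings under `server_configs` end up in the TOML file, and there `numa_node` is silently ignored. A topology can therefore be checked for this mistake before deployment. The following is a minimal Python sketch with these assumptions:

- The topology has already been loaded into a dict, for example by a YAML parser.
- Only `numa_node` is treated as instance-only.
- Instances of component `X` are assumed to live under `X_servers`.
- `find_misplaced_numa` and `move_numa_to_instances` are hypothetical helpers, not TiUP features.

```python
from __future__ import annotations

import copy

# Per this thread, numa_node only works at instance level (it goes into the run
# script). Assumption: this is the only key checked here.
INSTANCE_ONLY_KEYS = {"numa_node"}


def find_misplaced_numa(topology: dict) -> list[str]:
    """Return 'server_configs.<component>.<key>' paths that belong per instance."""
    problems: list[str] = []
    for component, conf in (topology.get("server_configs") or {}).items():
        for key in conf or {}:
            if key in INSTANCE_ONLY_KEYS:
                problems.append(f"server_configs.{component}.{key}")
    return problems


def move_numa_to_instances(topology: dict) -> dict:
    """Return a copy with numa_node moved from server_configs onto each instance."""
    fixed = copy.deepcopy(topology)
    for component, conf in (fixed.get("server_configs") or {}).items():
        if not conf or "numa_node" not in conf:
            continue
        node = conf.pop("numa_node")
        # Assumption: instances of component X are listed under "X_servers".
        for instance in fixed.get(f"{component}_servers", []):
            instance.setdefault("numa_node", node)
    return fixed


# The first topology from this thread, reduced to the relevant parts.
FIRST = {
    "server_configs": {
        "tidb": {"new_collations_enabled_on_first_bootstrap": True, "numa_node": "0"},
        "pd": {"numa_node": "1"},
    },
    "pd_servers": [{"host": "192.168.3.221"}],
    "tidb_servers": [{"host": "192.168.3.221"}],
}

if __name__ == "__main__":
    for path in find_misplaced_numa(FIRST):
        print(f"{path}: move numa_node to each instance under *_servers")
    print(move_numa_to_instances(FIRST))
```

The fix mirrors what worked in the thread for TiDB: set `numa_node` on each instance. Per the analysis above, PD's setting under `server_configs` never took effect either, so the sketch moves it as well.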

The expert in the previous reply has done very detailed research.
