Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: k8s部署tidb失败,没有报错 (Deploying TiDB on k8s failed, with no error reported)

[TiDB Usage Environment] Production Environment / Testing / Poc

[TiDB Version]

[Reproduction Path] What operations were performed when the issue occurred

[Encountered Issue: Issue Phenomenon and Impact]

[Resource Configuration]

[TiDB Operator Version]: v1.5.2

[K8s Version]: v1.29.2

[Attachments: Screenshots / Logs / Monitoring]

tidb-operator tidb-admin 1 2024-03-13 13:36:47.522594135 +0800 CST deployed tidb-operator-v1.5.2 v1.5.2

tidb-operator pod logs:

E0313 06:17:24.355669 1 reflector.go:138] k8s.io/client-go@v0.20.15/tools/cache/reflector.go:167: Failed to watch *v1alpha1.TidbDashboard: failed to list *v1alpha1.TidbDashboard: the server could not find the requested resource (get tidbdashboards.pingcap.com)

E0313 06:18:09.206798 1 reflector.go:138] k8s.io/client-go@v0.20.15/tools/cache/reflector.go:167: Failed to watch *v1alpha1.TidbDashboard: failed to list *v1alpha1.TidbDashboard: the server could not find the requested resource (get tidbdashboards.pingcap.com)

E0313 06:19:03.449916 1 reflector.go:138] k8s.io/client-go@v0.20.15/tools/cache/reflector.go:167: Failed to watch *v1alpha1.TidbDashboard: failed to list *v1alpha1.TidbDashboard: the server could not find the requested resource (get tidbdashboards.pingcap.com)

E0313 06:19:52.099712 1 reflector.go:138] k8s.io/client-go@v0.20.15/tools/cache/reflector.go:167: Failed to watch *v1alpha1.TidbDashboard: failed to list *v1alpha1.TidbDashboard: the server could not find the requested resource (get tidbdashboards.pingcap.com)
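As a rough sketch of how these log lines could be read: the trailing `(get tidbdashboards.pingcap.com)` names the resource the API server could not find, which usually means that resource type is not registered in the cluster. The snippet below pulls that name out of one log line and, if `kubectl` is available, asks whether a CRD with that name exists. The variable names `log_line` and `missing_crd` are illustrative only, and the assumption that the missing resource is a CRD is a guess based on the error text, not something the logs confirm.

```shell
# One line copied verbatim from the tidb-operator pod log above.
log_line='E0313 06:17:24.355669 1 reflector.go:138] k8s.io/client-go@v0.20.15/tools/cache/reflector.go:167: Failed to watch *v1alpha1.TidbDashboard: failed to list *v1alpha1.TidbDashboard: the server could not find the requested resource (get tidbdashboards.pingcap.com)'

# Extract the resource name from the trailing "(get <name>)" part of the message.
missing_crd=$(printf '%s\n' "$log_line" | sed -n 's/.*(get \([^)]*\)).*/\1/p')
echo "missing resource: $missing_crd"

# Assumption: the missing resource is a CRD. If kubectl is on PATH, check
# whether a CRD with that name is registered; a failure here can also mean
# the cluster is unreachable, so treat the message as a hint only.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get crd "$missing_crd" --request-timeout=5s \
    || echo "could not find CRD $missing_crd (or the cluster is unreachable)"
fi
```

If the CRD turns out to be absent, comparing the output of `kubectl get crd` against the CRDs expected by the installed operator version would be a reasonable next step.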

kubectl apply -f tidb-test.yaml

tidbcluster.pingcap.com/tidb-test created

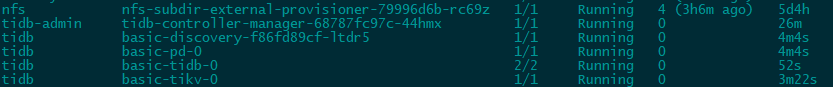

However, no pods were created, and I did not see any error messages either.

Where should I check to find out what went wrong?