Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: TiDB full data backup with BR fails

[TiDB Usage Environment] Testing

[Attachment: Screenshot/Log/Monitoring]

Command used: ./br backup full --pd "10.33.65.73:2379" --storage "local:///tidb-backup/test-tidb/" --log-file test-backupfull.log

Detailed BR log from test-backupfull.log:

[2023/11/02 16:59:54.194 +08:00] [INFO] [collector.go:77] ["Full Backup failed summary"] [total-ranges=0] [ranges-succeed=0] [ranges-failed=0]

Error: running BR in incompatible version of cluster, if you believe it's OK, use --check-requirements=false to skip.: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial tcp: lookup test-tidb-pd-1.test-tidb-pd-peer.base-server.svc on 127.0.0.53:53: no such host"

The local path is mounted on an NFS system on the local machine.

TiDB version 7.1.0, BR version 7.1.0

Can the machine executing the backup command access port 2379 of 10.33.65.73?

This IP is for the backend listener of a load balancer I set up for PD on port 2379. Running nc -z 10.33.65.73 2379 shows no issues.

In a K8s environment, it is recommended to back up with the Backup CRD provided by TiDB Operator.

Just add the parameter --check-requirements=false to ignore it.

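"lookup test-tidb-pd-1.test-tidb-pd-peer.base-server.svc on 127.0.0.53:53: no such host": this part is not right. The hostname is a Kubernetes in-cluster service name, and the local resolver (127.0.0.53) on the machine running BR cannot resolve it.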
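
To confirm that name resolution, not port reachability, is what fails, one can pull the hostname out of the error and try the same lookup on the machine that runs BR. The following is only a minimal sketch: the function name extract_unresolved_host is made up for illustration, and it assumes the error keeps the usual Go resolver wording "lookup <host> on <resolver>: no such host".

```shell
#!/bin/sh
# Print the hostname from a Go resolver error of the form
# "lookup <host> on <resolver>: no such host", read from stdin.
# (Hypothetical helper, not part of BR.)
extract_unresolved_host() {
  sed -n 's/.*lookup \([^ ]*\) on [^ ]*: no such host.*/\1/p'
}

# Error text copied from the BR log in this thread.
br_error='dial tcp: lookup test-tidb-pd-1.test-tidb-pd-peer.base-server.svc on 127.0.0.53:53: no such host'

host=$(printf '%s\n' "$br_error" | extract_unresolved_host)
echo "BR could not resolve: $host"

# Repeat the lookup locally. getent is assumed to be installed;
# if it is missing, this falls through to the "not resolvable" branch.
if command -v getent >/dev/null 2>&1 && getent hosts "$host" >/dev/null 2>&1; then
  echo "resolvable from this machine"
else
  echo "not resolvable from this machine (or getent unavailable)"
fi
```

If the name does not resolve, a successful nc -z against the load balancer IP does not help, because BR then tries to reach the PD members by the in-cluster names shown in the error.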

Refer to this; backups on K8s work differently from those on physical machines: Back Up a TiDB Cluster to Persistent Volumes | PingCAP Documentation Center
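
For reference, a Backup resource for a persistent-volume backup could look roughly like the sketch below, modeled on the example in that document. The cluster name test-tidb and namespace base-server are inferred from the PD hostname in the error above; the resource name, the prefix, and the NFS server and path are placeholders that must be replaced, and the exact fields should be checked against the TiDB Operator version in use.

```shell
#!/bin/sh
# Write a Backup manifest to a file. The quoted 'EOF' keeps
# ${nfs_server_ip} literal so it can be filled in by hand.
cat > backup-pv.yaml <<'EOF'
---
apiVersion: pingcap.com/v1alpha1
kind: Backup
metadata:
  name: test-tidb-backup-pv      # placeholder name
  namespace: base-server         # inferred from the PD hostname
spec:
  br:
    cluster: test-tidb           # inferred from the PD hostname
    clusterNamespace: base-server
  local:
    prefix: backup-nfs           # placeholder sub-directory for this backup
    volume:
      name: nfs
      nfs:
        server: ${nfs_server_ip} # placeholder: address of the NFS server
        path: /nfs               # placeholder: exported path
    volumeMount:
      name: nfs
      mountPath: /nfs
EOF

echo "wrote backup-pv.yaml"
# Then, with access to the cluster:
#   kubectl apply -f backup-pv.yaml
#   kubectl get backup -n base-server -o wide
```

With this method the backup job runs inside the cluster, so the PD peer names resolve normally and no external load balancer for PD is involved.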

I’ll try using the backup CRD.

PD does not require load balancing.

At first, I wanted to use SQL to back up through PD, but since PD is within the cluster and I am connecting remotely, I added a load balancer for external access. However, it didn’t seem to work well, so I ended up using the backup CRD.

After starting the job through CRD, this issue appears in the job logs. It seems to be due to the role not having the necessary permissions. I tried re-binding the role, but it still doesn’t work.

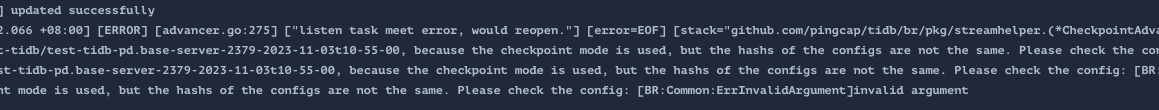

Another issue occurred during the backup using the CRD. The error in the log is as follows:

[2023/11/03 11:55:12.102 +08:00] [ERROR] [backup.go:54] ["failed to backup"] [error="failed to backup to file:////test-tidb/test-tidb-pd.base-server-2379-2023-11-03t10-55-00, because the checkpoint mode is used, but the hashes of the configs are not the same. Please check the config: [BR:Common:ErrInvalidArgument]invalid argument"] [errorVerbose="[BR:Common:ErrInvalidArgument]invalid argument\nfailed to backup to file:////test-tidb/test-tidb-pd.base-server-2379-2023-11-03t10-55-00, because the checkpoint mode is used, but the hashes of the configs are not the same. Please check the config\ngithub.com/pingcap/tidb/br/pkg/backup.(*Client).CheckCheckpoint\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/backup/client.go:267\ngithub.com/pingcap/tidb/br/pkg/task.RunBackup\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/pkg/task/backup.go:447\nmain.runBackupCommand\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/cmd/br/backup.go:53\nmain.newFullBackupCommand.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/cmd/br/backup.go:143\ngithub.com/spf13/cobra.(*Command).execute\n\t/go/pkg/mod/github.com/spf13/cobra@v1.6.1/command.go:916\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\t/go/pkg/mod/github.com/spf13/cobra@v1.6.1/command.go:1044\ngithub.com/spf13/cobra.(*Command).Execute\n\t/go/pkg/mod/github.com/spf13/cobra@v1.6.1/command.go:968\nmain.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/cmd/br/main.go:58\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1598"] [stack="main.runBackupCommand\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/cmd/br/backup.go:54\nmain.newFullBackupCommand.func1\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/cmd/br/backup.go:143\ngithub.com/spf13/cobra.(*Command).execute\n\t/go/pkg/mod/github.com/spf13/cobra@v1.6.1/command.go:916\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\t/go/pkg/mod/github.com/spf13/cobra@v1.6.1/command.go:1044\ngithub.com/spf13/cobra.(*Command).Execute\n\t/go/pkg/mod/github.com/spf13/cobra@v1.6.1/command.go:968\nmain.main\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/br/br/cmd/br/main.go:58\nruntime.main\n\t/usr/local/go/src/runtime/proc.go:250"]

Reassigning the role and rolebinding resolved this issue.
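
For anyone hitting the same permission problem, the role and rolebinding in question might look like the sketch below. This is an assumption, not the poster's actual manifest: it follows the sample backup-rbac.yaml shipped with TiDB Operator as best I recall it, with the default service account name tidb-backup-manager, and the rules may differ between Operator versions, so compare it with the file from your release.

```shell
#!/bin/sh
# Write an RBAC manifest modeled on TiDB Operator's sample backup-rbac.yaml.
# Names and rules are assumptions; verify against your Operator version.
cat > backup-rbac.yaml <<'EOF'
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tidb-backup-manager
  labels:
    app.kubernetes.io/component: tidb-backup-manager
rules:
- apiGroups: [""]
  resources: ["events"]
  verbs: ["*"]
- apiGroups: ["pingcap.com"]
  resources: ["backups", "restores"]
  verbs: ["get", "watch", "list", "update"]
---
kind: ServiceAccount
apiVersion: v1
metadata:
  name: tidb-backup-manager
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tidb-backup-manager
  labels:
    app.kubernetes.io/component: tidb-backup-manager
subjects:
- kind: ServiceAccount
  name: tidb-backup-manager
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: tidb-backup-manager
EOF

echo "wrote backup-rbac.yaml"
# Apply it in the namespace where the Backup resource lives
# (base-server is inferred from the hostnames in this thread):
#   kubectl apply -f backup-rbac.yaml -n base-server
# Check that the service account can update Backup resources:
#   kubectl auth can-i update backups.pingcap.com -n base-server \
#     --as=system:serviceaccount:base-server:tidb-backup-manager
```

The Role and RoleBinding are namespaced, so they need to exist in the same namespace as the Backup resource for the job's service account to pick them up.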

There is currently an error in the job that is causing the backup task to fail.

I can’t figure it out.

There seems to be an issue with the display parameters. Can you show the YAML configuration file you are currently using?

After I changed the configuration, another issue started to occur.