Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Scaling out TiFlash through TiDB Operator fails to create new nodes; the operator reports the following errors

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version] v6.1.0

[Reproduction Path] What operations were performed when the issue occurred

[Encountered Issue: Issue Phenomenon and Impact]

[Resource Configuration]

[Attachments: Screenshots / Logs / Monitoring]

kubectl logs -f pod/tidb-controller-manager-75b6df5c47-4fv87 -ntidb-admin | grep yz

E1206 09:43:13.154628 1 tidbcluster_control.go:85] failed to update TidbCluster: [tidb/yz], error: Operation cannot be fulfilled on tidbclusters.pingcap.com "yz": the object has been modified; please apply your changes to the latest version and try again

E1206 09:43:13.154682 1 tidb_cluster_controller.go:126] TidbCluster: tidb/yz, sync failed Operation cannot be fulfilled on tidbclusters.pingcap.com "yz": the object has been modified; please apply your changes to the latest version and try again, requeuing

I1206 09:43:14.841514 1 pv_control.go:192] PV: [tidb-cluster-r24-pv04] updated successfully, : tidb/yz

E1206 09:43:15.155950 1 tidbcluster_control.go:85] failed to update TidbCluster: [tidb/yz], error: Operation cannot be fulfilled on tidbclusters.pingcap.com "yz": the object has been modified; please apply your changes to the latest version and try again

E1206 09:43:15.155986 1 tidb_cluster_controller.go:126] TidbCluster: tidb/yz, sync failed Operation cannot be fulfilled on tidbclusters.pingcap.com "yz": the object has been modified; please apply your changes to the latest version and try again, requeuing

I have encountered the same problem. After upgrading to version 5.4.0, the problem was resolved.

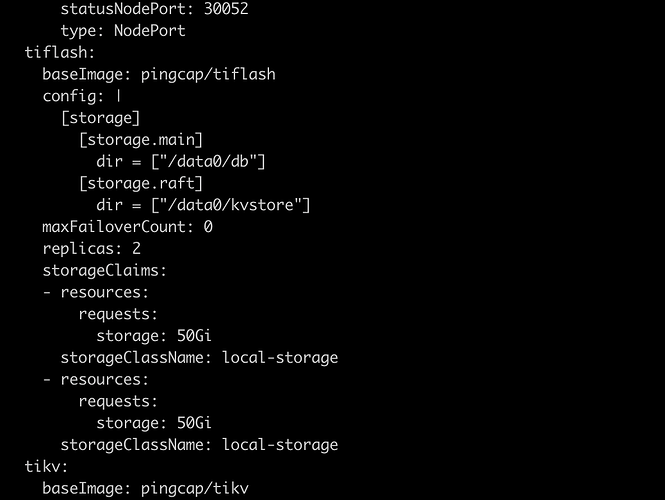

After further investigation, I found a problem in the TiFlash configuration file, which caused the following error:

I1206 10:09:03.063579 1 event.go:282] Event(v1.ObjectReference{Kind:"TidbCluster", Namespace:"tidb", Name:"yz", UID:"50b1bdbc-629c-424e-9247-e36426ebd23c", APIVersion:"pingcap.com/v1alpha1", ResourceVersion:"52683256", FieldPath:""}): type: 'Warning' reason: 'FailedUpdateTiFlashSTS' contains volumeMounts that do not have matched volume: map[data1:{data1 false /data1 }]

E1206 10:09:16.330147 1 tidb_cluster_controller.go:126] TidbCluster: tidb/yz, sync failed contains volumeMounts that do not have matched volume: map[data1:{data1 false /data1 }], requeuing

I1206 10:09:16.330561 1 event.go:282] Event(v1.ObjectReference{Kind:"TidbCluster", Namespace:"tidb", Name:"yz", UID:"50b1bdbc-629c-424e-9247-e36426ebd23c", APIVersion:"pingcap.com/v1alpha1", ResourceVersion:"52683256", FieldPath:""}): type: 'Warning' reason: 'FailedUpdateTiFlashSTS' contains volumeMounts that do not have matched volume: map[data1:{data1 false /data1 }]

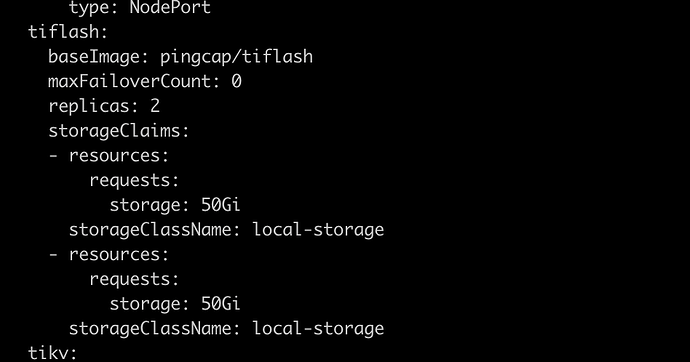
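The warning says the TiFlash StatefulSet spec the operator tried to apply mounts a volume named data1 that no volume in that spec defines. To spot that kind of mismatch by hand, here is a hypothetical POSIX shell helper (unmatched_mounts is not part of TiDB Operator or kubectl) that prints every volumeMount name with no volume or volumeClaimTemplate of the same name. It is only a sketch: the commented usage assumes the mismatch is visible in the live yz-tiflash StatefulSet, whereas the operator checks the spec it generates from the TidbCluster, so a clean result here does not rule the problem out.

```shell
#!/bin/sh
# Hypothetical helper (not part of TiDB Operator): print each volumeMount
# name that has no volume/volumeClaimTemplate with the same name.
#   $1: whitespace-separated volume names
#   $2: whitespace-separated volumeMount names (duplicates allowed, e.g.
#       the same mount in several containers)
# Names are word-split unquoted on purpose; Kubernetes volume names
# contain no spaces or glob characters.
unmatched_mounts() {
  for mount in $2; do
    matched=no
    for vol in $1; do
      if [ "$mount" = "$vol" ]; then
        matched=yes
        break
      fi
    done
    if [ "$matched" = no ]; then
      printf '%s\n' "$mount"
    fi
  done | sort -u   # report each unmatched name once, sorted
}

# Example usage against a live cluster (names taken from this thread;
# uncomment to run):
# vols=$(kubectl get sts yz-tiflash -n tidb -o jsonpath='{.spec.template.spec.volumes[*].name} {.spec.volumeClaimTemplates[*].metadata.name}')
# mounts=$(kubectl get sts yz-tiflash -n tidb -o jsonpath='{.spec.template.spec.containers[*].volumeMounts[*].name}')
# unmatched_mounts "$vols" "$mounts"
```

An empty result means every mount name has a matching volume; with the spec from this thread you would expect data1 to be printed.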

I then edited the configuration online, keeping only the following:

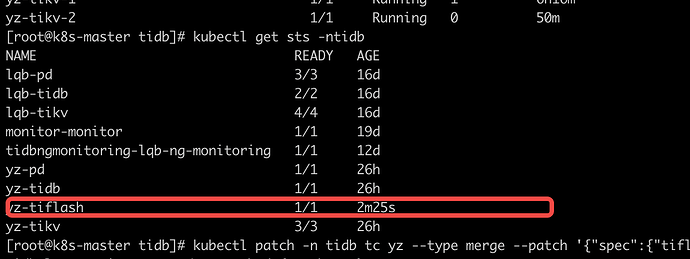

After that the error disappeared and TiFlash could be scaled out normally:

[root@k8s-master tidb]# kubectl patch -n tidb tc yz --type merge --patch '{"spec":{"tiflash":{"replicas":3}}}'
tidbcluster.pingcap.com/yz patched
[root@k8s-master tidb]# kubectl get pod -ntidb
NAME                                   READY   STATUS    RESTARTS   AGE
tidbngmonitoring-lqb-ng-monitoring-0   1/1     Running   0          138m
yz-discovery-6c89b45d5d-nkps7          1/1     Running   1          26h
yz-pd-0                                1/1     Running   2          26h
yz-tidb-0                              2/2     Running   2          26h
yz-tidb-1                              2/2     Running   0          4m15s
yz-tiflash-0                           4/4     Running   0          29m
yz-tiflash-1                           4/4     Running   0          4m15s
yz-tiflash-2                           4/4     Running   0          7s
yz-tikv-0                              1/1     Running   1          26h
yz-tikv-1                              1/1     Running   1          6h43m
yz-tikv-2                              1/1     Running   0          77m
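The scale-out itself is a single JSON merge patch on spec.tiflash.replicas. If you script it, generating the patch from a parameter avoids hand-editing quoted JSON. The helper below is a hypothetical sketch (tiflash_replicas_patch is not an operator or kubectl command). The commented usage lines reuse the namespace and cluster name from this thread, and the label selector for TiFlash pods is an assumption based on TiDB Operator's usual app.kubernetes.io labels.

```shell
#!/bin/sh
# Hypothetical helper: print the JSON merge patch that sets
# spec.tiflash.replicas. Rejects empty input, non-digits, and leading
# zeros (a number such as 03 is not valid JSON).
tiflash_replicas_patch() {
  case $1 in
    ''|*[!0-9]*|0?*)
      echo "replicas must be a non-negative integer without leading zeros: '$1'" >&2
      return 1
      ;;
  esac
  printf '{"spec":{"tiflash":{"replicas":%s}}}\n' "$1"
}

# Usage (names from this thread; the label selector is an assumption;
# uncomment to run):
# kubectl patch -n tidb tc yz --type merge --patch "$(tiflash_replicas_patch 3)"
# kubectl get tc yz -n tidb -o jsonpath='{.spec.tiflash.replicas}'
# kubectl get pod -n tidb -l app.kubernetes.io/instance=yz,app.kubernetes.io/component=tiflash
```

Validating the value before calling kubectl keeps a typo from reaching the API server as a malformed patch.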

Problem solved.
