Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: gc work is too busy

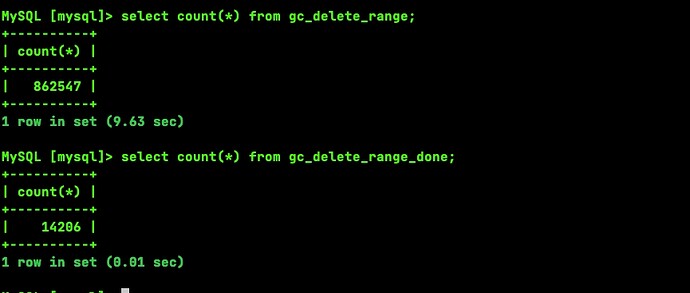

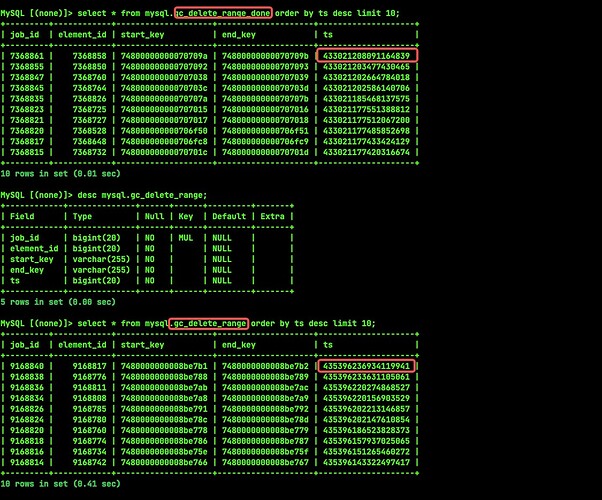

The TiDB log reports “gc work is too busy”. The GC safe point looks normal, but the results of querying gc_delete_range and gc_delete_range_done differ significantly.
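A minimal sketch of the checks described above, assuming a MySQL client session against TiDB with read access to the `mysql` schema. It lists the GC bookkeeping rows TiDB keeps in `mysql.tidb` (safe point, last run time, and so on) and counts the rows in the two delete-range tables. The actual values depend entirely on your cluster.

```sql
-- GC bookkeeping rows kept by TiDB (safe point, last run time, life time, ...)
SELECT VARIABLE_NAME, VARIABLE_VALUE
FROM mysql.tidb
WHERE VARIABLE_NAME LIKE 'tikv_gc%';

-- Ranges queued for deletion (for example by DROP/TRUNCATE) not yet processed by GC
SELECT COUNT(*) AS pending_ranges FROM mysql.gc_delete_range;

-- Ranges GC has already processed
SELECT COUNT(*) AS done_ranges FROM mysql.gc_delete_range_done;
```

If `pending_ranges` keeps growing between GC runs while the safe point advances normally, that would be consistent with a delete-range backlog rather than a stuck safe point.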

【TiDB Environment】 Production

【TiDB Version】 5.0.6

【Encountered Problem】

【Reproduction Path】 What operations were performed to cause the problem

【Problem Phenomenon and Impact】

【Attachments】

Please provide the version of each component (for example cdc or tikv), which you can get by running `cdc version` or `tikv-server --version`.


Are you making a large number of changes? Try the following parameter:

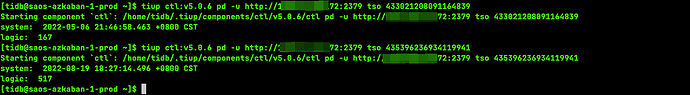

You can set parallel GC to speed up space reclamation. The default concurrency is 1, and it can be adjusted to a maximum of 50% of the number of TiKV instances. You can use the following command to modify it:

```sql
update mysql.tidb set variable_value = '3' where variable_name = 'tikv_gc_concurrency';
```

Check whether this variable_name exists. Also, the system runs a large number of TRUNCATE operations.

Only tikv_gc_auto_concurrency exists.

The comment for tikv_gc_auto_concurrency in mysql.tidb says: if tikv_gc_auto_concurrency is set to false, tikv_gc_concurrency takes effect, and whether GC runs serially or in parallel depends on the value you set for tikv_gc_concurrency.
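Following that comment, a sketch of switching to a manually set concurrency. It assumes the usual `mysql.tidb` layout (`VARIABLE_NAME` as primary key, plus `VARIABLE_VALUE` and `COMMENT`). Because `tikv_gc_concurrency` is missing here, an upsert is used so the statement works whether or not the row exists. The value `3` is only the example used earlier in this thread, and the `COMMENT` text is a hypothetical placeholder.

```sql
-- Stop TiDB from choosing the GC concurrency automatically
UPDATE mysql.tidb
SET VARIABLE_VALUE = 'false'
WHERE VARIABLE_NAME = 'tikv_gc_auto_concurrency';

-- Create the manual setting if missing, otherwise update it (3 is only an example;
-- the COMMENT string is a hypothetical placeholder)
INSERT INTO mysql.tidb (VARIABLE_NAME, VARIABLE_VALUE, COMMENT)
VALUES ('tikv_gc_concurrency', '3', 'GC concurrency, set manually')
ON DUPLICATE KEY UPDATE VARIABLE_VALUE = '3';

-- Confirm both settings
SELECT VARIABLE_NAME, VARIABLE_VALUE
FROM mysql.tidb
WHERE VARIABLE_NAME IN ('tikv_gc_auto_concurrency', 'tikv_gc_concurrency');
```

If the 4.0 GC documentation also applies to 5.0.6, automatic concurrency already uses the number of TiKV nodes (17 here), and in distributed `tikv_gc_mode` the concurrency only affects the Resolve Locks step. In that case a manual value of 3 may not speed up the delete-range backlog at all, so it is worth checking before changing it.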

However, I couldn’t find the explanations for tikv_gc_auto_concurrency and tikv_gc_concurrency in the official documentation.

They are described in the version 4.0 documentation: GC Configuration | PingCAP Archived Docs

Now that there are 17 TiKV nodes, do we still need to change this parameter?

Each TRUNCATE has consistently taken about 5 minutes, possibly because of a continuous backlog. Is there any way to deal with it now?
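To check whether the slow TRUNCATEs line up with a backlog, here is a sketch that lists the oldest unprocessed delete ranges per DDL job. It assumes `mysql.gc_delete_range` has `job_id` and `ts` columns and that the `TIDB_PARSE_TSO` function (which converts a TSO into a datetime) is available in this version.

```sql
-- Oldest pending delete ranges, grouped by the DDL job that queued them
SELECT job_id,
       COUNT(*)                AS ranges,
       TIDB_PARSE_TSO(MIN(ts)) AS oldest_ts_time
FROM mysql.gc_delete_range
GROUP BY job_id
ORDER BY MIN(ts)
LIMIT 20;
```

The `job_id` values can then be matched against the output of `ADMIN SHOW DDL JOBS` to see which TRUNCATE or DROP statements queued them. Very old timestamps here would suggest the ranges are piling up faster than GC clears them.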

Link: Baidu Netdisk - link does not exist. Extraction code: aqb6

The TiDB log is too large, so I uploaded it to the cloud drive. Sorry for the trouble!

I looked at the logs and it seems to be the same issue. You can refer to this:

This error appeared before as well. The cluster was restarted once on Monday, but the error still occurs and the space still cannot be reclaimed.

This message is not covered in the official documentation. Try locating it in the source code to see under what conditions it is logged.