Here is the explain analyze information for the old database v6.0.5:

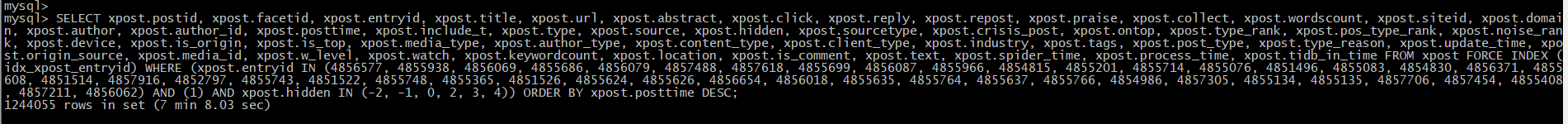

mysql>

mysql> explain analyze SELECT xpost.postid, xpost.facetid, xpost.entryid, xpost.title, xpost.url, xpost.abstract, xpost.click, xpost.reply, xpost.repost, xpost.praise, xpost.collect, xpost.wordscount, xpost.siteid, xpost.domain, xpost.author, xpost.author_id, xpost.posttime, xpost.include_t, xpost.type, xpost.source, xpost.hidden, xpost.sourcetype, xpost.crisis_post, xpost.ontop, xpost.type_rank, xpost.pos_type_rank, xpost.noise_rank, xpost.device, xpost.is_origin, xpost.is_top, xpost.media_type, xpost.author_type, xpost.content_type, xpost.client_type, xpost.industry, xpost.tags, xpost.post_type, xpost.type_reason, xpost.update_time, xpost.origin_source, xpost.media_id, xpost.w_level, xpost.watch, xpost.keywordcount, xpost.location, xpost.is_comment, xpost.text, xpost.spider_time, xpost.process_time, xpost.tidb_in_time FROM xpost FORCE INDEX (idx_xpost_entryid) WHERE (xpost.entryid IN (4856577, 4855938, 4856069, 4855686, 4856079, 4857488, 4857618, 4855699, 4856087, 4855966, 4854815, 4855201, 4855714, 4855076, 4851496, 4855083, 4854830, 4856371, 4855608, 4851514, 4857916, 4852797, 4855743, 4851522, 4855748, 4855365, 4851526, 4855624, 4855626, 4856654, 4856018, 4855635, 4855764, 4855637, 4855766, 4854986, 4857305, 4855134, 4855135, 4857706, 4857454, 4855408, 4857211, 4856062) AND (1) AND xpost.hidden IN (-2, -1, 0, 2, 3, 4)) ORDER BY xpost.posttime DESC \G;

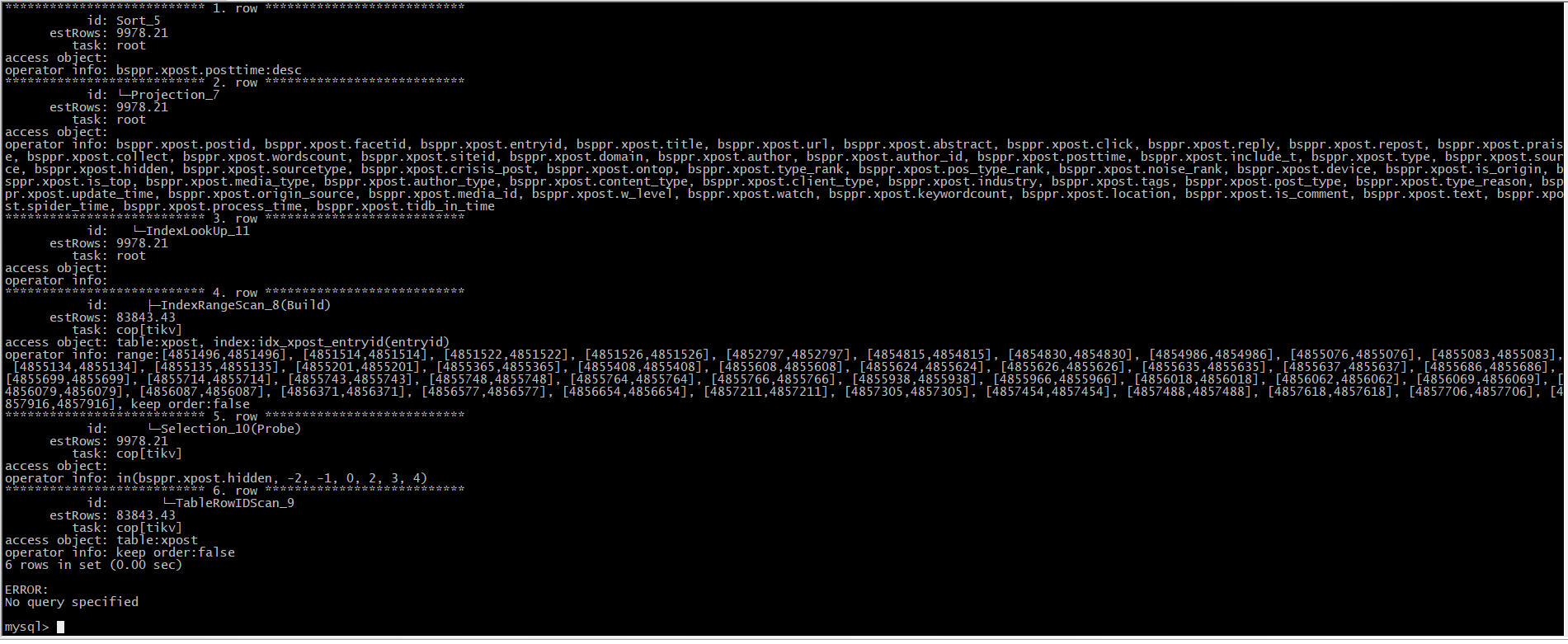

*************************** 1. row ***************************

id: Sort_5

estRows: 9978.21

actRows: 1244055

task: root

access object:

execution info: time:7m37.3s, loops:1216

operator info: bsppr.xpost.posttime:desc

memory: 956.7 MB

disk: 1.06 GB

*************************** 2. row ***************************

id: └─Projection_7

estRows: 9978.21

actRows: 1244055

task: root

access object:

execution info: time:7.45s, loops:1216, Concurrency:5

operator info: bsppr.xpost.postid, bsppr.xpost.facetid, bsppr.xpost.entryid, bsppr.xpost.title, bsppr.xpost.url, bsppr.xpost.abstract, bsppr.xpost.click, bsppr.xpost.reply, bsppr.xpost.repost, bsppr.xpost.praise, bsppr.xpost.collect, bsppr.xpost.wordscount, bsppr.xpost.siteid, bsppr.xpost.domain, bsppr.xpost.author, bsppr.xpost.author_id, bsppr.xpost.posttime, bsppr.xpost.include_t, bsppr.xpost.type, bsppr.xpost.source, xpost.hidden, xpost.sourcetype, xpost.crisis_post, xpost.ontop, xpost.type_rank, xpost.pos_type_rank, xpost.noise_rank, xpost.device, xpost.is_origin, xpost.is_top, xpost.media_type, xpost.author_type, xpost.content_type, xpost.client_type, xpost.industry, xpost.tags, xpost.post_type, xpost.type_reason, xpost.update_time, xpost.origin_source, xpost.media_id, xpost.w_level, xpost.watch, xpost.keywordcount, xpost.location, xpost.is_comment, xpost.text, xpost.spider_time, xpost.process_time, xpost.tidb_in_time

memory: 4.49 MB

disk: N/A

*************************** 3. row ***************************

id: └─IndexLookUp_11

estRows: 9978.21

actRows: 1244055

task: root

access object:

execution info: time:7.83s, loops:1216, index_task: {total_time: 7.32s, fetch_handle: 131.1ms, build: 197.4µs, wait: 7.18s}, table_task: {total_time: 38s, num: 65, concurrency: 5}, next: {wait_index: 2.97ms, wait_table_lookup_build: 1.05ms, wait_table_lookup_resp: 3.67s}

operator info:

memory: 181.9 MB

disk: N/A

*************************** 4. row ***************************

id: ├─IndexRangeScan_8(Build)

estRows: 83843.43

actRows: 1244114

task: cop[tikv]

access object: table:xpost, index:idx_xpost_entryid(entryid)

execution info: time:27.3ms, loops:1237, cop_task: {num: 138, max: 194.7ms, min: 1.23ms, avg: 24ms, p95: 106.5ms, max_proc_keys: 50144, p95_proc_keys: 50144, tot_proc: 930ms, tot_wait: 127ms, rpc_num: 138, rpc_time: 3.31s, copr_cache_hit_ratio: 0.09, distsql_concurrency: 15}, tikv_task:{proc max:59ms, min:0s, avg: 7.15ms, p80:9ms, p95:28ms, iters:1742, tasks:138}, scan_detail: {total_process_keys: 1192678, total_process_keys_size: 54863188, total_keys: 1482570, get_snapshot_time: 134.4ms, rocksdb: {delete_skipped_count: 19, key_skipped_count: 1482423, block: {cache_hit_count: 2451, read_count: 5, read_byte: 83.5 KB, read_time: 207.5µs}}}

operator info: range:[4851496,4851496], [4851514,4851514], [4851522,4851522], [4851526,4851526], [4852797,4852797], [4854815,4854815], [4854830,4854830], [4854986,4854986], [4855076,4855076], [4855083,4855083], [4855134,4855134], [4855135,4855135], [4855201,4855201], [4855365,4855365], [4855408,4855408], [4855608,4855608], [4855624,4855624], [4855626,4855626], [4855635,4855635], [4855637,4855637], [4855686,4855686], [4855699,4855699], [4855714,4855714], [4855743,4855743], [4855748,4855748], [4855764,4855764], [4855766,4855766], [4855938,4855938], [4855966,4855966], [4856018,4856018], [4856062,4856062], [4856069,4856069], [4856079,4856079], [4856087,4856087], [4856371,4856371], [4856577,4856577], [4856654,4856654], [4857211,4857211], [4857305,4857305], [4857454,4857454], [4857488,4857488], [4857618,4857618], [4857706,4857706], [4857916,4857916], keep order:false

memory: N/A

disk: N/A

*************************** 5. row ***************************

id: └─Selection_10(Probe)

estRows: 9978.21

actRows: 1244055

task: cop[tikv]

access object:

execution info: time:37s, loops:1354, cop_task: {num: 8457, max: 1.28s, min: 728.6µs, avg: 51.8ms, p95: 218.7ms, max_proc_keys: 16590, p95_proc_keys: 636, tot_proc: 56.4s, tot_wait: 12.9s, rpc_num: 8457, rpc_time: 7m17.7s, copr_cache_hit_ratio: 0.09, distsql_concurrency: 15}, tikv_task:{proc max:620ms, min:0s, avg: 7.78ms, p80:7ms, p95:35ms, iters:15378, tasks:8457}, scan_detail: {total_process_keys: 1078696, total_process_keys_size: 739576989, total_keys: 1227196, get_snapshot_time: 12.6s, rocksdb: {delete_skipped_count: 16, key_skipped_count: 413543, block: {cache_hit_count: 11354691, read_count: 5, read_byte: 80.2 KB, read_time: 1.37ms}}}

operator info: in(bsppr.xpost.hidden, -2, -1, 0, 2, 3, 4)

memory: N/A

disk: N/A

*************************** 6. row ***************************

id: └─TableRowIDScan_9

estRows: 83843.43

actRows: 1244114

task: cop[tikv]

access object: table:xpost

execution info: tikv_task:{proc max:620ms, min:0s, avg: 7.76ms, p80:7ms, p95:35ms, iters:15378, tasks:8457}

operator info: keep order:false

memory: N/A

disk: N/A

6 rows in set (7 min 37.55 sec)

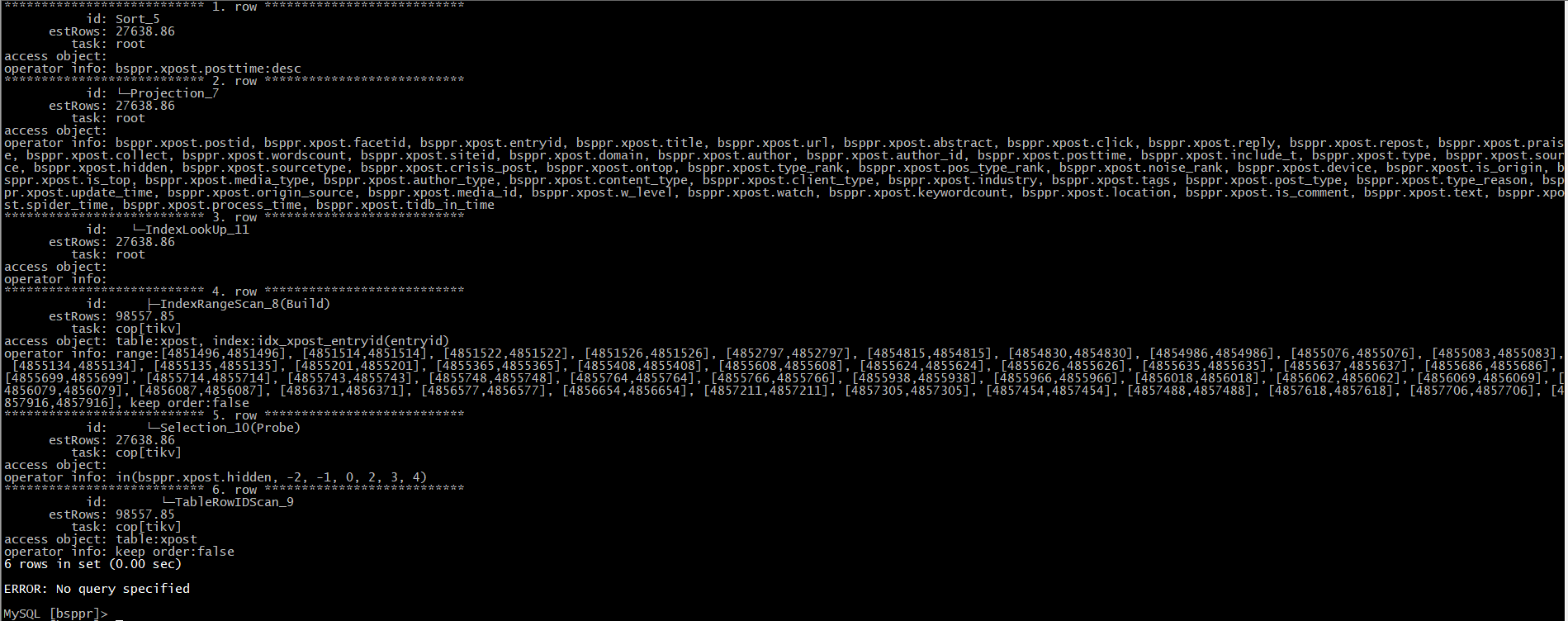

Since the old database has been upgraded and cannot be rolled back to explain, here is the explain analyze information for the production database v4.0.9:

MySQL [bsppr]>

MySQL [bsppr]>

MySQL [bsppr]> explain analyze SELECT xpost.postid, xpost.facetid, xpost.entryid, xpost.title, xpost.url, xpost.abstract, xpost.click, xpost.reply, xpost.repost, xpost.praise, xpost.collect, xpost.wordscount, xpost.siteid, xpost.domain, xpost.author, xpost.author_id, xpost.posttime, xpost.include_t, xpost.type, xpost.source, xpost.hidden, xpost.sourcetype, xpost.crisis_post, xpost.ontop, xpost.type_rank, xpost.pos_type_rank, xpost.noise_rank, xpost.device, xpost.is_origin, xpost.is_top, xpost.media_type, xpost.author_type, xpost.content_type, xpost.client_type, xpost.industry, xpost.tags, xpost.post_type, xpost.type_reason, xpost.update_time, xpost.origin_source, xpost.media_id, xpost.w_level, xpost.watch, xpost.keywordcount, xpost.location, xpost.is_comment, xpost.text, xpost.spider_time, xpost.process_time, xpost.tidb_in_time FROM xpost FORCE INDEX (idx_xpost_entryid) WHERE (xpost.entryid IN (4856577, 4855938, 4856069, 4855686, 4856079, 4857488, 4857618, 4855699, 4856087, 4855966, 4854815, 4855201, 4855714, 4855076, 4851496, 4855083, 4854830, 4856371, 4855608, 4851514, 4857916, 4852797, 4855743, 4851522, 4855748, 4855365, 4851526, 4855624, 4855626, 4856654, 4856018, 4855635, 4855764, 4855637, 4855766, 4854986, 4857305, 4855134, 4855135, 4857706, 4857454, 4855408, 4857211, 4856062) AND (1) AND xpost.hidden IN (-2, -1, 0, 2, 3, 4)) ORDER BY xpost.posttime DESC\G;

*************************** 1. row ***************************

id: Sort_5

estRows: 27643.42

actRows: 1244179

task: root

access object:

execution info: time:24.614187157s, loops:1217

operator info: bsppr.xpost.posttime:desc

memory: 982.008674621582 MB

disk: 1.2011287668719888 GB

*************************** 2. row ***************************

id: └─Projection_7

estRows: 27643.42

actRows: 1244179

task: root

access object:

execution info: time:7.16124316s, loops:1217, Concurrency:4

operator info: bsppr.xpost.postid, bsppr.xpost.facetid, bsppr.xpost.entryid, bsppr.xpost.title, bsppr.xpost.url, bsppr.xpost.abstract, bsppr.xpost.click, bsppr.xpost.reply, bsppr.xpost.repost, bsppr.xpost.praise, bsppr.xpost.collect, bsppr.xpost.wordscount, bsppr.xpost.siteid, bsppr.xpost.domain, bsppr.xpost.author, bsppr.xpost.author_id, bsppr.xpost.posttime, bsppr.xpost.include_t, bsppr.xpost.type, bsppr.xpost.source, xpost.hidden, xpost.sourcetype, xpost.crisis_post, xpost.ontop, xpost.type_rank, xpost.pos_type_rank, xpost.noise_rank, xpost.device, xpost.is_origin, xpost.is_top, xpost.media_type, xpost.author_type, xpost.content_type, xpost.client_type, xpost.industry, xpost.tags, xpost.post_type, xpost.type_reason, xpost.update_time, xpost.origin_source, xpost.media_id, xpost.w_level, xpost.watch, xpost.keywordcount, xpost.location, xpost.is_comment, xpost.text, xpost.spider_time, xpost.process_time, xpost.tidb_in_time

memory: 3.3519744873046875 MB

disk: N/A

*************************** 3. row ***************************

id: └─IndexLookUp_11

estRows: 27643.42

actRows: 1244179

task: root

access object:

execution info: time:7.18731351s, loops:1217, index_task:35.031861ms, table_task:{num:64, concurrency:4, time:28.32257032s}

operator info:

memory: 115.38516521453857 MB

disk: N/A

*************************** 4. row ***************************

id: ├─IndexRangeScan_8(Build)

estRows: 98574.13

actRows: 1244179

task: cop[tikv]

access object: table:xpost, index:idx_xpost_entryid(entryid)

execution info: time:6.61509143s, loops:64, cop_task: {num: 18, max: 446.377326ms, min: 1.085309ms, avg: 56.777582ms, p95: 446.377326ms, max_proc_keys: 601446, p95_proc_keys: 601446, tot_proc: 685ms, tot_wait: 24ms, rpc_num: 18, rpc_time: 1.021157901s, copr_cache_hit_ratio: 0.00}, tikv_task:{proc max:284ms, min:0s, p80:50ms, p95:284ms, iters:1288, tasks:18}

operator info: range:[4851496,4851496], [4851514,4851514], [4851522,4851522], [4851526,4851526], [4852797,4852797], [4854815,4854815], [4854830,4854830], [4854986,4854986], [4855076,4855076], [4855083,4855083], [4855134,4855134], [4855135,4855135], [4855201,4855201], [4855365,4855365], [4855408,4855408], [4855608,4855608], [4855624,4855624], [4855626,4855626], [4855635,4855635], [4855637,4855637], [4855686,4855686], [4855699,4855699], [4855714,4855714], [4855743,4855743], [4855748,4855748], [4855764,4855764], [4855766,4855766], [4855938,4855938], [