Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Help: after adding the filter parameter to tidb-lightning 6.0, importing a CSV file reports "table xxx schema not found"

【TiDB Usage Environment】Test Environment

【TiDB Version】6.0.0

【Encountered Issue】

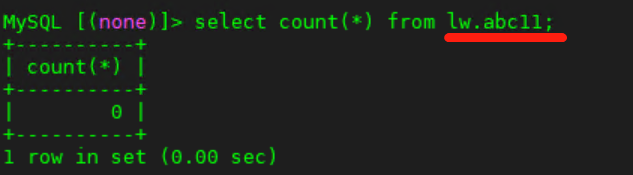

After I added the filter parameter to tidb-lightning 6.0, importing CSV files reports “table xxx schema not found.”

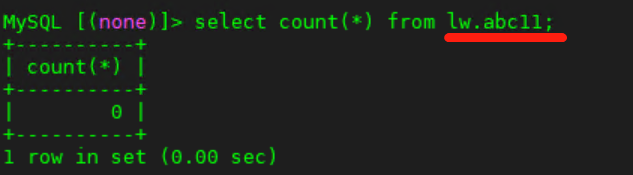

The naming format of the CSV is: lw.abc11.csv

The abc11 table in the lw database does indeed exist. I also tried adding the following in the parameter configuration file:

filter = ['*.*', '!mysql.*', '!sys.*', '!INFORMATION_SCHEMA.*', '!PERFORMANCE_SCHEMA.*', '!METRICS_SCHEMA.*', '!INSPECTION_SCHEMA.*']

Also tried

filter = ['*.*']
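To make it easier to reason about what such a filter list would let through, here is a minimal Python sketch of the rule evaluation. It is an approximation, not Lightning's actual implementation: it assumes the rules use the `schema.table` wildcard form (`*.*`, `!mysql.*`), that a leading `!` excludes, that the last matching rule wins, and that names are compared case-insensitively. The function name `table_allowed` is hypothetical, and the real filter syntax supports more than this sketch covers.

```python
from fnmatch import fnmatchcase

# Rule list in the wildcard form assumed by this sketch.
DEFAULT_RULES = [
    "*.*", "!mysql.*", "!sys.*", "!INFORMATION_SCHEMA.*",
    "!PERFORMANCE_SCHEMA.*", "!METRICS_SCHEMA.*", "!INSPECTION_SCHEMA.*",
]

def table_allowed(schema, table, rules):
    """Return True if schema.table passes the rules (last matching rule wins)."""
    allowed = False
    for rule in rules:
        exclude = rule.startswith("!")
        pattern = rule[1:] if exclude else rule
        schema_pat, _, table_pat = pattern.partition(".")
        # Assumption: matching is case-insensitive, so lowercase both sides.
        if (fnmatchcase(schema.lower(), schema_pat.lower())
                and fnmatchcase(table.lower(), table_pat.lower())):
            allowed = not exclude
    return allowed

print(table_allowed("lw", "abc11", DEFAULT_RULES))
print(table_allowed("mysql", "user", DEFAULT_RULES))
```

Under these assumptions, `lw.abc11` passes both filter lists tried above, which suggests the filter itself is not what hides the table.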

Error logs are attached.

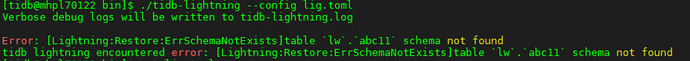

If I rename the CSV so that it no longer follows the database.table format, for example 123456.csv, it throws this kind of error:

May I ask, what parameters need to be added to avoid this issue?

Or are there any CSV import examples that can be referenced, thank you~

I have looked at some cases online which say that Lightning has a bug where case sensitivity causes the table not to be found, but this database and table are all lowercase, so that should not be the issue here.

log.txt (6.2 KB)

This is probably because Lightning is not configured correctly. A normal Lightning import requires creating the table first and then importing the data. Since the CSV does not carry a table structure, you need to account for that in the configuration file. Try setting the no-schema option to true so that Lightning skips importing the table structure.

This has already been set to true, but it’s still the same

Send your configuration file over. It seems like there might be a configuration error, and it’s recognizing the whole thing as a database name.

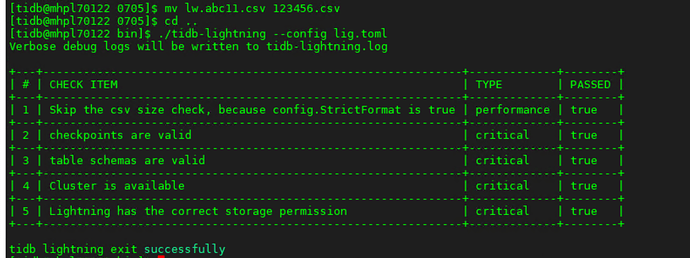

I have deleted your previous reply because the connection information in it was not redacted, which risks leaking sensitive information. Let me look at the specific issue. Try changing the CSV file name to something like this: lw.abc11.0000csv. Also, switch the mode and clear the checkpoints first:

bin/tidb-lightning-ctl -config ./lighting.toml --switch-mode=normal

tidb-lightning-ctl -config ./lighting.toml --checkpoint-error-destroy='`schema`.`table`' # =all means all databases and tables

Then try the following methods:

- Write the file name like this: lw.abc11.0000csv

- Change the import mode to tidb

Check if each method works and if there are any errors.

Thank you, boss, it works now! Thanks.

Which method did you use to solve it? Could you please share?

After rechecking, there are a few issues:

- sorted-kv-dir was set to /tmp, which is not an empty directory.

- status-port was left at the default, but the actual port is not 10080.

- After using this:

./tidb-lightning-ctl -config ./lig.toml --switch-mode=tidb

./tidb-lightning-ctl -config ./lig.toml --checkpoint-error-destroy=all

The sorted-kv-dir folder gets deleted and needs to be recreated.

- The format needs to be written as: lw.abc11.csv

If written as lw.abc11.0000csv, it won’t report an error, but no data will be imported.
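The file naming point can be illustrated with a small Python sketch. It assumes the data file is expected to be named `schema.table.csv`, optionally with a numeric part before the extension (`schema.table.001.csv`). The regular expression and the helper `parse_data_file` are hypothetical and only approximate how a name might be mapped to a database and table; they are not Lightning's own code.

```python
import re

# Hypothetical pattern: schema.table.csv, or schema.table.<digits>.csv
DATA_FILE = re.compile(
    r"^(?P<schema>[^.]+)\.(?P<table>[^.]+)(?:\.(?P<seq>\d+))?\.csv$"
)

def parse_data_file(name):
    """Return (schema, table) if the name fits the pattern, else None."""
    m = DATA_FILE.match(name)
    if m is None:
        return None
    return m.group("schema"), m.group("table")

for name in ["lw.abc11.csv", "lw.abc11.0000csv", "123456.csv"]:
    print(name, "->", parse_data_file(name))
```

In this sketch, `lw.abc11.0000csv` does not end in `.csv`, so it is not recognized as a data file at all. That would be consistent with the observation above that no error is reported but no data is imported.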

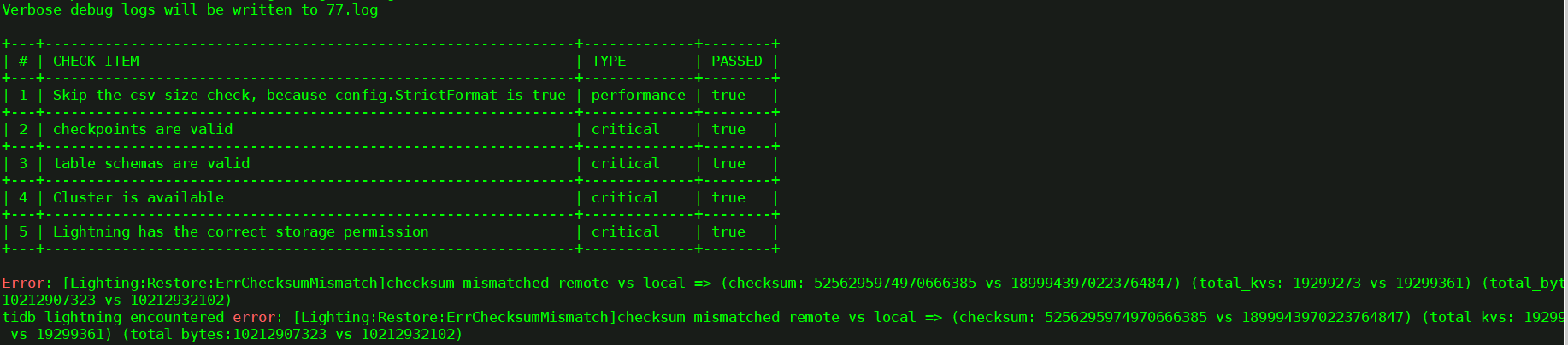

After trying to import 100 rows of data, it worked fine, but when importing 200 million rows, there was an error, possibly due to the large data volume:

Error: [Lightning:Restore:ErrChecksumMismatch] checksum mismatched remote vs local => (checksum: 5256295974970666385 vs 1899943970223764847) (total_kvs: 19299273 vs 19299361) (total_bytes:10212907323 vs 10212932102)

tidb lightning encountered error: [Lightning:Restore:ErrChecksumMismatch] checksum mismatched remote vs local => (checksum: 5256295974970666385 vs 1899943970223764847) (total_kvs: 19299273 vs 19299361) (total_bytes:10212907323 vs 10212932102)
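To read the numbers in this message more easily, the following Python sketch extracts the remote and local values and prints the difference for each field. The helper name `checksum_diffs` is hypothetical; the message text is copied from the error above, and the sketch only does arithmetic on it without diagnosing the cause.

```python
import re

MESSAGE = (
    "checksum mismatched remote vs local => "
    "(checksum: 5256295974970666385 vs 1899943970223764847) "
    "(total_kvs: 19299273 vs 19299361) "
    "(total_bytes:10212907323 vs 10212932102)"
)

def checksum_diffs(message):
    """Map each field name to (remote, local, remote - local)."""
    result = {}
    for field, remote, local in re.findall(r"\((\w+):\s*(\d+) vs (\d+)\)", message):
        r, l = int(remote), int(local)
        result[field] = (r, l, r - l)
    return result

for field, (remote, local, delta) in checksum_diffs(MESSAGE).items():
    print(f"{field}: remote={remote} local={local} diff={delta}")
```

For this message, the remote side has 88 fewer KV pairs and 24779 fewer bytes than the local side.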

Also, how can I run the import in parallel with multiple threads?

The first error might be because you did not clear the table after the previous import. When you import the data again, the checksum fails because the data in the table is inconsistent with the local data. You can clear the table, clear the checkpoints, and then re-import the large file.

You can refer to this for parallel import:

Thank you for your support.
