Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: 希望有一个对tidb查询和插入性能的基本概念 (Hoping to get a basic understanding of TiDB query and insert performance)

I previously used Oracle, so I use it as the baseline for comparison in this question. The test table has 40 million rows and about 10 fields, with ID as the primary key.

TiDB cluster: 3 PD nodes with 8 cores and 16 GB RAM, 3 DB nodes with 16 cores and 32 GB RAM, 6 KV nodes with 16 cores and 32 GB RAM, and 1 TB storage.

Oracle single machine: 16 cores and 32 GB RAM.

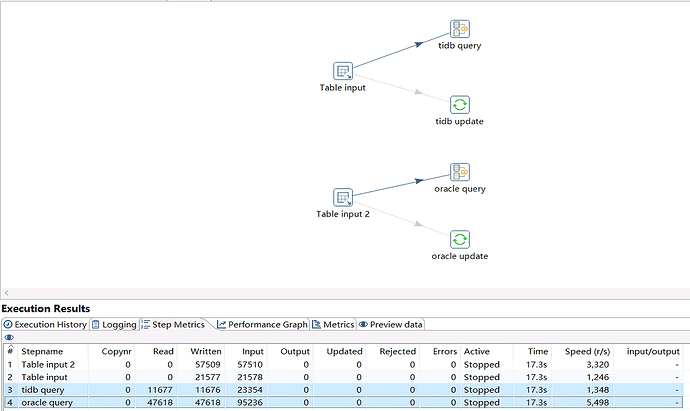

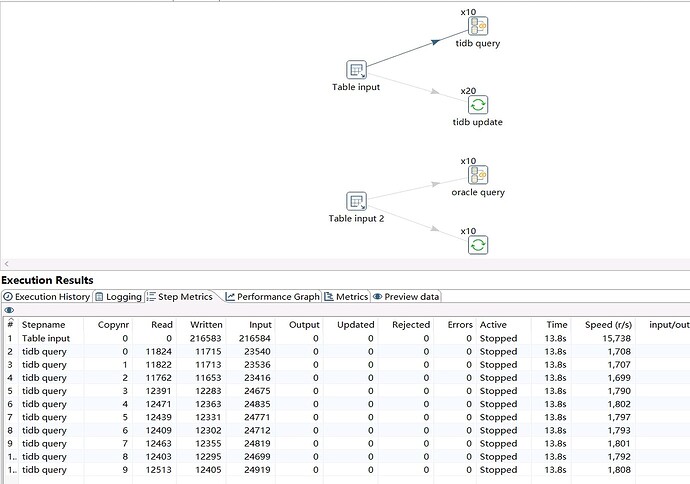

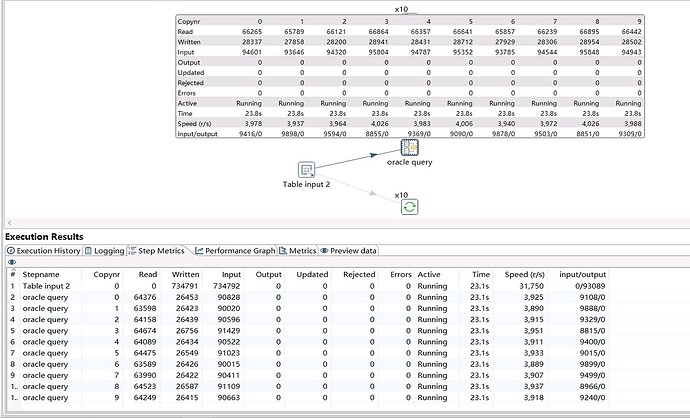

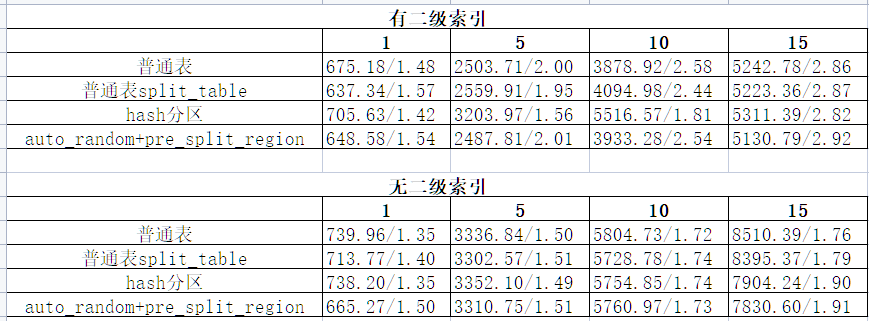

The first test covers inserts. In TiDB, I inserted data into the test table first over a single connection and then over multiple simultaneous connections. Within a certain range, the more connections there are, the higher the total insertion speed (the sum of the per-connection speeds), which peaks at about 20,000 rows per second. Oracle, by contrast, is already very fast over a single connection when batch processing is used (also about 20,000 rows per second in this test). With multiple simultaneous connections, the speed of each Oracle connection drops, and the total stays roughly the same as with a single connection.
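To make "total insertion speed" concrete, here is a minimal sketch of how the per-connection speeds can be measured and added together. It is not the harness used for the numbers above. `measure_throughput` and `fake_insert_batch` are hypothetical names, and `fake_insert_batch` only sleeps; in a real test it would run a batched `INSERT` on a dedicated database connection.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def measure_throughput(insert_batch, connections, batches_per_connection, batch_size):
    """Run insert_batch on `connections` threads; return (per-connection rows/s, total rows/s)."""

    def worker(conn_id):
        start = time.perf_counter()
        for _ in range(batches_per_connection):
            insert_batch(conn_id, batch_size)
        elapsed = time.perf_counter() - start
        return batches_per_connection * batch_size / elapsed

    with ThreadPoolExecutor(max_workers=connections) as pool:
        rates = list(pool.map(worker, range(connections)))
    # Total speed is defined as the sum of the per-connection speeds.
    return rates, sum(rates)


def fake_insert_batch(conn_id, batch_size):
    # Hypothetical stand-in: a real test would execute a batched INSERT
    # of `batch_size` rows on the connection identified by `conn_id`.
    time.sleep(0.001)


if __name__ == "__main__":
    for n in (1, 4, 16):
        rates, total = measure_throughput(fake_insert_batch, n, 20, 100)
        print(f"{n} connections: total {total:.0f} rows/s")
```

Running the same function with an increasing number of connections shows whether the total keeps growing (as with TiDB inserts here) or stays flat while each connection slows down (as with Oracle).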

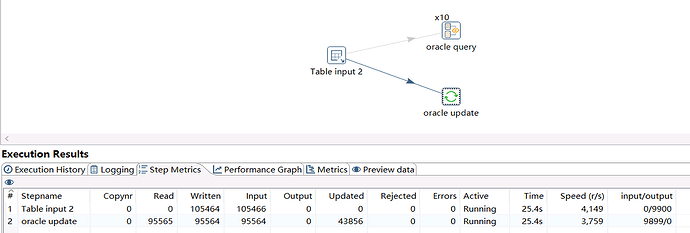

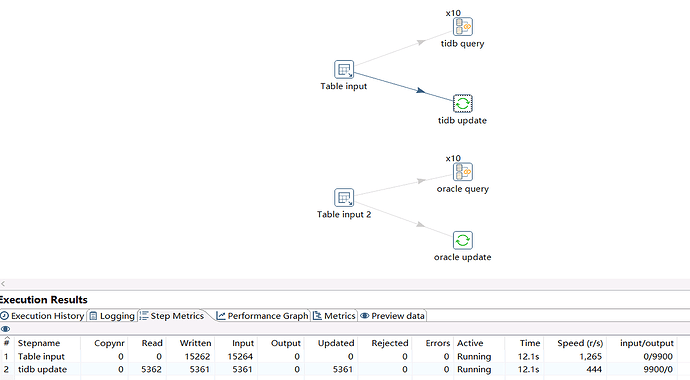

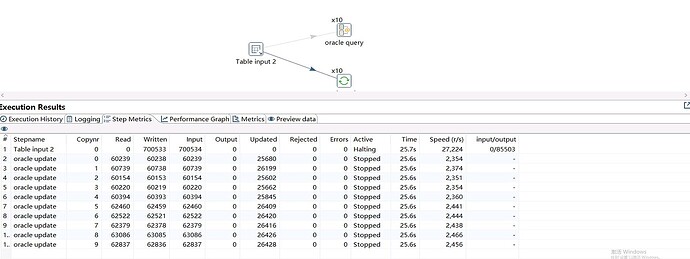

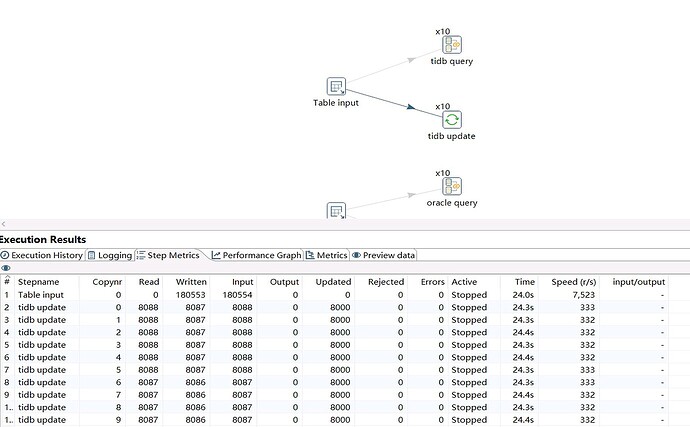

The second test covers updates. Each statement looks up a row by its primary key ID and updates two of its fields. Over a single connection, Oracle updates about 5,000 rows per second, while TiDB updates 500 rows per second. When the number of connections increases, Oracle behaves much as it did for inserts: the single-connection speed is divided among the connections. TiDB, however, does not scale here the way it did for inserts: when multiple connections update simultaneously, the overall speed does not increase, and the original single-connection speed is divided among the connections.
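As a rough way to read these update numbers, the sketch below converts single-connection throughput into average time per row, and computes what the total would be if every extra connection added its full single-connection speed. Both helper names are hypothetical. The calculation assumes each connection issues one update at a time and waits for it to finish, and the linear-scaling figure is only an idealized reference, not something measured.

```python
def per_row_latency_ms(rows_per_second):
    """Average time spent per row on one connection, in milliseconds."""
    return 1000.0 / rows_per_second


def ideal_total_rate(single_connection_rate, connections):
    """Total rows/s if every connection kept its single-connection speed (idealized)."""
    return single_connection_rate * connections


if __name__ == "__main__":
    print("Oracle ms per row:", per_row_latency_ms(5000))
    print("TiDB ms per row:", per_row_latency_ms(500))
    # Reference point only: what perfectly linear scaling would give for TiDB.
    print("TiDB ideal total at 10 connections:", ideal_total_rate(500, 10))
```

Under these assumptions, 5,000 rows per second corresponds to 0.2 ms per row and 500 rows per second to 2 ms per row. The observed TiDB total stayed near the single-connection figure instead of approaching the idealized one.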

Is this behavior normal for TiDB? Since the test scenario is quite general, I did not provide test data. If needed, I can upload it. Thank you.