Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: How can I improve performance with TiDB 4.0?

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version]

[Reproduction Path] What operations were performed when the issue occurred

[Encountered Issue: Issue Phenomenon and Impact]

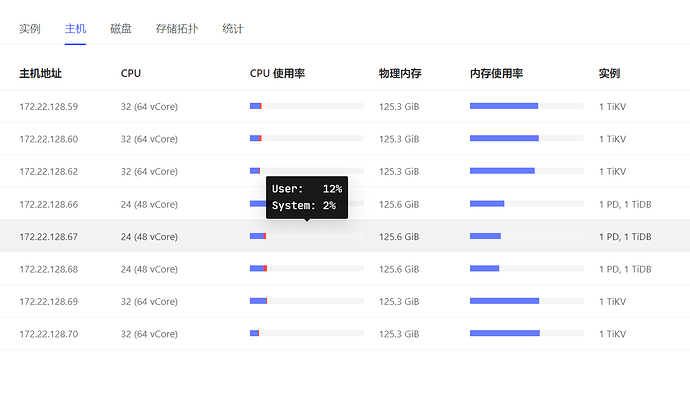

[Resource Configuration] Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

[Attachments: Screenshots / Logs / Monitoring]

Our production TiDB cluster currently has a write performance problem.

We are currently using TiDB version 4.0.9.

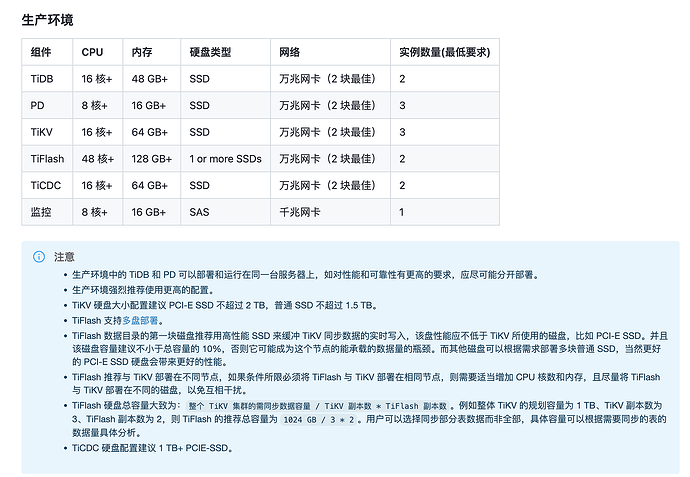

The TiKV tier consists of 5 servers, each with 32 cores, 64 GB of RAM, and NVMe SSDs.

PD and tidb-server are co-located on 3 servers, each with 48 cores and 128 GB of RAM.

Each of the 5 TiKV nodes has 15 TB of disk space, of which about 3 TB is used and 11-12 TB remains free.

Currently, 90 application servers write to the TiDB cluster concurrently, and the peak write throughput we can reach is only about 11 MB/s. Could anyone suggest ways to improve concurrent insert performance, or other optimizations, given the current version and hardware configuration?
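To put the 11 MB/s figure in perspective, the following back-of-envelope sketch breaks it down per client and per TiKV node. It assumes writes are spread evenly across clients and nodes and that the cluster uses TiKV's default of 3 replicas per Region. The replica count is not stated in the post. It also ignores write amplification from the Raft log and RocksDB compaction, so actual disk writes per node will be higher.

```python
# Back-of-envelope breakdown of the reported peak write rate.
# Assumptions (not stated in the post): load is spread evenly across
# clients and TiKV nodes, and each Region has the default 3 replicas.
# Raft log and RocksDB compaction write amplification are ignored.

PEAK_WRITE_MB_S = 11.0   # peak cluster-wide write rate reported in the post
CLIENTS = 90             # application servers writing concurrently
TIKV_NODES = 5           # TiKV servers in the cluster
REPLICAS = 3             # assumed default replica count


def per_client_kb_s(total_mb_s: float, clients: int) -> float:
    """Average write rate each client contributes, in KB/s (1 MB = 1024 KB)."""
    return total_mb_s * 1024 / clients


def per_node_mb_s(total_mb_s: float, nodes: int, replicas: int) -> float:
    """Average replicated write volume each TiKV node absorbs, in MB/s."""
    return total_mb_s * replicas / nodes


if __name__ == "__main__":
    print(f"per client: {per_client_kb_s(PEAK_WRITE_MB_S, CLIENTS):.1f} KB/s")
    print(f"per TiKV node (x{REPLICAS} replicas): "
          f"{per_node_mb_s(PEAK_WRITE_MB_S, TIKV_NODES, REPLICAS):.1f} MB/s")
```

Under these assumptions, each client averages roughly 125 KB/s and each TiKV node absorbs roughly 6.6 MB/s of replicated writes. These averages are small compared with typical NVMe bandwidth. They are mainly useful as a baseline when comparing against per-node write rates in monitoring. They cannot reveal uneven load such as write hotspots.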