Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: 新增kv实例,如何快速region数据均衡 (After adding KV instances, how can region data be balanced quickly?)

Production environment v5.4.0

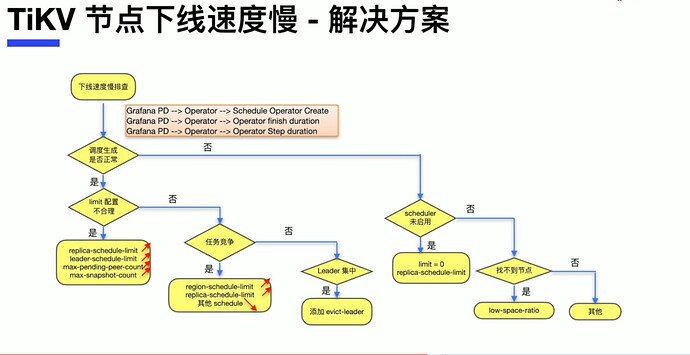

There are 3 KV instances in total, and disk space is now running low. We plan to scale out by adding 3 more KV instances. How can we speed up region data balancing?

Would adjusting the following parameters help?

```
config set leader-schedule-limit 400
config set region-schedule-limit 20480000
config set max-pending-peer-count 64000
config set max-snapshot-count 64000
store limit all 200
```
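As a rough sizing aid, the sketch below estimates how much data each original store has to hand off before the cluster is evenly balanced. It assumes PD's default of 3 replicas per region, a perfectly even spread of peers across stores, and it ignores compression, compaction lag and MVCC versions waiting for GC. The function names and the 1000 GB figure are hypothetical, for illustration only.

```python
def per_store_gb(logical_gb: float, replicas: int, stores: int) -> float:
    """Approximate data held per TiKV store once regions are evenly balanced.

    Assumption (hypothetical model): each region has `replicas` peers on
    distinct stores; compression, compaction lag and MVCC garbage are ignored.
    """
    if stores < replicas:
        raise ValueError("need at least as many stores as replicas")
    return logical_gb * replicas / stores


def gb_to_move_per_old_store(logical_gb: float, replicas: int,
                             old_stores: int, added_stores: int) -> float:
    """Data each original store must hand off to reach the new even share."""
    before = per_store_gb(logical_gb, replicas, old_stores)
    after = per_store_gb(logical_gb, replicas, old_stores + added_stores)
    return before - after


if __name__ == "__main__":
    # Hypothetical cluster: 1000 GB of logical data, 3 replicas, 3 -> 6 stores.
    print(per_store_gb(1000, 3, 3))                 # 1000.0
    print(per_store_gb(1000, 3, 6))                 # 500.0
    print(gb_to_move_per_old_store(1000, 3, 3, 3))  # 500.0
```

With 3 replicas on 3 stores, every store holds a full copy of every region, so under this model going to 6 stores means each old store should eventually shed about half of its data.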

Additionally, disk usage on the 3 original KV instances is still increasing, and their free space keeps shrinking. Why is this happening, and how can we prevent the old KV disks from filling up?