Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: How to resolve very high CDC latency

[TiDB Usage Environment] Production Environment

[TiDB Version] 4.0.13

[Encountered Problem: Phenomenon and Impact]

The upstream TiDB ran several full-table updates on a table with about 30 million rows, and applying them sequentially in the downstream MySQL causes significant delay. Is there a good solution? Could we back up the upstream table directly and restore it downstream? The concern is that CDC would then apply the same changes again. The root cause seems to be that the updates run sequentially in the upstream TiDB and are also executed sequentially in the downstream MySQL.

[Resource Configuration]

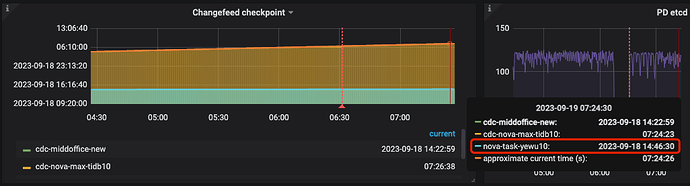

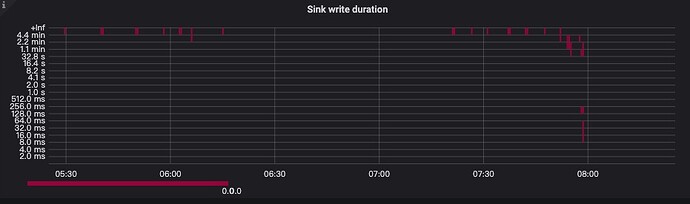

[Attachments: Screenshots/Logs/Monitoring]


Is the high latency caused by slow execution on the MySQL side?

Are you repeatedly updating every row in the table?

Five new columns were added, and the values of the original five columns were copied into them. This was necessary because TiDB 4.0.13 does not support changing the precision of a DOUBLE column.

How can I check that? In MySQL I can see the statements being executed one by one.

First, check the load and IO of the MySQL server.

Starting from v6.1, you can control whether TiCDC splits single-table transactions with the sink URI parameter `transaction-atomicity`. Splitting transactions can significantly reduce the latency and memory consumption of the MySQL sink when replicating large transactions. This option is not available in 4.0.13.
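To see why splitting helps, here is a conceptual sketch in Python, not TiCDC's implementation: one large upstream transaction is cut into consecutive, order-preserving batches, so a sink can flush and commit each batch instead of holding every row until one huge downstream commit. The function `split_rows` and the batch size are hypothetical; in TiCDC the behavior is selected through the sink URI (e.g. `transaction-atomicity=none`), not with code like this.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def split_rows(rows: Sequence[T], max_rows: int) -> Iterator[List[T]]:
    """Yield consecutive chunks of at most max_rows rows, in original order.

    Conceptual model only (hypothetical helper): with atomicity relaxed, a sink
    can commit a large upstream transaction as several smaller downstream
    transactions instead of buffering all rows for a single commit.
    """
    if max_rows <= 0:
        raise ValueError("max_rows must be positive")
    for start in range(0, len(rows), max_rows):
        yield list(rows[start:start + max_rows])


if __name__ == "__main__":
    # Hypothetical: one upstream transaction that changed 10 rows.
    changes = [f"row-{i}" for i in range(10)]
    for batch in split_rows(changes, max_rows=4):
        print(len(batch), batch[0], batch[-1])
```

The trade-off is that the downstream can briefly expose a partially applied upstream transaction, which is why splitting is opt-in.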

Check whether the upstream or downstream is slow.

Don't bother: MySQL can't keep up with this kind of replication. Import the data manually instead.

When TiCDC replicates a large volume of updates, latency can become significant. TiCDC has to apply upstream transactions to the downstream in their original order, and the downstream MySQL may not be able to execute them fast enough.
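A rough back-of-the-envelope estimate shows why ordered replay of full-table updates lags. The sketch assumes the downstream applies a steady number of rows per second; the throughput and the number of full updates in the example are hypothetical, not measurements from this cluster.

```python
def estimate_catchup_seconds(rows_per_update: int, full_updates: int,
                             downstream_rows_per_sec: float) -> float:
    """Time for the downstream to replay every changed row, applied in order.

    Assumes each full-table update produces one row change per row and that
    the downstream sustains a constant apply rate (a simplification).
    """
    if downstream_rows_per_sec <= 0:
        raise ValueError("downstream_rows_per_sec must be positive")
    return rows_per_update * full_updates / downstream_rows_per_sec


if __name__ == "__main__":
    # Hypothetical inputs: ~30 million rows, 2 full-table updates,
    # downstream MySQL applying 5,000 rows per second.
    seconds = estimate_catchup_seconds(30_000_000, 2, 5_000)
    print(f"{seconds:.0f} s (about {seconds / 3600:.1f} h)")
    # prints: 12000 s (about 3.3 h)
```

Each additional full-table update adds the same amount of work again, which is why repeated whole-table rewrites accumulate latency.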

Tune TiCDC parameters such as `per-table-memory-quota` and the MySQL sink URI parameters `max-txn-row` and `worker-count` to give TiCDC more memory and write concurrency.
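The sink-side parameters are passed as query parameters of the MySQL sink URI. A small Python sketch that assembles such a URI follows; the host, credentials, and the values 32 and 1024 are illustrative assumptions, not recommendations. `per-table-memory-quota` is not a sink URI parameter, so it is left out here.

```python
from urllib.parse import quote, urlencode


def build_mysql_sink_uri(host: str, port: int, user: str, password: str,
                         **params: object) -> str:
    """Build a TiCDC MySQL sink URI with optional query parameters.

    Keyword names use underscores (worker_count) and are converted to the
    hyphenated form used in the URI (worker-count).
    """
    # Percent-encode credentials so characters like '@' or ':' stay unambiguous.
    userinfo = f"{quote(user, safe='')}:{quote(password, safe='')}"
    uri = f"mysql://{userinfo}@{host}:{port}/"
    query = urlencode({key.replace("_", "-"): value for key, value in params.items()})
    return f"{uri}?{query}" if query else uri


if __name__ == "__main__":
    # Illustrative values only; tune against the downstream's real capacity.
    print(build_mysql_sink_uri("127.0.0.1", 3306, "root", "secret",
                               worker_count=32, max_txn_row=1024))
    # prints: mysql://root:secret@127.0.0.1:3306/?worker-count=32&max-txn-row=1024
```

The resulting string is what would be passed to `--sink-uri` when creating the changefeed. Higher `worker-count` only helps if the downstream MySQL has spare capacity to absorb the extra concurrent writes.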

Tune downstream MySQL parameters such as `innodb_flush_log_at_trx_commit`, `sync_binlog`, and `max_allowed_packet` to improve write performance. Note that relaxing `innodb_flush_log_at_trx_commit` and `sync_binlog` trades durability for speed.
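As a sketch of what that tuning could look like, the Python below renders `SET GLOBAL` statements for the three variables. The values are example assumptions that favor write speed over crash safety and only make sense if the downstream can be re-synced after a crash. `max_allowed_packet` only affects connections opened after the change, so existing TiCDC connections keep the old value until they reconnect.

```python
import re

# Example values (assumption): faster writes at the cost of durability.
RELAXED_SETTINGS = {
    # Write the redo log at commit but fsync it only about once per second.
    "innodb_flush_log_at_trx_commit": 2,
    # Let the operating system decide when to fsync the binary log.
    "sync_binlog": 0,
    # 64 MiB, leaving room for large batched statements.
    "max_allowed_packet": 64 * 1024 * 1024,
}


def render_set_global(settings: dict) -> list:
    """Render SET GLOBAL statements, rejecting anything that isn't a plain variable name."""
    statements = []
    for name, value in settings.items():
        if not re.fullmatch(r"[a-z_]+", name):
            raise ValueError(f"unexpected variable name: {name!r}")
        statements.append(f"SET GLOBAL {name} = {int(value)};")
    return statements


if __name__ == "__main__":
    for statement in render_set_global(RELAXED_SETTINGS):
        print(statement)
```

Reverting to `innodb_flush_log_at_trx_commit = 1` and `sync_binlog = 1` after the backlog is cleared restores the default durability guarantees.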

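If the table structure and data volume permit, consider backing up the table upstream and restoring it downstream to take the load off TiCDC. Note that BR restores only into TiDB clusters, so for a MySQL downstream a logical export (for example, with Dumpling) loaded into MySQL is needed instead.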
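On the concern that CDC would replay the changes after a manual import: one pattern is to load the downstream from a consistent snapshot taken at a known TSO (for example, Dumpling with `--snapshot`), remove the old changefeed, and create a new one whose `--start-ts` is that TSO, so only later changes are replicated. The Python sketch below only assembles that `cdc cli changefeed create` command; the PD address, sink URI, changefeed ID, and TSO are hypothetical placeholders. It assumes every table covered by the new changefeed matches the snapshot downstream, and the TSO must still be newer than the GC safe point.

```python
import shlex
from typing import List


def changefeed_create_cmd(pd_addr: str, sink_uri: str, changefeed_id: str,
                          start_ts: int) -> List[str]:
    """Assemble a `cdc cli changefeed create` command that starts at start_ts."""
    if start_ts <= 0:
        raise ValueError("start_ts must be a positive TSO")
    return [
        "cdc", "cli", "changefeed", "create",
        f"--pd={pd_addr}",
        f"--sink-uri={sink_uri}",
        f"--changefeed-id={changefeed_id}",
        # Replicate only changes committed after the snapshot TSO.
        f"--start-ts={start_ts}",
    ]


if __name__ == "__main__":
    # Hypothetical values: replace with the real PD address, sink, and snapshot TSO.
    cmd = changefeed_create_cmd("http://127.0.0.1:2379",
                                "mysql://root:secret@127.0.0.1:3306/",
                                "after-reload",
                                434_000_000_000_000_000)
    print(shlex.join(cmd))  # shell-quoted, ready to paste
```

Returning an argument list rather than a single string keeps the sink URI, which may contain `?` and `&`, safe to pass to `subprocess.run` without shell quoting issues.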

Changes are applied one row at a time, similar to MySQL's row-based binlog format, which is why MySQL is slow.
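The effect can be sketched as follows: upstream, one set-based statement such as `UPDATE t SET c1_new = c1` touches every row, but the change stream carries one change per row, so the downstream ends up executing one statement per row. Table `t`, columns `c1`, `c1_new`, and `id`, and the helper are hypothetical, and the SQL TiCDC actually generates may differ (for example, it may batch rows or use `REPLACE INTO`).

```python
from typing import Dict, List, Sequence


def expand_to_row_statements(table: str, pk: str,
                             rows: Sequence[Dict[str, object]],
                             copies: Dict[str, str]) -> List[str]:
    """Turn one set-based column copy into one UPDATE per row.

    Illustration only: values are rendered with repr() and not escaped,
    so this is only suitable for numeric data like the example below.
    """
    statements = []
    for row in rows:
        assignments = ", ".join(f"{dst} = {row[src]!r}" for src, dst in copies.items())
        statements.append(f"UPDATE {table} SET {assignments} WHERE {pk} = {row[pk]!r};")
    return statements


if __name__ == "__main__":
    # Two rows stand in for the ~30 million rows of the real table.
    rows = [{"id": 1, "c1": 1.5}, {"id": 2, "c1": 2.25}]
    for statement in expand_to_row_statements("t", "id", rows, {"c1": "c1_new"}):
        print(statement)
```

With 30 million rows, the single upstream statement becomes 30 million downstream statements, each needing a primary-key lookup and a write in MySQL.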
