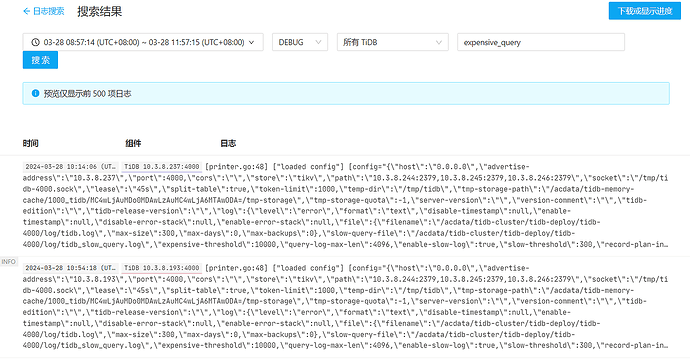

2024-03-28 10:54:18 (UTC+08:00)

TiDB 10.3.8.193:4000

[printer.go:48] [“loaded config”] [config=“{"host":"0.0.0.0","advertise-address":"10.3.8.193","port":4000,"cors":"","store":"tikv","path":"10.3.8.244:2379,10.3.8.245:2379,10.3.8.246:2379","socket":"/tmp/tidb-4000.sock","lease":"45s","split-table":true,"token-limit":1000,"temp-dir":"/tmp/tidb","tmp-storage-path":"/acdata/tidb-memory-cache/1000_tidb/MC4wLjAuMDo0MDAwLzAuMC4wLjA6MTAwODA=/tmp-storage","tmp-storage-quota":-1,"server-version":"","version-comment":"","tidb-edition":"","tidb-release-version":"","log":{"level":"error","format":"text","disable-timestamp":null,"enable-timestamp":null,"disable-error-stack":null,"enable-error-stack":null,"file":{"filename":"/acdata/tidb-cluster/tidb-deploy/tidb-4000/log/tidb.log","max-size":300,"max-days":0,"max-backups":0},"slow-query-file":"/acdata/tidb-cluster/tidb-deploy/tidb-4000/log/tidb_slow_query.log","expensive-threshold":10000,"query-log-max-len":4096,"enable-slow-log":true,"slow-threshold":300,"record-plan-in-slow-log":1},"instance":{"tidb_general_log":false,"tidb_pprof_sql_cpu":false,"ddl_slow_threshold":300,"tidb_expensive_query_time_threshold":60,"tidb_enable_slow_log":true,"tidb_slow_log_threshold":300,"tidb_record_plan_in_slow_log":1,"tidb_check_mb4_value_in_utf8":true,"tidb_force_priority":"NO_PRIORITY","tidb_memory_usage_alarm_ratio":0.8,"tidb_enable_collect_execution_info":true,"plugin_dir":"/data/deploy/plugin","plugin_load":"","max_connections":0,"tidb_enable_ddl":true,"tidb_rc_read_check_ts":false},"security":{"skip-grant-table":false,"ssl-ca":"","ssl-cert":"","ssl-key":"","cluster-ssl-ca":"","cluster-ssl-cert":"","cluster-ssl-key":"","cluster-verify-cn":null,"session-token-signing-cert":"","session-token-signing-key":"","spilled-file-encryption-method":"plaintext","enable-sem":false,"auto-tls":false,"tls-version":"","rsa-key-size":4096,"secure-bootstrap":false,"auth-token-jwks":"","auth-token-refresh-interval":"1h0m0s","disconnect-on-expired-password":true},"status":{"status-host":"0.0.0.0","metrics-addr":"","status-port":10080,"metrics-interval":15,"report-status":true,"record-db-qps":false,"grpc-keepalive-time":10,"grpc-keepalive-timeout":3,"grpc-concurrent-streams":1024,"grpc-initial-window-size":2097152,"grpc-max-send-msg-size":2147483647},"performance":{"max-procs":30,"max-memory":0,"server-memory-quota":0,"stats-lease":"3s","stmt-count-limit":5000,"pseudo-estimate-ratio":0.8,"bind-info-lease":"3s","txn-entry-size-limit":6291456,"txn-total-size-limit":4221225472,"tcp-keep-alive":true,"tcp-no-delay":true,"cross-join":true,"distinct-agg-push-down":false,"projection-push-down":false,"max-txn-ttl":3600000,"index-usage-sync-lease":"0s","plan-replayer-gc-lease":"10m","gogc":100,"enforce-mpp":false,"stats-load-concurrency":5,"stats-load-queue-size":1000,"analyze-partition-concurrency-quota":16,"enable-stats-cache-mem-quota":false,"committer-concurrency":128,"run-auto-analyze":true,"force-priority":"NO_PRIORITY","memory-usage-alarm-ratio":0.8,"enable-load-fmsketch":false},"prepared-plan-cache":{"enabled":true,"capacity":100,"memory-guard-ratio":0.1},"opentracing":{"enable":false,"rpc-metrics":false,"sampler":{"type":"const","param":1,"sampling-server-url":"","max-operations":0,"sampling-refresh-interval":0},"reporter":{"queue-size":0,"buffer-flush-interval":0,"log-spans":false,"local-agent-host-port":""}},"proxy-protocol":{"networks":"","header-timeout":5},"pd-client":{"pd-server-timeout":3},"tikv-client":{"grpc-connection-count":4,"grpc-keepalive-time":10,"grpc-keepalive-timeout":3,"grpc-compression-type":"none","commit-timeout":"41s","async-commit":{"keys-limit":256,"total-key-size-limit":4096,"safe-window":2000000000,"allowed-clock-drift":500000000},"max-batch-size":128,"overload-threshold":200,"max-batch-wait-time":0,"batch-wait-size":8,"enable-chunk-rpc":true,"region-cache-ttl":600,"store-limit":0,"store-liveness-timeout":"1s","copr-cache":{"capacity-mb":1000},"ttl-refreshed-txn-size":33554432,"resolve-lock-lite-threshold":16},"binlog":{"enable":false,"ignore-error":false,"write-timeout":"15s","binlog-socket":"","strategy":"range"},"compatible-kill-query":false,"pessimistic-txn":{"max-retry-count":256,"deadlock-history-capacity":10,"deadlock-history-collect-retryable":false,"pessimistic-auto-commit":false,"constraint-check-in-place-pessimistic":true},"max-index-length":3072,"index-limit":64,"table-column-count-limit":1017,"graceful-wait-before-shutdown":0,"alter-primary-key":false,"treat-old-version-utf8-as-utf8mb4":true,"enable-table-lock":false,"delay-clean-table-lock":0,"split-region-max-num":1000,"top-sql":{"receiver-address":""},"repair-mode":false,"repair-table-list":,"isolation-read":{"engines":["tikv","tiflash","tidb"]},"new_collations_enabled_on_first_bootstrap":true,"experimental":{"allow-expression-index":false},"skip-register-to-dashboard":false,"enable-telemetry":true,"labels":{},"enable-global-index":false,"deprecate-integer-display-length":false,"enable-enum-length-limit":true,"stores-refresh-interval":60,"enable-tcp4-only":false,"enable-forwarding":false,"max-ballast-object-size":0,"ballast-object-size":0,"transaction-summary":{"transaction-summary-capacity":500,"transaction-id-digest-min-duration":2147483647},"enable-global-kill":true,"enable-batch-dml":false,"mem-quota-query":1073741824,"oom-action":"cancel","oom-use-tmp-storage":true,"check-mb4-value-in-utf8":true,"enable-collect-execution-info":true,"plugin":{"dir":"/data/deploy/plugin","load":""},"max-server-connections":0,"run-ddl":true,"tidb-max-reuse-chunk":64,"tidb-max-reuse-column":256}”]